Location Configuration

Veeam Kasten can usually invoke protection operations such as snapshots within a cluster without requiring additional credentials. While this might be sufficient if Veeam Kasten is running in some of (but not all) the major public clouds and if actions are limited to a single cluster, it is not sufficient for essential operations such as performing real backups, enabling cross-cluster and cross-cloud application migration, and enabling DR of the Veeam Kasten system itself.

To enable these actions that span the lifetime of any one cluster, Veeam Kasten needs to be configured with access to external object storage or external NFS/SMB file storage. This is accomplished via the creation of Location Profiles.

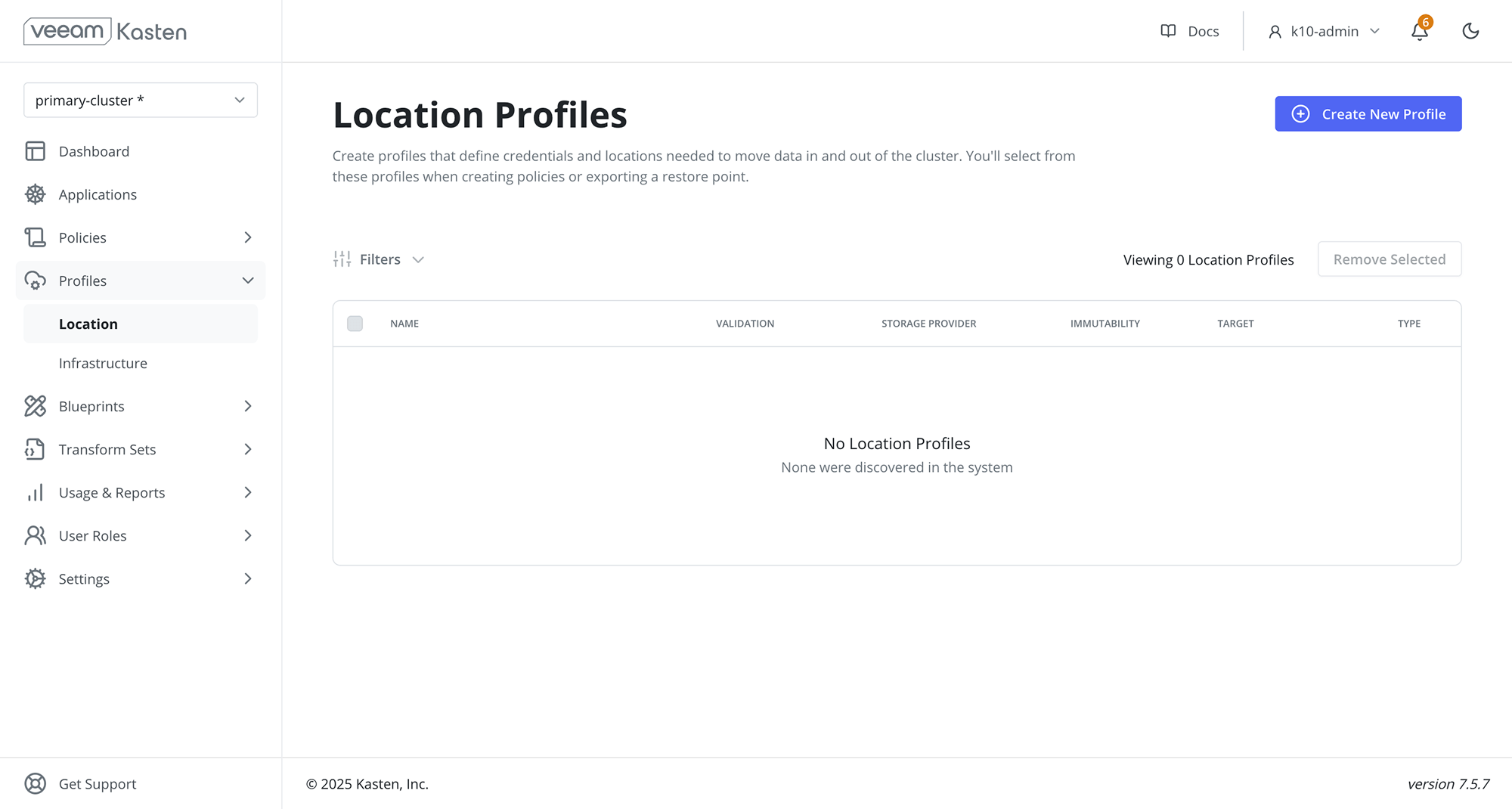

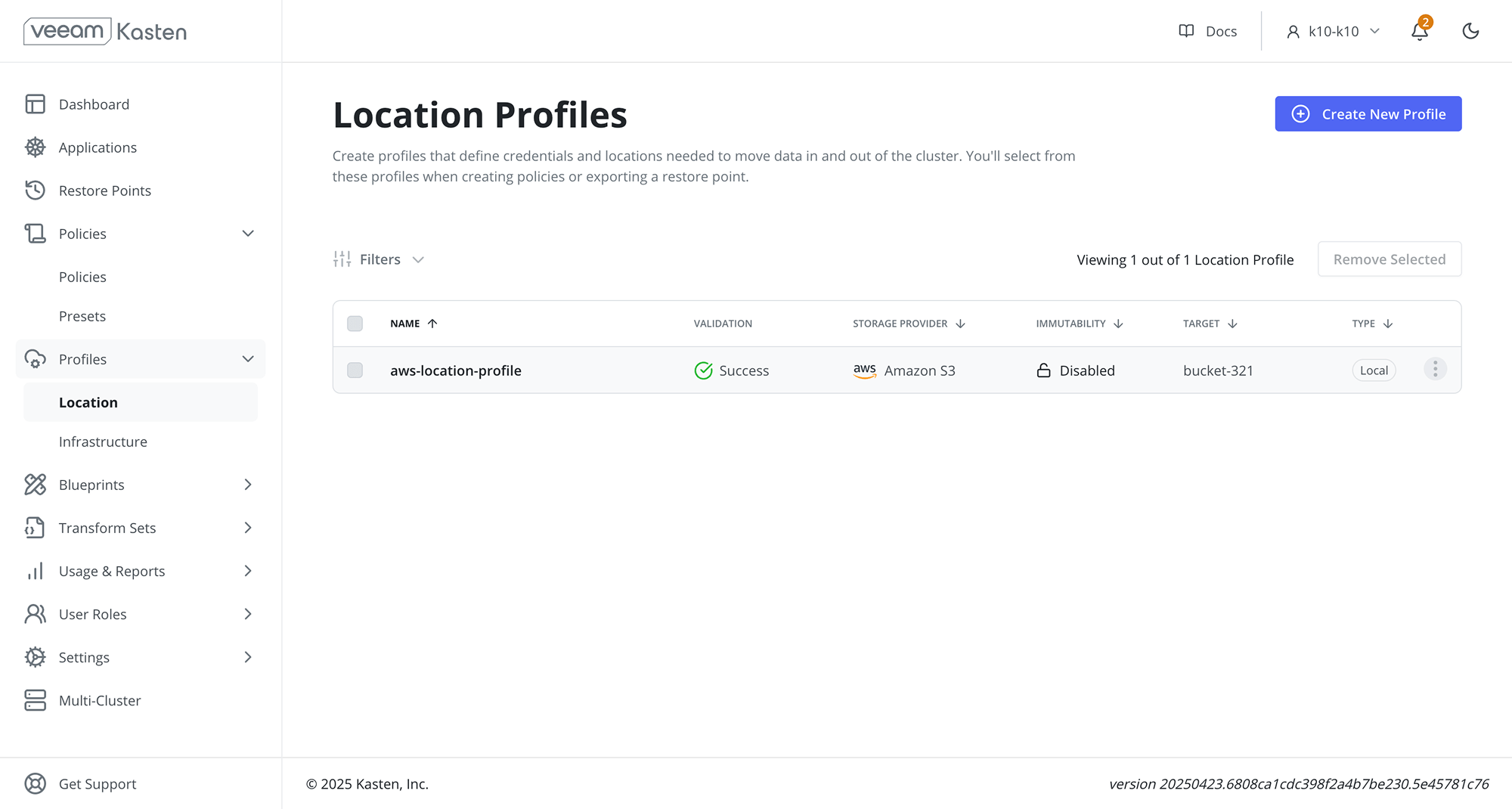

Location Profile creation can be accessed from the Location page of the Profiles menu in the navigation sidebar or via the CRD-based Profiles API.

Location Profiles

Location profiles are used to create backups from snapshots, to move applications and their data across clusters and potentially across different clouds, and to subsequently import these backups or exports into another cluster. To create a location profile, click Create New Profile on the profiles page.

Object Storage Location

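Support is available for the following object storage providers:

- Amazon S3 or S3 Compatible Storage

- Azure Storage

- Google Cloud Storage

- Veeam Data Cloud Vault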

Veeam Kasten creates Kopia repositories in object store locations. Veeam Kasten uses Kopia as a data mover which implicitly provides support to deduplicate, encrypt and compress data at rest. Veeam Kasten performs periodic maintenance on these repositories to recover released storage.

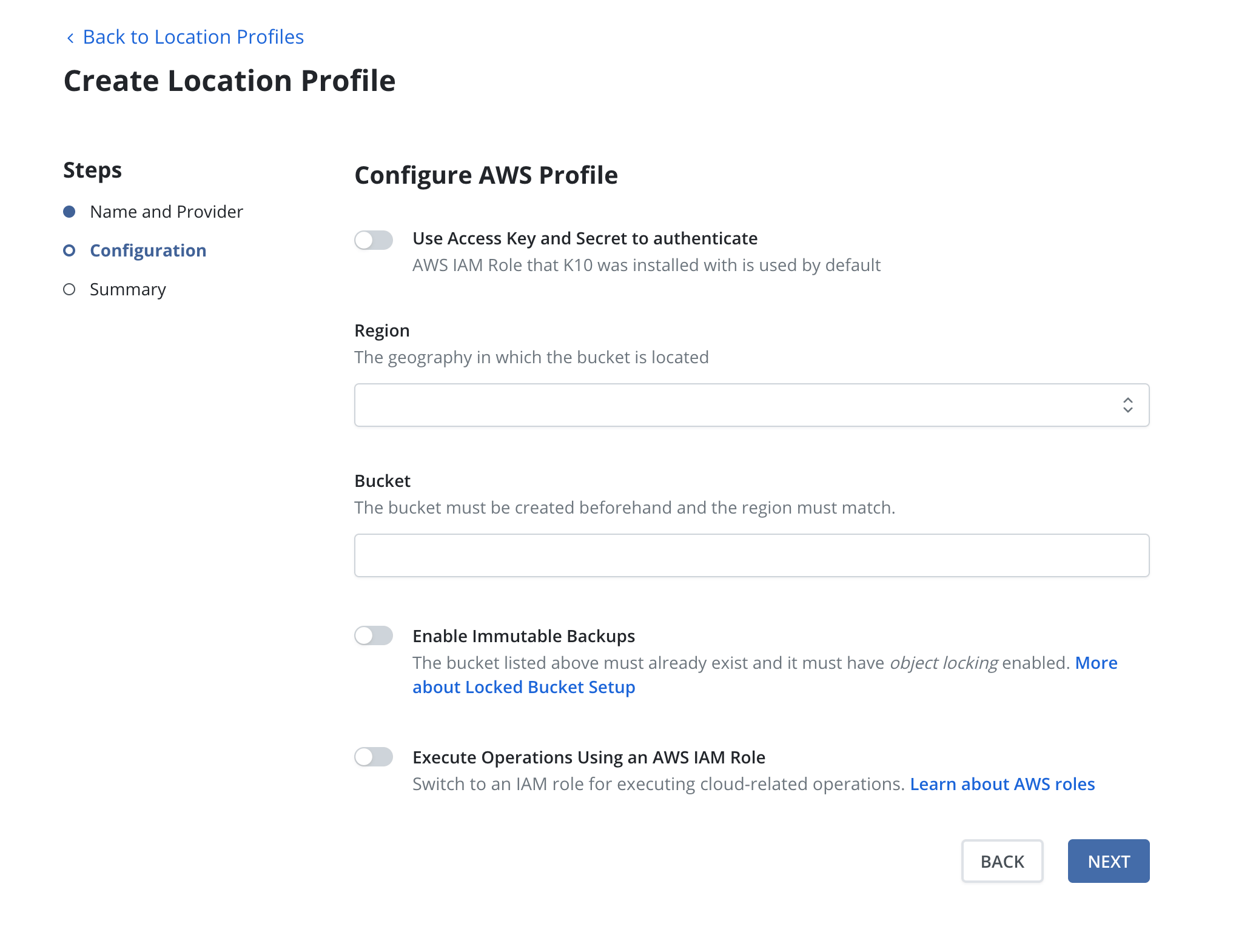

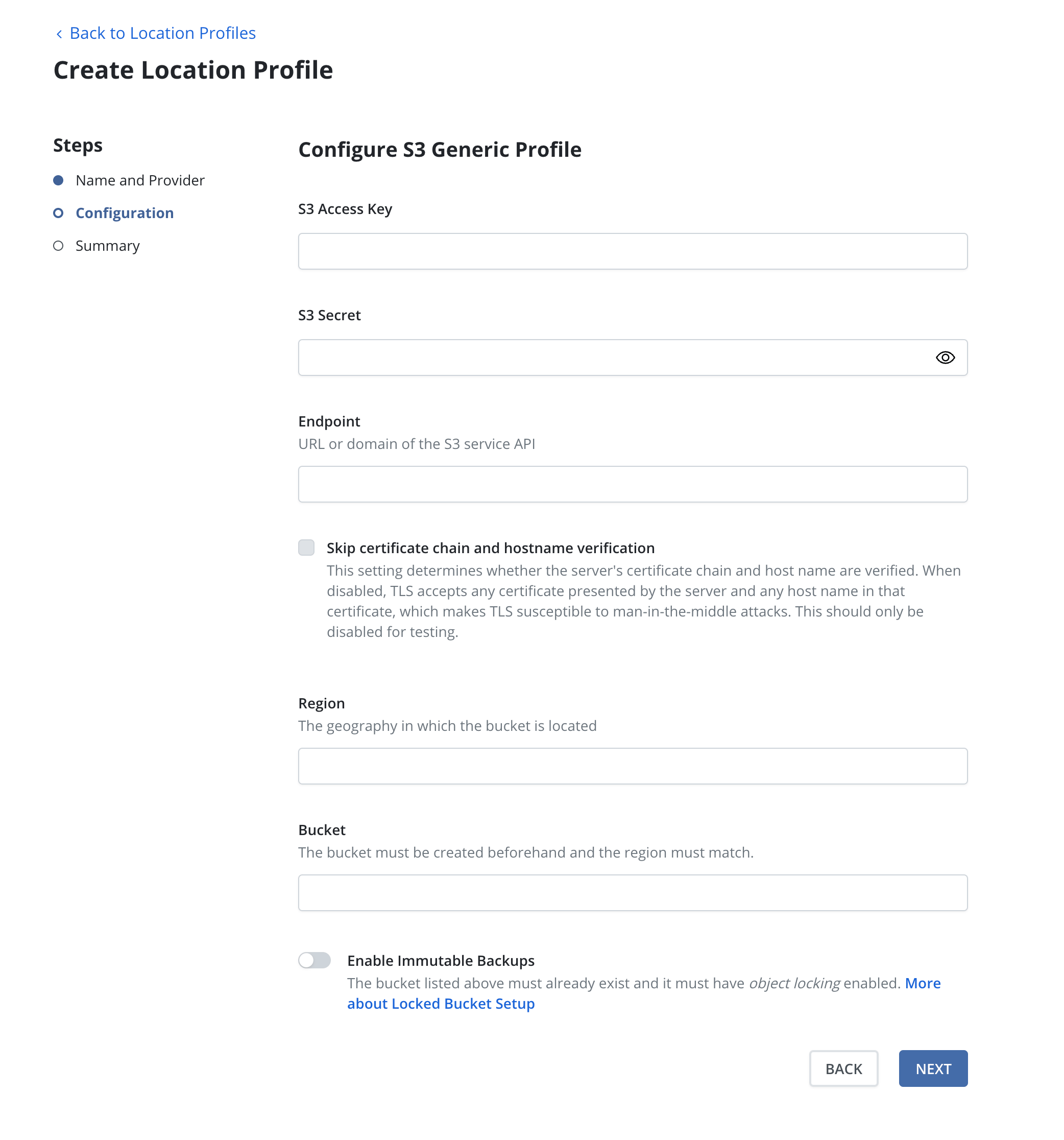

Amazon S3 or S3 Compatible Storage

Enter the access key and secret, select the region, and enter the bucket name. The bucket must be in the region specified. If the bucket has object locking enabled, set the Enable Immutable Backups toggle (see Immutable Backups for details). If the bucket is using S3 Intelligent-Tiering, only Standard-IA, One Zone-IA and Glacier Instant Retrieval storage classes are supported by Veeam Kasten.

An IAM role may be specified for an Amazon S3 location profile by selecting the Execute Operations Using an AWS IAM Role button.

If an S3-compatible object storage system is used that is not hosted by one of the supported cloud providers, an S3 endpoint URL will need to be specified and optionally, SSL verification might need to be disabled. Disabling SSL verification is only recommended for test setups.

When a location profile is created, the config profile will be created, and a profile similar to the following will appear:

The minimum supported version for NetApp ONTAP S3 is 9.12.1.

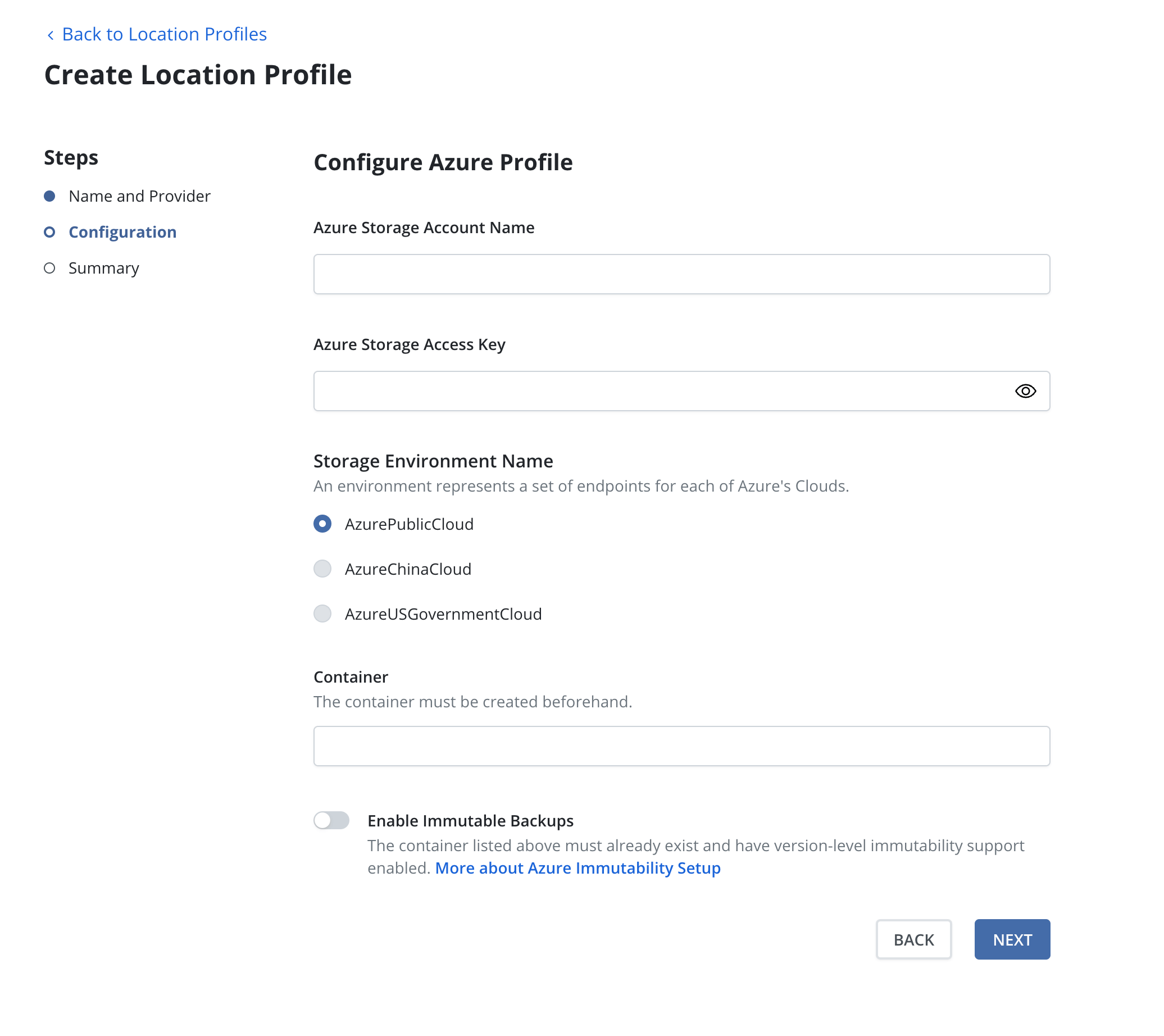

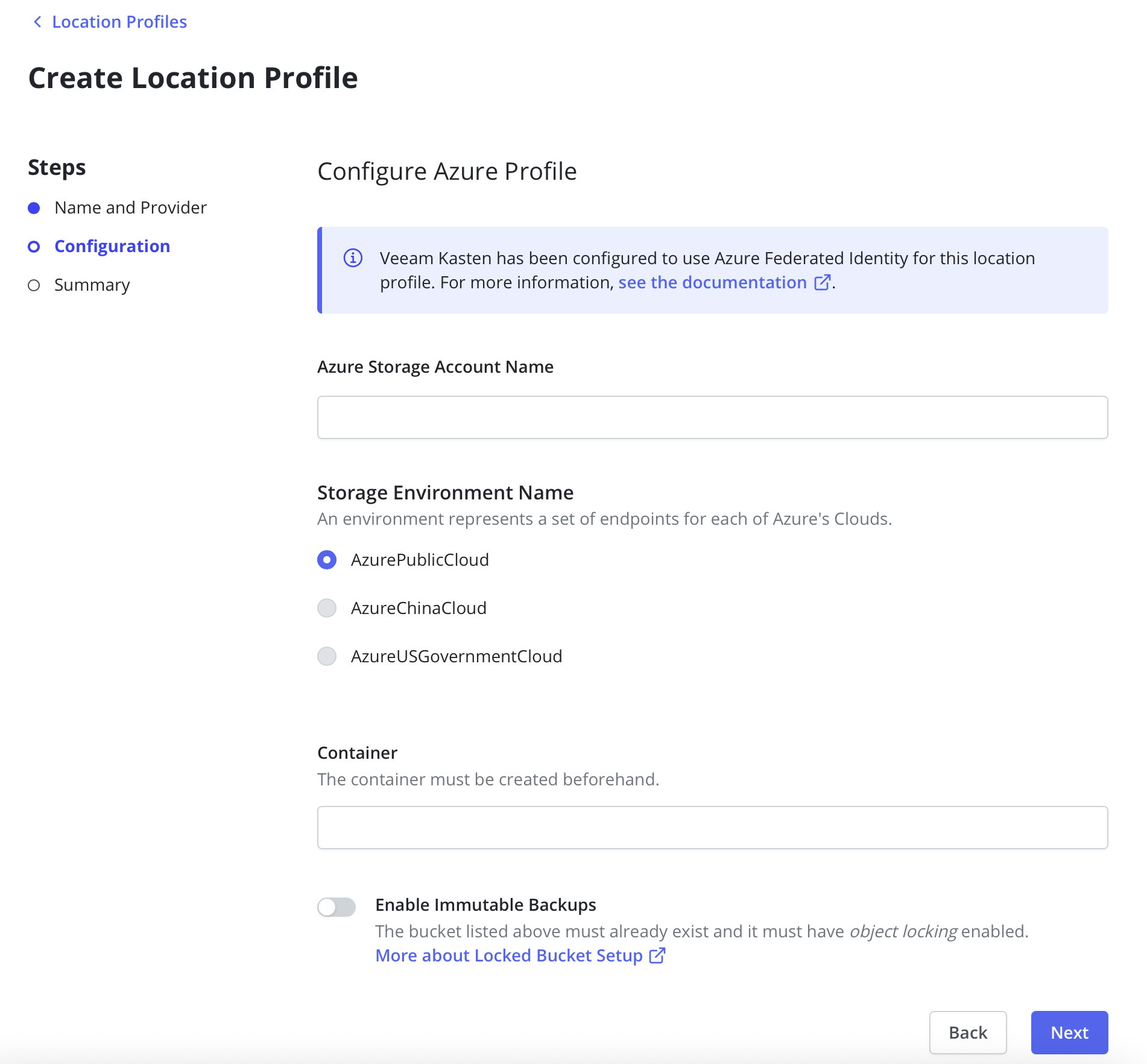

Azure Storage

To use an Azure storage location, you are required to pick an Azure Storage Account, a Cloud Environment, and a Container. The Container must be created beforehand.

Azure Federated Identity

Veeam Kasten supports authenticating Azure location profiles with Azure Federated Identity credentials. An Azure Storage Access Key is not required. When using Azure Federated Identity all Azure location profiles will authenticate with Federated Identity credentials.

Learn more about installing OpenShift on Azure.

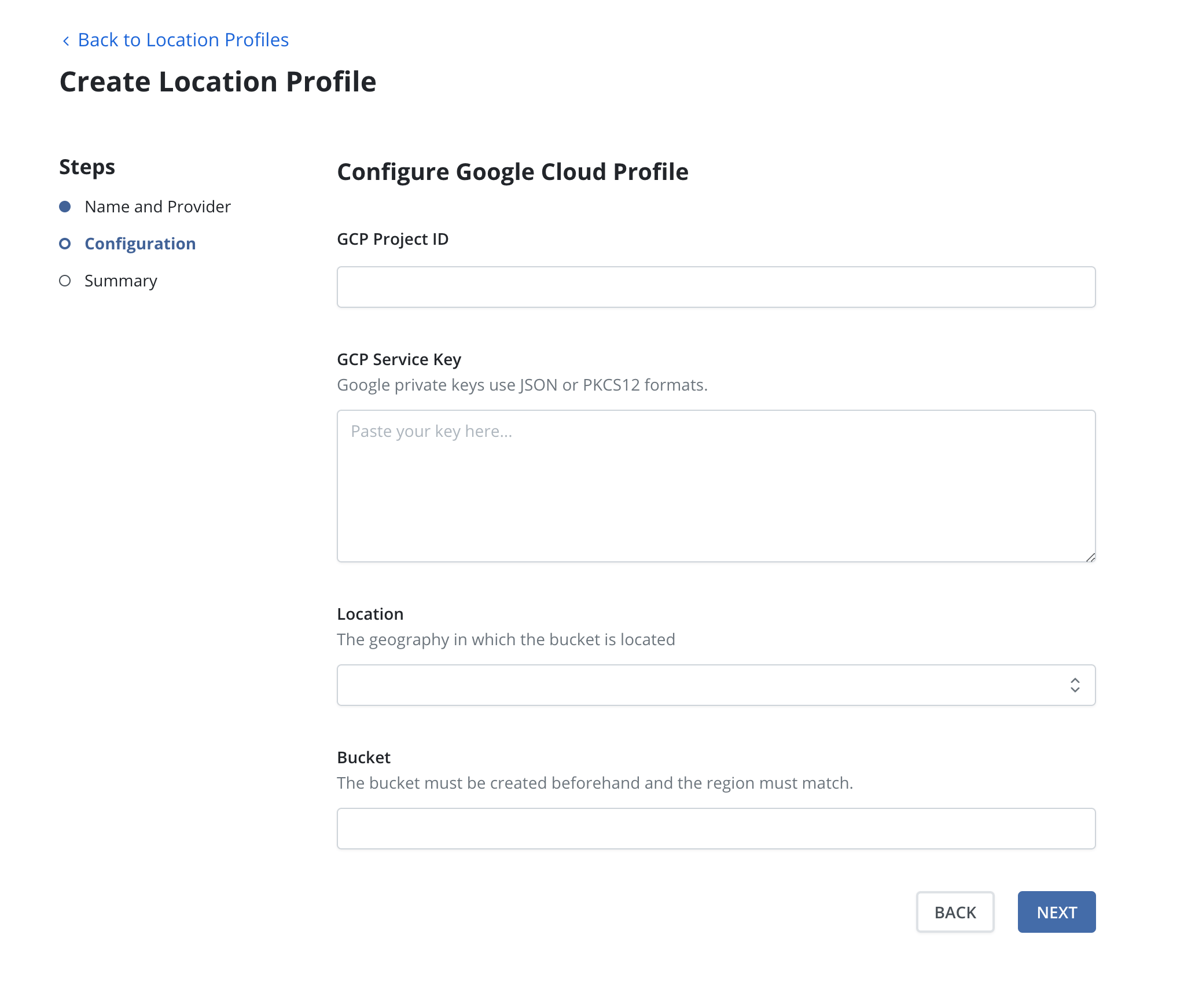

Google Cloud Storage

In addition to authenticating with Google Service Account credentials, Veeam Kasten also supports authentication with Google Workload Identity Federation with Kubernetes as the Identity Provider.

In order to use Google Workload Identity Federation, some additional Helm settings are necessary. Please refer to Installing Veeam Kasten with Google Workload Identity Federation for details on how to install Veeam Kasten with these settings.

Enter the project identifier and the appropriate credentials, i.e., the service key for the Google Service Account or the credential configuration file for Google Workload Identity Federation. Credentials should be in JSON or PKCS12 format. Then, select the region and enter a bucket name. The bucket must be in the specified location.

When using Google Workload Identity Federation with Kubernetes as the Identity Provider, ensure that the credential configuration file is configured with the format type (--credential-source-type) set to Text, and specify the OIDC ID token path (--credential-source-file) as /var/run/secrets/kasten.io/serviceaccount/GWIF/token.
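The two settings above can be checked before the file is pasted into the profile form. The following is a minimal sketch, assuming the credential configuration file uses the external-account JSON layout in which the token source is described under credential_source (with file and format.type keys). The function name is hypothetical, and treating a missing format block as text is also an assumption.

```python
import json
from typing import List

# Token path stated in the text above.
EXPECTED_TOKEN_PATH = "/var/run/secrets/kasten.io/serviceaccount/GWIF/token"


def check_gwif_credential_config(raw_json: str) -> List[str]:
    """Return a list of problems found in a credential configuration file.

    Assumes the external-account JSON layout, where the token source is
    described under "credential_source" (an assumption, not from the text).
    """
    problems = []
    config = json.loads(raw_json)
    source = config.get("credential_source", {})

    # The OIDC ID token path must match the path given in the documentation.
    if source.get("file") != EXPECTED_TOKEN_PATH:
        problems.append("credential_source.file does not match the expected token path")

    # Assumption: an absent "format" block is treated as text.
    format_type = source.get("format", {}).get("type", "text")
    if format_type.lower() != "text":
        problems.append("credential_source format type is not text")

    return problems


# Illustrative configuration containing only the fields inspected here.
sample = json.dumps({
    "type": "external_account",
    "credential_source": {
        "file": EXPECTED_TOKEN_PATH,
        "format": {"type": "text"},
    },
})
print(check_gwif_credential_config(sample))
```

An empty list means both settings match; any other fields in the file are ignored by this check.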

Veeam Data Cloud Vault

A Veeam Data Cloud Vault Repository may be used as the destination for persistent volume snapshot data in compatible environments.

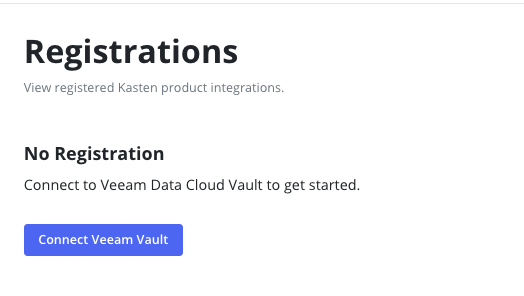

Prior to creating a Veeam Data Cloud Vault location profile within Veeam Kasten, a Kasten instance must first be registered with Veeam Data Cloud. Visit Settings > Registration to start that process. See the Veeam Data Cloud Vault Integration Guide for additional details.

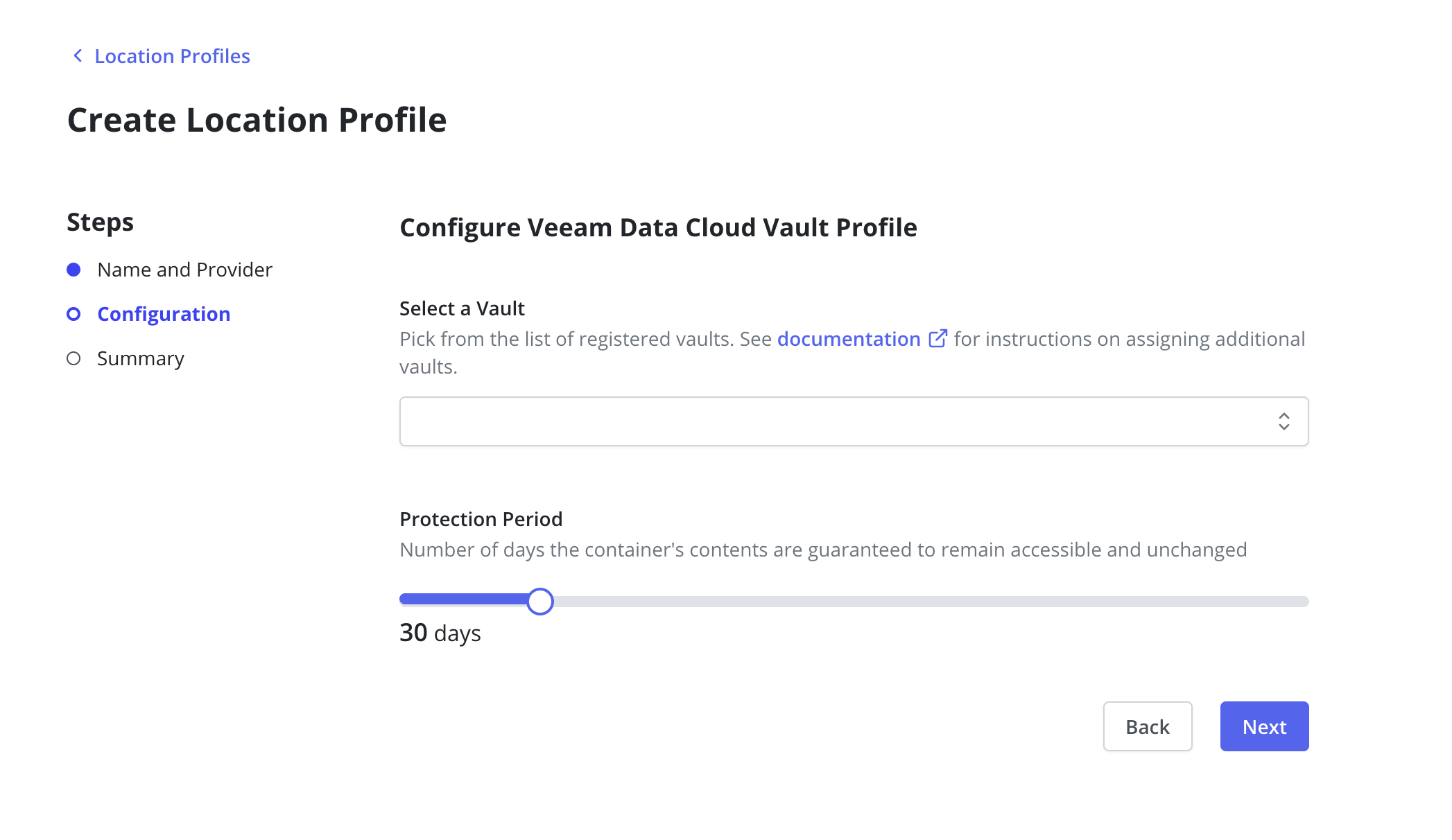

To create a Veeam Data Cloud Vault location profile, select Create New Profile and specify Veeam Data Cloud Vault as the provider type.

Select one of the storage vaults assigned to this Veeam Kasten Backup Server. If you haven't yet assigned a storage vault to this registered cluster, you'll have to visit your VDC Account page to configure that. For more information on that process, please visit the Veeam Data Cloud Vault user guide.

Upon clicking Submit, the dialog will validate the input data.

If registration occurred recently, it can take 30 minutes for the change to propagate. If location profile validation fails and you have recently completed the registration or vault assignment steps, wait and try again later.

All Veeam Vault locations are configured as immutable; follow these instructions to learn more about configuration within Veeam Kasten.

Considerations

The following limitations should be considered when exporting data from Veeam Kasten to Veeam Vault:

- To use Veeam Vault as an export location, Kasten requires, at a minimum, that egress access be allowed to the following FQDNs:

  - https://baaas.butler.veeam.com

  - https://*.blob.core.windows.net

- Veeam Vault is a generic object storage repository.

- All Veeam Vault exports are immutable.

- Data captured in Veeam Vault remains (and continues to incur charges) until the retention period expires, even if the location profile is removed from a Kasten installation.

- While Veeam Vault can be used to protect Kubernetes data from any on-premises or cloud environment, when running in Azure, Veeam highly recommends that the Kasten cluster be located in the same Azure region as the Veeam Vault storage account to limit possible ingress and egress data charges.

- Removing the Veeam Vault location profile will not remove any data from Veeam Vault and prevents Kasten from running future cleanup actions.

File Storage Location

You can use either NFS or SMB file storage as a location profile. The setup process for each is described below.

To avoid issues on NFS/SMB servers with limited storage, new exports are prevented from starting when storage is 95% full. Veeam Kasten automatically recovers storage as it cleans up expired snapshots, but reaching this state may signal a need to update retention settings or expand storage.
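To anticipate this condition, the usage of a mounted share can be compared against the same threshold. The following is a minimal sketch, not Veeam Kasten's own check: the function names and the mount path argument are hypothetical, and treating exactly 95% as "full" is an assumption.

```python
import shutil

# Threshold stated in the text: new exports are prevented at 95% usage.
FULL_THRESHOLD_PERCENT = 95


def exports_blocked(used_bytes: int, total_bytes: int) -> bool:
    """Return True when usage has reached the 95% threshold.

    Integer arithmetic avoids floating-point rounding at the boundary.
    """
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return used_bytes * 100 >= total_bytes * FULL_THRESHOLD_PERCENT


def share_is_nearly_full(mount_path: str) -> bool:
    """Apply the check to a locally mounted share (mount_path is hypothetical)."""
    usage = shutil.disk_usage(mount_path)
    return exports_blocked(usage.used, usage.total)
```

For example, share_is_nearly_full("/mnt/backups") could be run on a host where the share is mounted; the path is illustrative only.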

NFS File Storage

Requirements:

- An NFS server reachable from all nodes where Veeam Kasten is installed.

- An exported NFS share, mountable on all nodes.

- A PersistentVolume (PV) and PersistentVolumeClaim (PVC) in the Veeam Kasten namespace.

Steps:

Specification details will vary based on environment. Consult Kubernetes distribution documentation for additional details on configuring NFS connections.

-

Create a PersistentVolume for the NFS share:

apiVersion: v1

kind: PersistentVolume

metadata:

name: nfs-pv

spec:

capacity:

storage: 10Gi

volumeMode: Filesystem

accessModes:

- ReadWriteMany

persistentVolumeReclaimPolicy: Retain

storageClassName: ""

mountOptions:

- hard

- nfsvers=4.1

nfs:

path: /

server: 172.17.0.2 -

Create a PersistentVolumeClaim in the Veeam Kasten namespace:

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: test-pvc

namespace: kasten-io

spec:

storageClassName: ""

volumeName: nfs-pv

accessModes:

- ReadWriteMany

resources:

requests:

storage: 10Gi -

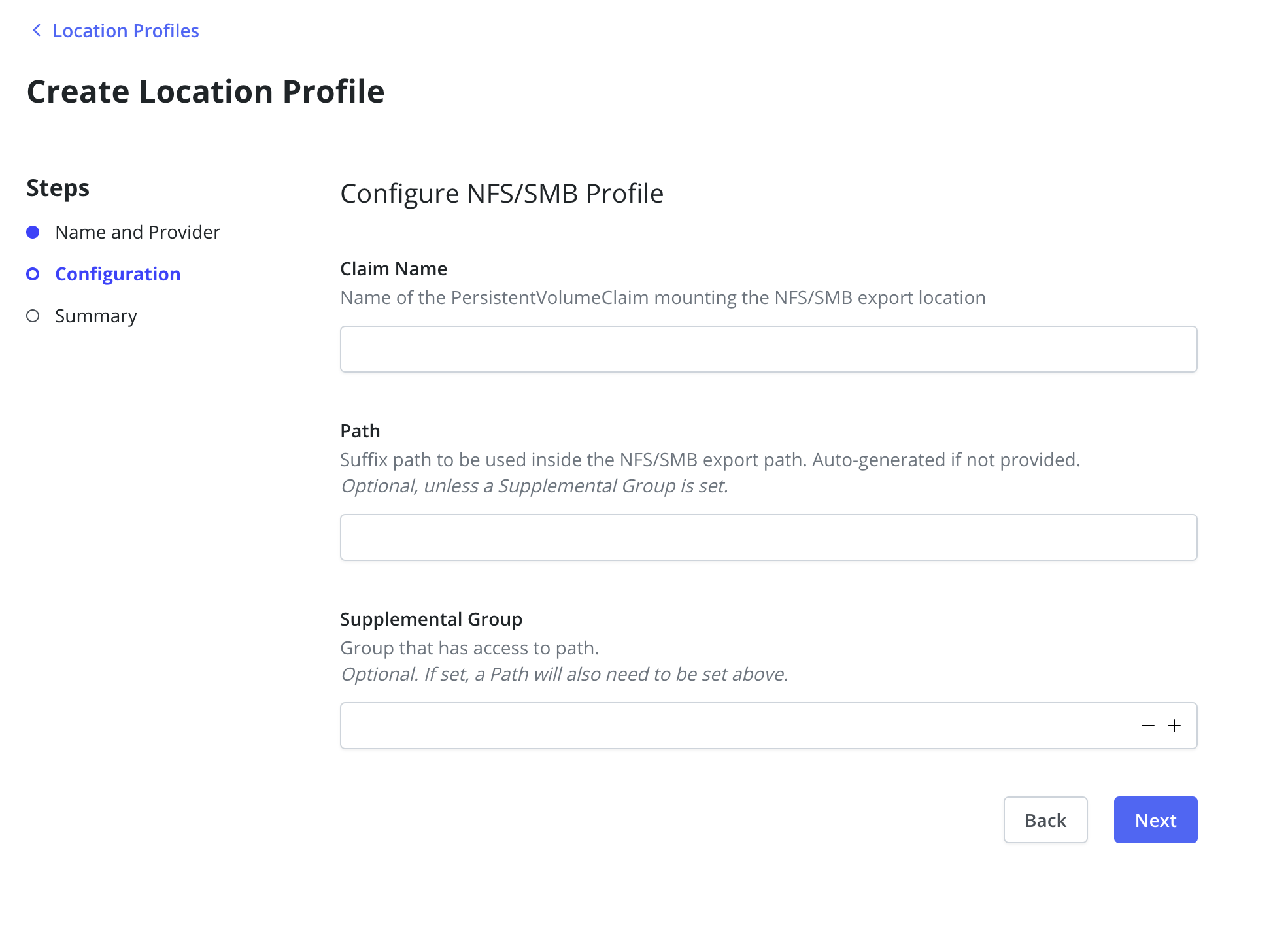

Create an NFS/SMB Location Profile referencing the previously created PVC:

By default, Veeam Kasten uses the root user to access the NFS location profile. To use a different user, set the Supplemental Group and Path fields. The Path must refer to a directory within the PVC, and the group must have read, write, and execute access.
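The group access requirement can be verified on a host where the share is mounted. The following is a minimal sketch, assuming POSIX mode bits apply to the share and that the directory's owning group is the supplemental group; both function names are hypothetical.

```python
import os
import stat


def group_has_rwx(mode: int) -> bool:
    """Return True when the group permission bits include read, write, and execute."""
    return (mode & stat.S_IRWXG) == stat.S_IRWXG


def directory_ready_for_group(path: str, gid: int) -> bool:
    """Check that path is a directory owned by group gid with group rwx access.

    Assumption: the supplemental group is the owning group of the directory.
    """
    info = os.stat(path)
    return (
        stat.S_ISDIR(info.st_mode)
        and info.st_gid == gid
        and group_has_rwx(info.st_mode)
    )
```

A directory with mode 0770 passes the mode check, while 0750 does not because the group lacks write access.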

SMB File Storage

Requirements:

- An SMB server reachable from all nodes where Veeam Kasten is installed.

- An exported SMB share, mountable on all nodes.

- A PersistentVolume (PV) and PersistentVolumeClaim (PVC) in the Veeam Kasten namespace (default: kasten-io).

Steps:

The following example is specific to Red Hat OpenShift and the smb.csi.k8s.io storage provisioner. For other Kubernetes distributions or provisioners, consult the appropriate documentation.

- Create a PersistentVolume for the SMB share:

      apiVersion: v1
      kind: PersistentVolume
      metadata:
        annotations:
          pv.kubernetes.io/provisioned-by: smb.csi.k8s.io
        name: smb-pv
      spec:
        capacity:
          storage: 100Gi
        accessModes:
          - ReadWriteMany
        persistentVolumeReclaimPolicy: Retain
        storageClassName: ""
        mountOptions:
          - dir_mode=0777
          - file_mode=0777
        csi:
          driver: smb.csi.k8s.io
          volumeHandle: smb-server.default.svc.cluster.local/share#
          volumeAttributes:
            source: //<hostname>/<shares>
          nodeStageSecretRef:
            name: smbcreds
            namespace: samba-server

- Create a Secret for SMB credentials:

      apiVersion: v1
      kind: Secret
      metadata:
        name: smbcreds
        namespace: samba-server
      stringData:
        username: <username>
        password: <password>

  - volumeHandle: Unique identifier for the volume.

  - volumeAttributes.source: UNC path to the SMB share.

  - nodeStageSecretRef: References the Secret with SMB credentials.

- Create a PersistentVolumeClaim in the Veeam Kasten namespace:

      apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: test-pvc
        namespace: kasten-io
      spec:
        storageClassName: ""
        volumeName: smb-pv
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 10Gi

- Create an NFS/SMB Location Profile referencing the previously created PVC:

By default, Veeam Kasten uses the root user to access the SMB location. To use a different user, set the Supplemental Group and Path fields. The Path must refer to a directory within the PVC, and the group must have read, write, and execute access.

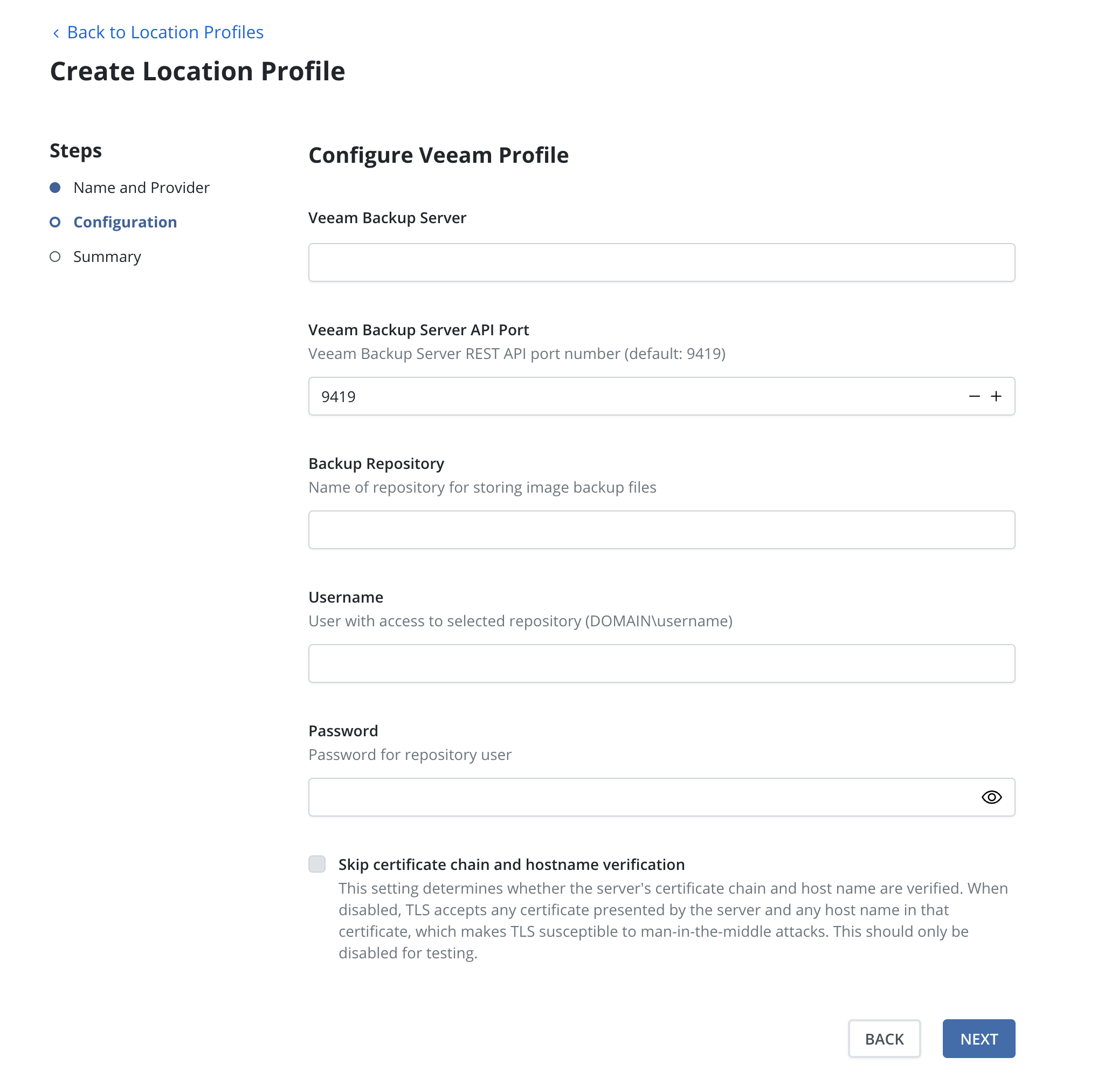

Veeam Repository Location

A Veeam Repository may be used as the destination for persistent volume snapshot data in compatible environments. See Storage Integration for additional details.

To create a Veeam Repository location profile, select Create New Profile and specify Veeam Repository as the provider type.

Provide the DNS name or the IP address of the Veeam backup server in the Veeam Backup Server field. The Veeam Backup Server API Port field is pre-configured with the installation default value and may be changed if necessary. Specify the name of a backup repository on this server in the Backup Repository field.

Using more than one unique VBR backup server per Veeam Kasten instance is not supported and may cause synchronization issues between Veeam Kasten and VBR. Creating multiple location profiles connecting to different instances of VBR will produce a warning and should only be performed during temporary reconfiguration of an environment.

Provide access credentials in the Username and Password fields.

Ensure Access Permissions are granted within VBR for the specified account (or related security group) and backup repository. See Veeam Kasten Integration Guide for details.

Upon clicking Submit, the dialog will validate the input data.

Communication with the server uses SSL and requires that the server's certificate be trusted by Veeam Kasten. Alternatively, enabling the Skip certificate chain and hostname verification option disables certificate validation.

If using an immutable Veeam Repository, follow these instructions to ensure proper configuration within Veeam Kasten.

Location Settings for Migration

If the location profile is used for exporting an application for cross-cluster migration, it will be used to store application restore point metadata and, when moving across infrastructure providers, bulk data too. Similarly, location profiles are also used for importing applications into a cluster that is different than the source cluster where the application was captured.

For an NFS/SMB File Storage Location, the exported NFS/SMB share must be reachable from the destination cluster and mounted on all the nodes where Veeam Kasten is installed.

Read-Only Location Profile

Veeam Kasten supports read-only location profiles for import and restore operations. These profiles require no write permissions, as the system performs only read operations during these phases.

Read-only profiles can only be used for import and restore operations. Attempting to use them for backup or export operations will result in an error.

This configuration is particularly useful when:

- Working with immutable backups

- Implementing strict security policies

- Accessing shared backup repositories

Immutable Backups

The frequency of ransomware attacks on enterprise customers is increasing. Backups are essential for recovering from these attacks, acting as a first line of defense for recovering critical data. Attackers are now targeting backups as well, to make it more difficult, if not impossible, for their victims to recover.

Veeam Kasten can leverage object-locking and immutability features available in many object store providers to ensure its exported backups are protected from tampering. When exporting to a locked bucket, the restore point data cannot be deleted or modified within a set period, even with administrator privileges. If an attacker obtains privileged object store credentials and attempts to disrupt the backups stored there, Veeam Kasten can restore the protected application by reading back the original, immutable and unadulterated restore point.

Immutable backups are supported for AWS S3 and other S3 compatible object stores. Additionally, they are supported for Azure and Google.

For more information, see the full Immutable Backups Workflow.

The generic storage and shareable volume backup and restore workflows are not compatible with the protections afforded by immutable backups. A location profile enabled for immutable backups can still be used for backup and restore in these workflows, but the protection period is ignored, and the profile is treated as a non-immutability-enabled location. Note that using an object-locking bucket for such use cases can amplify storage usage without any additional benefit. Please contact support for any inquiries.

S3 Locked Bucket Setup

To prepare Veeam Kasten to export immutable backups, a bucket must be prepared in advance.

- The bucket must be created on AWS S3 or an S3 compatible object store.

- The bucket must be created with object locking enabled. Note: On some S3-compatible implementations, the object locking property of a bucket can only be enabled/configured at bucket creation time.

The following sample script uses the MinIO client (mc) to set up a locked bucket in AWS S3 that is eligible for immutable backups:

    ## Set up the following variables:
    BUCKET_NAME=<choose a unique bucket name>
    REGION=<pick the region for the bucket>
    AWS_ACCESS_KEY_ID=<access key ID>
    AWS_SECRET_ACCESS_KEY=<secret access key>

    ## Alias the s3 account credentials
    mc alias set s3 https://s3.amazonaws.com ${AWS_ACCESS_KEY_ID} ${AWS_SECRET_ACCESS_KEY}

    ## Make the bucket with locking enabled
    mc mb --region=${REGION} s3/${BUCKET_NAME} --with-lock

For more information on setting up object locking, see Using S3 Object Lock.

Profile Creation

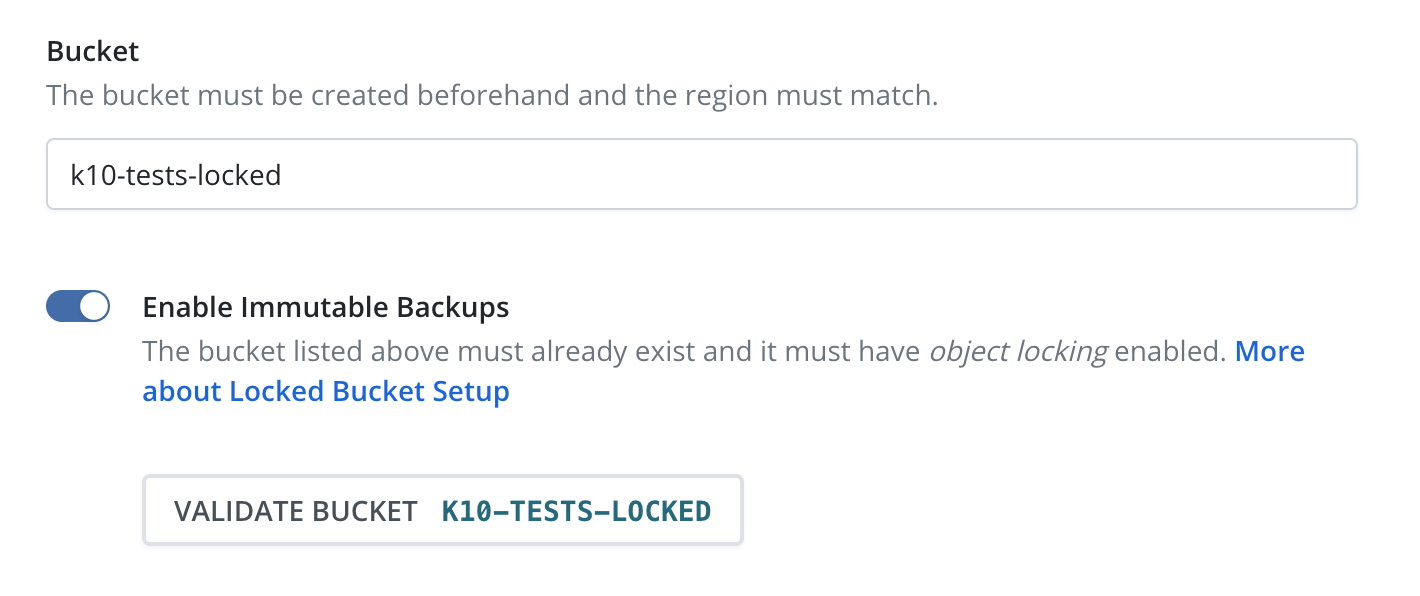

Once a bucket has been prepared with each of these requirements met, a profile can be created from the Veeam Kasten dashboard to point to it.

Follow the steps for setting up a profile as normal. Enter a profile name, object store credentials, region, and bucket name.

It is recommended that the credentials provided to access the locked bucket are granted minimal permissions only:

- List objects

- List object versions

- Determine if bucket exists

- Get object lock configuration

- Get bucket versioning state

- Get/Put object retention

- Get/Put/Remove object

- Get object metadata

See Using Veeam Kasten with AWS S3 for a list of required permissions.

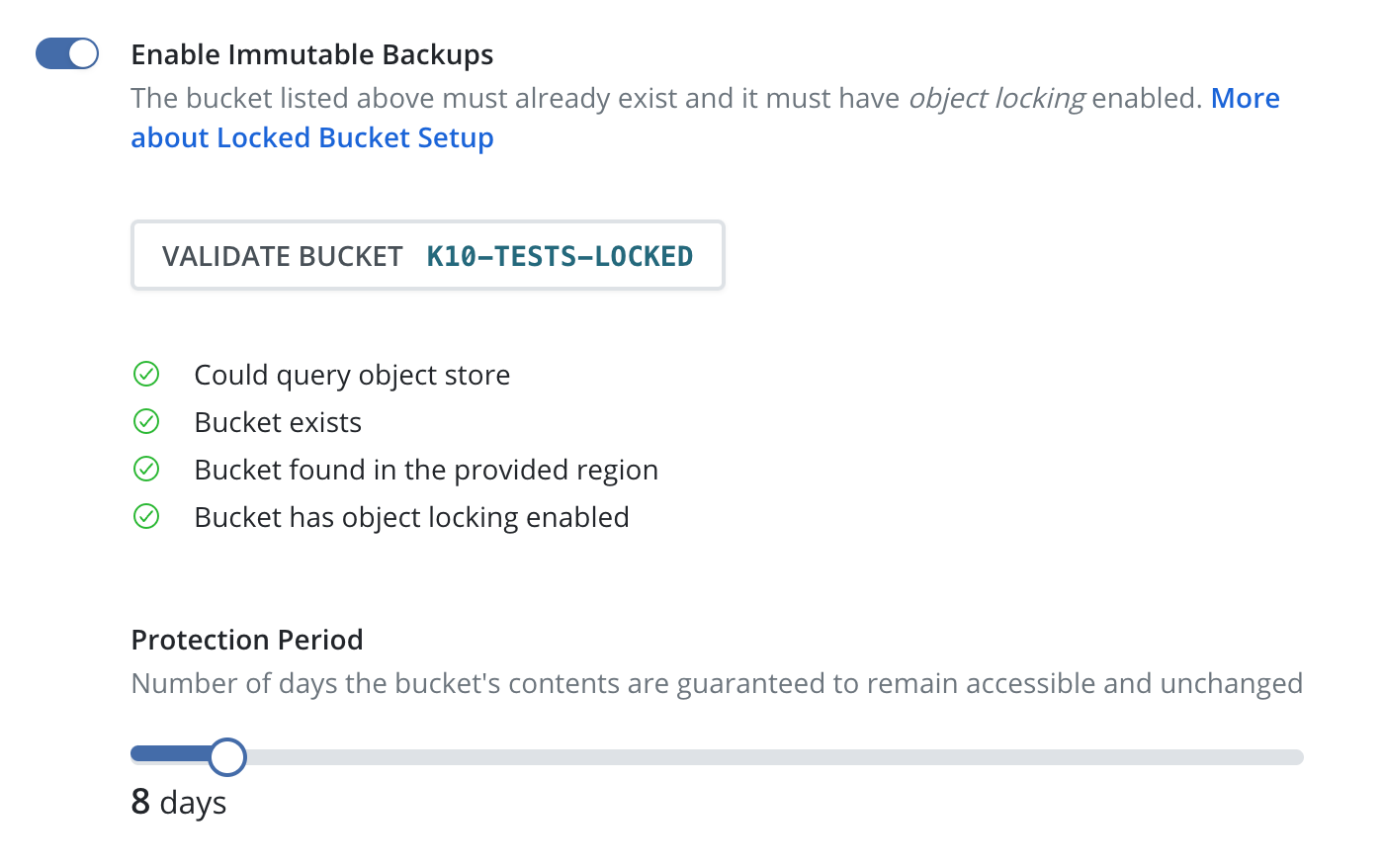

After selecting the checkbox labeled "Enable Immutable Backups" a new button labeled "Validate Bucket <bucket-name>" will appear. Click the Validate Bucket button to initiate a series of checks against the bucket, verifying the bucket can be reached and meets all of the requirements denoted above. All conditions must be met for the check to succeed and for the profile form to be submitted.
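Before using the Validate Bucket button, the same bucket properties can be inspected independently. The following is a rough sketch and not the validation Veeam Kasten itself performs: it assumes the third-party boto3 library and ambient AWS credentials, and the function names are hypothetical. It checks only that the bucket is reachable, that object locking is enabled, and that versioning is enabled.

```python
def bucket_is_lock_ready(lock_response: dict, versioning_response: dict) -> bool:
    """Interpret S3 API responses: object lock and versioning must both be enabled."""
    lock_enabled = (
        lock_response.get("ObjectLockConfiguration", {}).get("ObjectLockEnabled")
        == "Enabled"
    )
    versioning_enabled = versioning_response.get("Status") == "Enabled"
    return lock_enabled and versioning_enabled


def check_bucket(bucket_name: str) -> bool:
    """Query a bucket with boto3 and report whether it looks lock-ready.

    boto3 is imported lazily so the helper above has no third-party
    dependency. The calls raise botocore ClientError when the bucket is
    unreachable or has no object lock configuration.
    """
    import boto3  # third-party dependency (assumption: installed and configured)

    s3 = boto3.client("s3")
    s3.head_bucket(Bucket=bucket_name)  # determine if the bucket exists
    lock = s3.get_object_lock_configuration(Bucket=bucket_name)
    versioning = s3.get_bucket_versioning(Bucket=bucket_name)
    return bucket_is_lock_ready(lock, versioning)
```

The three calls in check_bucket correspond to the "Determine if bucket exists", "Get object lock configuration", and "Get bucket versioning state" permissions listed above.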

If the provided bucket meets all of the conditions, a Protection Period slider will appear. The protection period is a user-selectable time period that Veeam Kasten will use when maintaining an ongoing immutable retention period for each exported restore point. A longer protection period means a longer window in which to detect, and safely recover from, an attack; backup data remains immutable and unadulterated for longer. The trade-off is increased storage costs, as potentially stale data cannot be removed until the object's immutable retention expires.

Veeam Kasten limits the maximum protection period that can be selected to 90 days. A safety buffer is added to the desired protection period. This is to ensure Veeam Kasten can always find and maintain ongoing protection of any new objects written to the bucket before their retention lapses. The minimum protection period is 1 day.

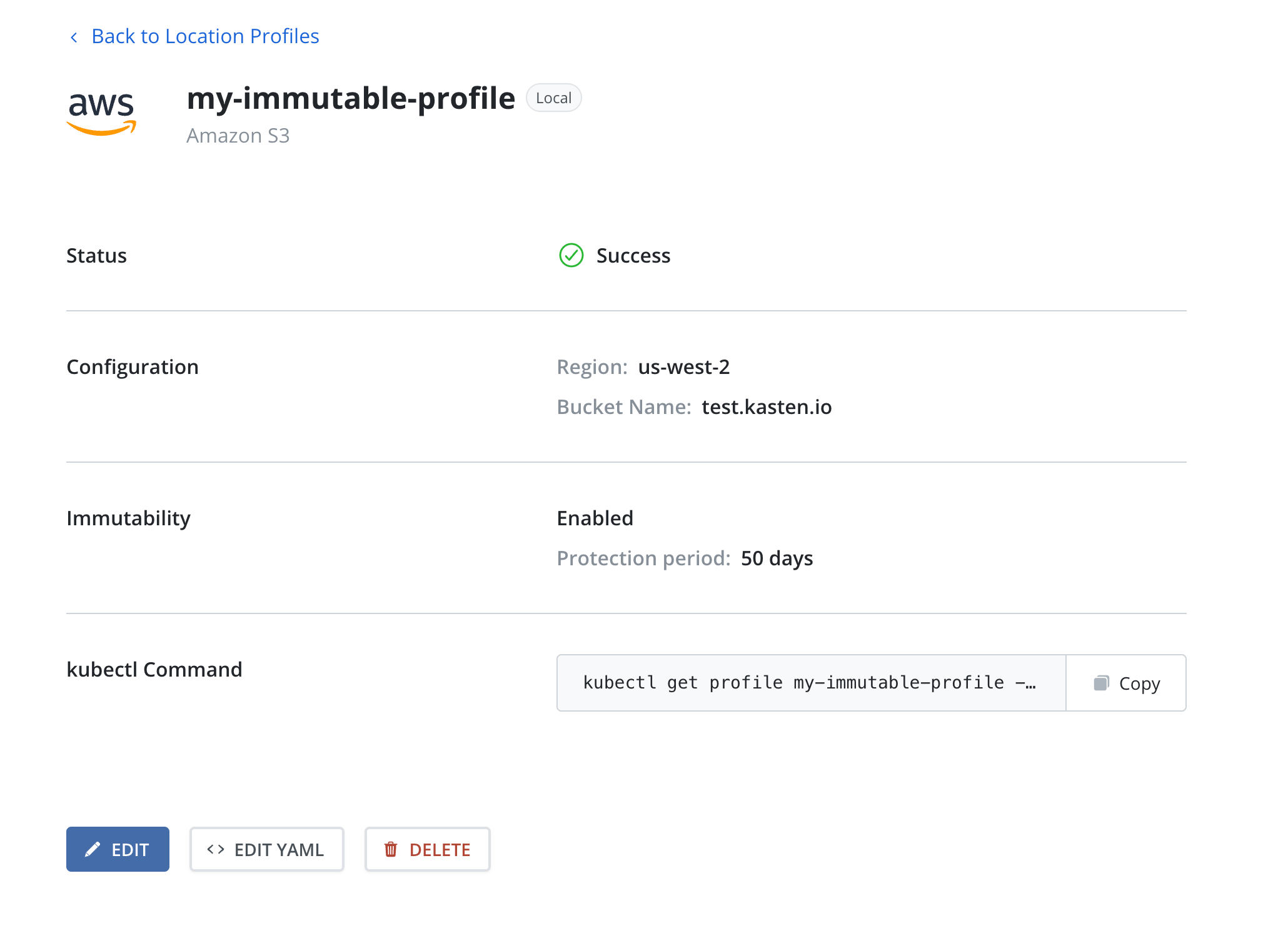

Click the "Submit" button. The profile will be submitted to Veeam Kasten and appear in the Location Profiles list. The dedicated view page will reflect the object immutability status of the referenced bucket, as well as the selected protection period.

Protecting applications with Immutable Backups

Selecting the locked bucket profile as the Export Location Profile in the Backups procedure will render all application data immutable for the duration of the protection period. Additionally, to ensure Veeam Kasten can restore that application data, Veeam Kasten should also be protected with an immutable locked-bucket Disaster Recovery (DR) profile.

In a situation where the cluster and/or object store has been corrupted, attacked, or otherwise tampered with, Veeam Kasten might be just as susceptible to being compromised as any other application. Protecting both (apps and Veeam Kasten) with immutable locked-bucket profiles will ensure the data is intact, and that Veeam Kasten knows how to restore it. Therefore, if one or more locked bucket location profiles are being used to back up and protect vital applications, it is highly recommended that a locked bucket profile should also be used with Veeam Kasten DR.

When setting up a location profile for Veeam Kasten DR, choose a protection period that is at least as long as the longest protection period in use for application backups. For example, if one application is being backed up using a profile with a 1 week protection period, and another using a 1 year protection period, the protection period for the Veeam Kasten DR backup profile should be at least 1 year to ensure the latter application can always be recovered by Veeam Kasten in the required 1-year time window.
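The rule reduces to taking the maximum over the application protection periods. The following is a minimal sketch; the profile names and period values are hypothetical and chosen only for illustration.

```python
from datetime import timedelta

# Hypothetical application profiles and their protection periods.
app_profiles = {
    "app-a-locked-bucket": timedelta(days=7),
    "app-b-locked-bucket": timedelta(days=30),
}


def minimum_dr_protection_period(periods):
    """Return the shortest DR protection period that covers every application profile."""
    periods = list(periods)
    if not periods:
        raise ValueError("at least one application protection period is required")
    return max(periods)


def dr_period_is_sufficient(dr_period, periods):
    """Return True when the DR period is at least as long as the longest application period."""
    return dr_period >= minimum_dr_protection_period(periods)


required = minimum_dr_protection_period(app_profiles.values())
print(required.days)
```

With these sample values, a DR profile with a 7-day protection period would not be sufficient, because one application profile is protected for 30 days.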

See Restoring Veeam Kasten Backup for instructions on how to restore Veeam Kasten to a point-in-time.

Azure Immutability Setup

To set up immutability in Azure, take into account the following requirements:

- Ensure that the container exists and is reachable with the credentials provided in the profile form.

- Enable versioning on the related storage account.

- Ensure support for version-level immutability on the container or related storage account.

Since Veeam Kasten ignores retention policies, it is not necessary to set one on the container. As an alternative, choose the desired protection period, and the files will be initially protected for that amount plus a safety buffer to ensure protection compliance.

Google Immutability Setup

To set up immutability in Google, take into account the following requirements:

- Ensure that the bucket exists and is reachable with the credentials provided in the profile form.

- Enable object versioning on the bucket.

- Enable object retention lock on the bucket.

- If using minimal permissions with the credentials, the storage.objects.setRetention permission is also required.

Veeam Data Cloud Vault Immutability

There are no special setup requirements to configure Veeam Vault immutability. See Setting up Immutability for Veeam Vault for more details.

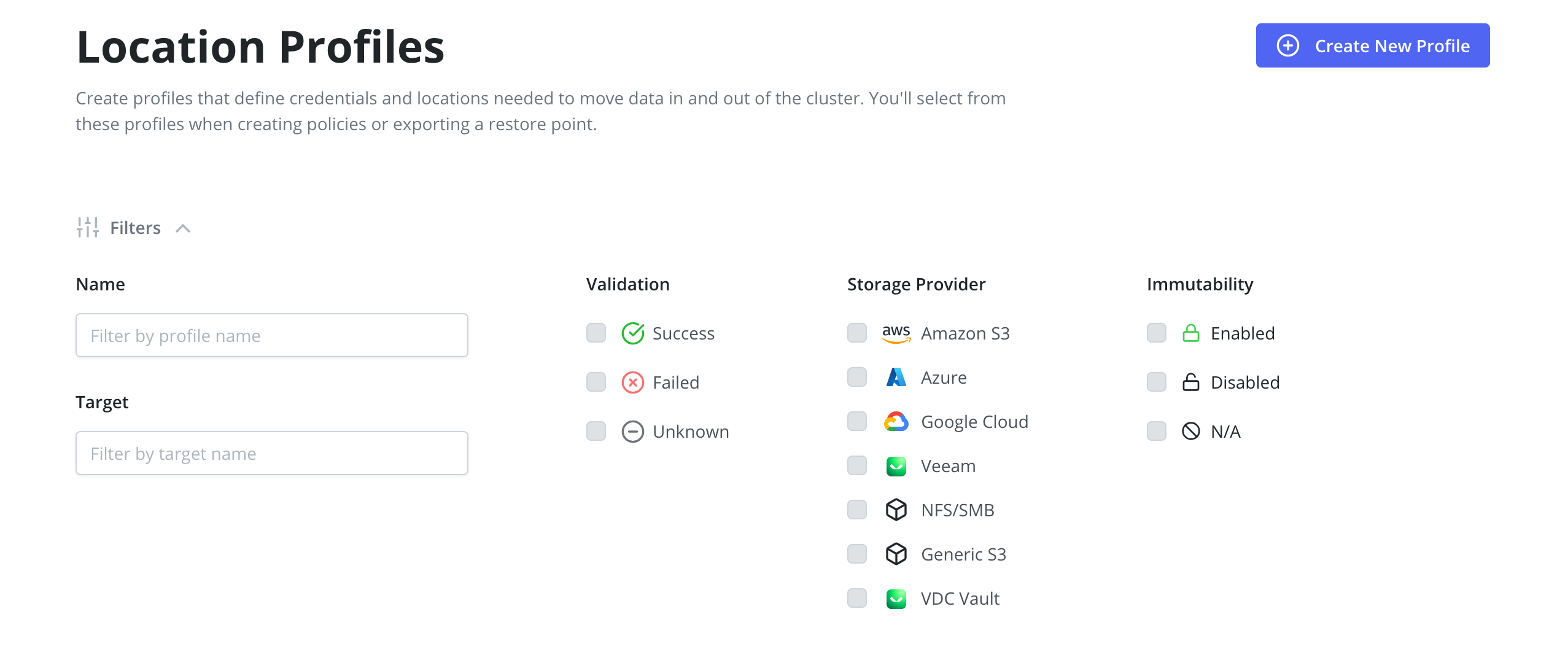

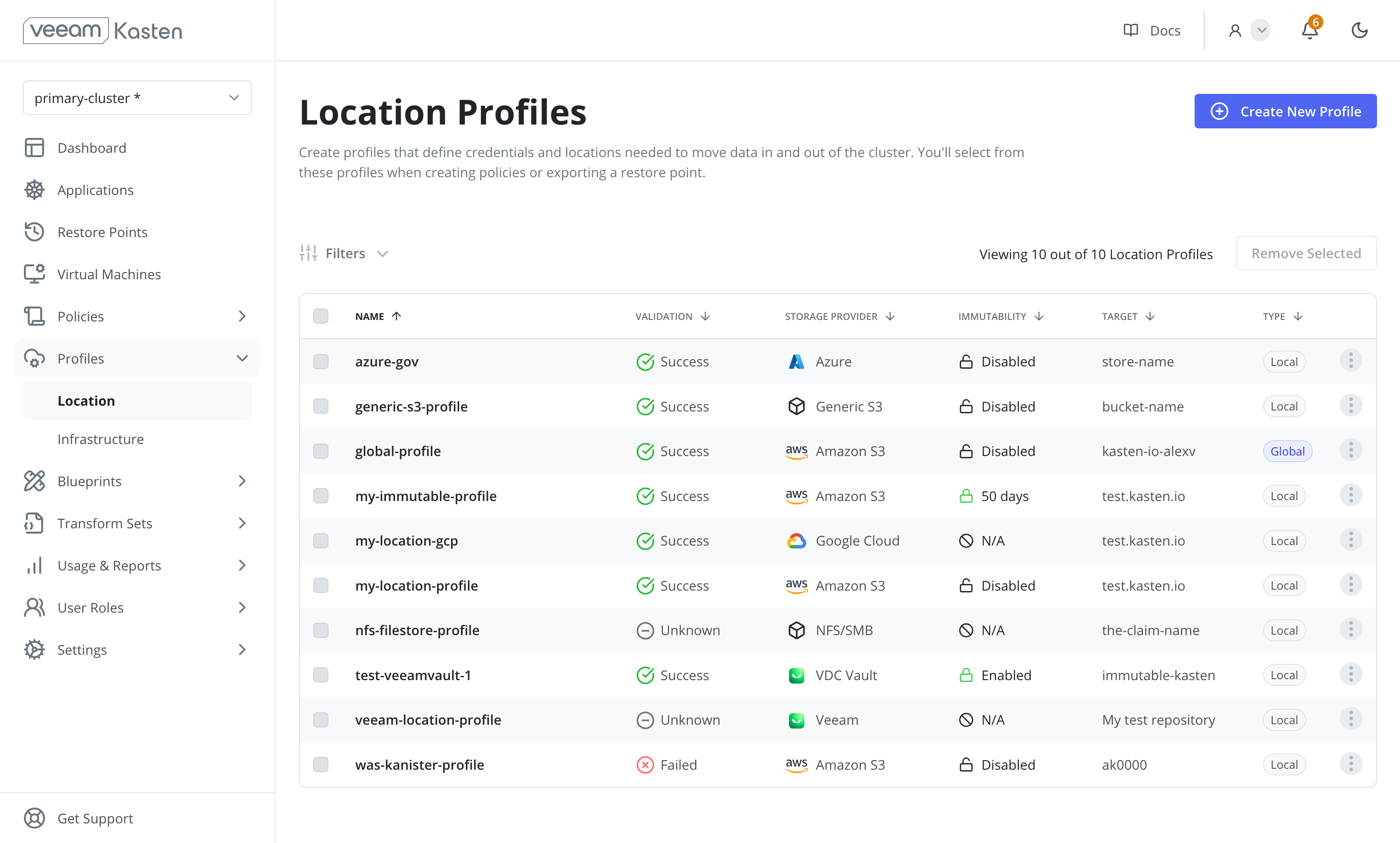

Location Profiles page

The Location Profiles page can be accessed by clicking on Location under the Profiles menu in the navigation sidebar.

Filtering

The Location Profiles page supports filtering based on the following properties:

- Name: The name assigned to the location profile.

- Target: The destination for exported snapshots: bucket name (Amazon S3, GCP), container name (Azure), or persistent volume claim name (NFS/SMB).

- Validation: The current validation status of the location profile.

- Storage Provider: The third-party storage provider associated with the profile.

- Immutability: Indicates whether immutability is enabled for the profile.