Integrating Security Information and Event Management (SIEM) Systems

Inhibiting data protection software and deleting backup data are examples of actions that may be taken by a malicious actor before proceeding to the next stage of an attack, such as file encryption. Prompt notification of such potentially malicious behavior can help mitigate the impact of an attack.

To provide activity correlation and analysis, Veeam Kasten can integrate with SIEM solutions. SIEMs ingest and aggregate data from an environment, including logs, alerts, and events, for the purpose of providing real-time threat detection and analysis, and assisting in investigations.

Because Veeam Kasten is built upon Kubernetes CRDs and API Aggregation, its events (e.g., creating a Location Profile resource) can be captured through the Kubernetes audit log and then ingested by a SIEM system. However, you may not have direct control over the Kubernetes audit policy configuration for a cluster (or the kube-apiserver), especially when using a cloud-hosted managed Kubernetes service. This limitation can reduce the detail available in the Kubernetes API server responses collected for audit events and restrict how log transmission can be customized.

For this reason, Veeam Kasten provides an extended audit mechanism to enable direct ingestion of Veeam Kasten events into a SIEM system, independently of Kubernetes cluster audit policy configurations. Furthermore, this extended mechanism allows more fine-tuned control over how to store these logs, including options like file-based and cloud-based storage.

The audit policy applied to Veeam Kasten's aggregated-apiserver is the following:

apiVersion: audit.k8s.io/v1
kind: Policy
omitStages:
- "RequestReceived"
rules:
- level: RequestResponse
  resources:
  - group: "actions.kio.kasten.io"
    resources: ["backupactions", "cancelactions", "exportactions", "importactions", "restoreactions", "retireactions", "runactions"]
  - group: "apps.kio.kasten.io"
    resources: ["applications", "clusterrestorepoints", "restorepoints", "restorepointcontents"]
  - group: "repositories.kio.kasten.io"
    resources: ["storagerepositories"]
  - group: "vault.kio.kasten.io"
    resources: ["passkeys"]
  verbs: ["create", "update", "patch", "delete", "get"]
- level: None
  nonResourceURLs:
  - /healthz*
  - /version
  - /openapi/v2*
  - /openapi/v3*
  - /timeout*
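To make the effect of this policy concrete, the following Python sketch mimics how its two rules would be evaluated for a request: the first matching rule decides the audit level, and a request that matches no rule is treated as not audited. The function names `audit_level` and `url_matches` and the data layout are illustrative assumptions, not part of Veeam Kasten or Kubernetes.

```python
# Resource rule copied from the policy above: API group -> audited resources.
AUDITED_RESOURCES = {
    "actions.kio.kasten.io": {
        "backupactions", "cancelactions", "exportactions", "importactions",
        "restoreactions", "retireactions", "runactions",
    },
    "apps.kio.kasten.io": {
        "applications", "clusterrestorepoints", "restorepoints",
        "restorepointcontents",
    },
    "repositories.kio.kasten.io": {"storagerepositories"},
    "vault.kio.kasten.io": {"passkeys"},
}
AUDITED_VERBS = {"create", "update", "patch", "delete", "get"}
# Non-resource URL patterns excluded by the second rule.
IGNORED_URLS = ["/healthz*", "/version", "/openapi/v2*", "/openapi/v3*", "/timeout*"]


def url_matches(url, pattern):
    """Match a non-resource URL; a trailing '*' acts as a prefix wildcard."""
    if pattern.endswith("*"):
        return url.startswith(pattern[:-1])
    return url == pattern


def audit_level(verb, group=None, resource=None, url=None):
    """Return the audit level for a request; the first matching rule wins."""
    # Rule 1: a listed Veeam Kasten resource accessed with a listed verb.
    if resource in AUDITED_RESOURCES.get(group, set()) and verb in AUDITED_VERBS:
        return "RequestResponse"
    # Rule 2: health, version, and OpenAPI endpoints are explicitly skipped.
    if url is not None and any(url_matches(url, p) for p in IGNORED_URLS):
        return "None"
    # No rule matched; this sketch treats the request as not audited.
    return "None"
```

Under these assumptions, deleting a RestorePoint is recorded at the RequestResponse level, while listing RestorePoints is not recorded because `list` is absent from the policy's verbs.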

This section provides documentation on configuring each of these mechanisms and includes example rules that a SIEM system can enable. Sample integrations are provided for Datadog Cloud SIEM and Microsoft Sentinel, though similar detection rules can be adapted to any SIEM platform capable of ingesting Kubernetes audit and container logs.

Detecting Veeam Kasten SIEM Scenarios

The following scenarios could be used to drive SIEM detections and alerts based on Veeam Kasten user activity:

| Resource | Action |
|---|---|
| RestorePoints | Excessive Deletion |
| RestorePointContents | Excessive Deletion |
| ClusterRestorePoints | Excessive Deletion |
| CancelAction | Excessive Create |
| RetireAction | Excessive Create |
| Passkeys | Excessive Update/Delete/Get |
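As a rough illustration of what "excessive" could mean in practice, the Python sketch below counts delete events per user inside a sliding time window and flags users who exceed a threshold. The event field names (`user`, `verb`, `resource`, `timestamp`), the five-minute window, and the threshold of three are assumptions chosen for the example; actual Veeam Kasten audit records and SIEM rule syntax will differ.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Resources from the table above whose deletion is worth watching.
WATCHED = {"restorepoints", "restorepointcontents", "clusterrestorepoints"}


def excessive_deleters(events, threshold=3, window=timedelta(minutes=5)):
    """Return users who issued more than `threshold` deletes within `window`."""
    recent = defaultdict(deque)  # user -> timestamps of recent deletes
    flagged = set()
    for event in sorted(events, key=lambda e: e["timestamp"]):
        if event["verb"] != "delete" or event["resource"] not in WATCHED:
            continue
        stamps = recent[event["user"]]
        stamps.append(event["timestamp"])
        # Drop deletes that have fallen out of the sliding window.
        while stamps[0] < event["timestamp"] - window:
            stamps.popleft()
        if len(stamps) > threshold:
            flagged.add(event["user"])
    return flagged


# Invented sample data: four deletes by one user within 30 seconds.
start = datetime(2024, 1, 1, 12, 0)
burst = [
    {"user": "mallory", "verb": "delete", "resource": "restorepoints",
     "timestamp": start + timedelta(seconds=10 * i)}
    for i in range(4)
]
print(excessive_deleters(burst))  # prints {'mallory'}
```

A production rule would normally also exclude Veeam Kasten's own service accounts, since retention-driven deletions are expected.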

Enabling Agent-based Veeam Kasten Event Capture

By default, Veeam Kasten is deployed to write these new audit event logs to stdout (standard output) from the aggregatedapis-svc pod. These logs can be ingested using an agent installed in the cluster. Examples for Datadog Cloud SIEM and Microsoft Sentinel are provided below.

To disable this behavior, configure the Veeam Kasten deployment with:

--set siem.logging.cluster.enabled=false

Enabling Agent-less Veeam Kasten Event Capture

Many SIEM solutions support ingestion of stdout log data from Kubernetes applications using an agent deployed to the cluster. If an agent-based approach is not available or not preferred, Veeam Kasten offers the option to send these audit events to a Location Profile. SIEM-specific tools can then be used to ingest the log data from the object store.

Currently, only AWS S3 Location Profiles are supported as a target for Veeam Kasten audit events.

By default, Veeam Kasten is deployed with the ability to send these new audit event logs to available cloud object stores. However, having this capability enabled is only the first step: logs are sent only once an applicable K10 AuditConfig that points to a valid Location Profile has been created or updated. An example for Datadog is shown below.

To disable the sending of these logs to AWS S3, configure the Veeam Kasten deployment with the following option:

--set siem.logging.cloud.awsS3.enabled=false

To begin, you should first determine the name of your target Location Profile.

Next, define and apply an AuditConfig manifest to your Veeam Kasten namespace. In the example below, make sure to replace the target values for spec.profile.name and spec.profile.namespace before applying.

If the spec.profile.namespace is left blank, the default value will be the namespace of the AuditConfig.

## Create AuditConfig manifest with Location Profile name/namespace specific
## to your environment.
$ cat > k10-auditconfig.yaml <<EOF
apiVersion: config.kio.kasten.io/v1alpha1
kind: AuditConfig
metadata:
  name: k10-auditconfig
  namespace: kasten-io
spec:
  profile:
    name: <LOCATION PROFILE NAME>
    namespace: <LOCATION PROFILE NAMESPACE>
EOF

## Apply AuditConfig manifest
$ kubectl apply -f k10-auditconfig.yaml -n kasten-io
config.kio.kasten.io/k10-auditconfig created
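The defaulting rule for spec.profile.namespace can be sketched in Python as follows. The helpers `build_audit_config` and `effective_profile_namespace` are hypothetical and only assemble the manifest fields shown above; the profile name `my-s3-profile` is a placeholder, and the fallback logic is an illustration of the documented default rather than Veeam Kasten's actual implementation.

```python
import json


def build_audit_config(profile_name, profile_namespace="", namespace="kasten-io"):
    """Assemble an AuditConfig manifest as a dict (hypothetical helper)."""
    profile = {"name": profile_name}
    if profile_namespace:
        # Set the namespace only when the caller supplies one explicitly.
        profile["namespace"] = profile_namespace
    return {
        "apiVersion": "config.kio.kasten.io/v1alpha1",
        "kind": "AuditConfig",
        "metadata": {"name": "k10-auditconfig", "namespace": namespace},
        "spec": {"profile": profile},
    }


def effective_profile_namespace(manifest):
    """Mirror the documented default: fall back to the AuditConfig's namespace."""
    profile = manifest["spec"]["profile"]
    return profile.get("namespace") or manifest["metadata"]["namespace"]


manifest = build_audit_config("my-s3-profile")
# kubectl apply -f accepts JSON as well as YAML manifests.
print(json.dumps(manifest, indent=2))
```

With no profile namespace given, `effective_profile_namespace(manifest)` resolves to the AuditConfig's own namespace, `kasten-io` in this example.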

Veeam Kasten event logs will now be sent to the target Location Profile bucket under the k10audit/ directory. If you wish to change the destination path of the logs within the bucket, configure the Veeam Kasten deployment with:

--set siem.logging.cloud.path=<DIRECTORY PATH WITHIN BUCKET>

Datadog Cloud SIEM

Veeam Kasten integrates with Datadog Cloud SIEM to provide high-fidelity signal data that can be used to detect suspicious activity and support security operators.

Configuring Ingest

Review each of the sections below to understand how Veeam Kasten event data can be sent to Datadog. Both methods can be configured per cluster.

Setting up the Datadog Agent on a Kubernetes Cluster

The Datadog Agent can be installed on the Kubernetes cluster and used to collect application logs, metrics, and traces.

Refer to Datadog Kubernetes documentation for complete instructions on installing the Agent on the cluster.

For Datadog to ingest Veeam Kasten event logs, the Agent must be configured with log collection enabled and an include_at_match global processing rule that matches the Veeam Kasten-specific pattern: (?i).*K10Event.*

Here is an example of a values.yaml file for installing the Datadog Agent using Helm:

datadog:
  apiKey: <YOUR DD API KEY>
  appKey: <YOUR DD APP KEY>
  site: datadoghq.com
  logs:
    enabled: true
    containerCollectAll: true
  env:
    - name: DD_LOGS_CONFIG_PROCESSING_RULES
      value: '[{"type": "include_at_match", "name": "include_k10", "pattern" : "(?i).*K10Event.*"}]'
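To see what the include_at_match rule keeps, the Python sketch below applies the same pattern to a few log lines. The sample lines are invented for illustration and do not reflect the actual Veeam Kasten log format, and the Datadog Agent uses its own regex engine, so this only approximates the matching behavior.

```python
import re

# Same pattern as in the processing rule above; (?i) makes it case-insensitive.
K10_EVENT = re.compile(r"(?i).*K10Event.*")


def keep_line(line):
    """Return True if the line would pass an include_at_match style filter."""
    return K10_EVENT.search(line) is not None


# Invented sample lines, for illustration only.
samples = [
    '{"msg": "K10Event", "verb": "delete"}',
    '{"msg": "k10event", "verb": "get"}',
    '{"msg": "health check ok"}',
]
kept = [line for line in samples if keep_line(line)]
print(len(kept))  # prints 2
```

Because the rule is an inclusion filter, any container log line that does not contain the K10Event marker is dropped before it is sent to Datadog.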

Refer to the Datadog processing rules documentation for instructions on alternative methods for configuring processing rules.

Setting up the Datadog Forwarder with AWS

The Datadog Forwarder is an AWS Lambda function used to ingest Veeam Kasten event logs sent to an AWS S3 bucket.

Refer to the Datadog CloudFormation documentation to install the Forwarder in the same AWS region as the target S3 bucket.

After deploying the Forwarder, follow the Datadog S3 trigger documentation to add an S3 Trigger using the settings below:

| Field | Value |
|---|---|
| Bucket | <TARGET S3 BUCKET> |
| Event type | Object Created (All) |
| Prefix | <TARGET S3 BUCKET PREFIX> (defaults to k10audit/) |
| Suffix | <BLANK> |
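The prefix and suffix settings decide which uploaded objects invoke the Forwarder. A minimal Python sketch of that filter follows, assuming the default k10audit/ prefix and a blank suffix; the function name `triggers_forwarder` and the object keys are made up for the example.

```python
def triggers_forwarder(key, prefix="k10audit/", suffix=""):
    """Return True if an S3 object key passes the prefix/suffix filter."""
    # An empty suffix matches every key, so only the prefix restricts here.
    return key.startswith(prefix) and key.endswith(suffix)


print(triggers_forwarder("k10audit/events-0001.log"))  # prints True
print(triggers_forwarder("backups/data.bin"))          # prints False
```

If the destination path is changed with siem.logging.cloud.path, the trigger prefix needs to be updated to match, otherwise new audit objects will not reach the Forwarder.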

Adding Detection Rules

Detection Rules define how Datadog analyzes ingested data and when to generate a signal. Using these rules, Veeam Kasten event data can be used to alert organizations to specific activity that could indicate an ongoing security breach. This section provides the details required to add example Veeam Kasten rules to Datadog Cloud SIEM.

Open the Datadog Cloud SIEM user interface and select Detection Rules from the toolbar.

At the top right corner of the page, click the New Rule button.

Complete the form using the details below for each rule.

Each rule should be configured to notify the appropriate services and/or users. Since the specific configurations are unique to each environment, they are not covered in the examples provided below.

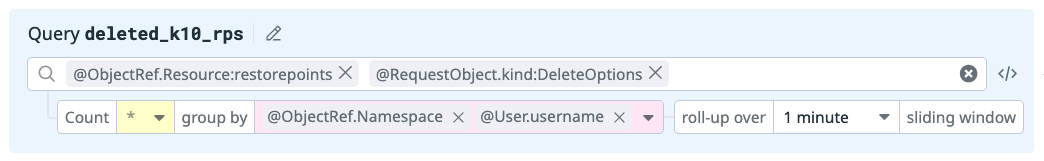

Veeam Kasten RestorePoints Manually Deleted

The purpose of this rule is to detect deletions of Veeam Kasten RestorePoint resources initiated by a user. Typically, the removal of this type of resource would be the result of backup data no longer being needed based on a policy's retention schedule and performed directly by Veeam Kasten.

Removal of a Kubernetes namespace containing RestorePoints may also trigger this signal.

| Rule Name | Kasten RestorePoints Manually Deleted |
|---|---|
| Rule Type | Log Detection |
| Detection Method | Threshold |
| Query |  |
| Trigger | deleted_k10_rps > 0 |
| Severity | Low |
| Tags | tactic:TA0040-impact |

Use the following notification body to provide an informative alert:

### Goal

Detect when Kasten RestorePoints (Kubernetes application backups) are being manually deleted by a user. This could be an indication that the environment has been compromised and Kasten backups are being deleted to prevent system recovery following an attack.

### Strategy

Monitor Kubernetes Audit logs to detect when a single user or non-Veeam Kasten service account deletes RestorePoint resources beyond the defined threshold.

### Triage and response

1. Determine if the user `{{@User.username}}` and IP address(es) `{{@network.ip.list}}` should be retiring backup data for `{{@ObjectRef.Namespace}}`. NOTE: This rule may also be triggered by the legitimate deletion of the `{{@ObjectRef.Namespace}}` namespace to which the RestorePoint resources belong.

2. If the action is legitimate, consider including the user in a suppression list. See [Best practices for creating detection rules with Datadog Cloud SIEM][1] for more information.

3. Otherwise, use the Cloud SIEM - User Investigation dashboard to see if the user `{{@User.username}}` has taken other actions.

4. If the results of the triage indicate that an attacker has taken the action, begin your company's incident response process and investigation.

[1]: https://www.datadoghq.com/blog/writing-datadog-security-detection-rules/#fine-tune-security-signals-to-reduce-noise

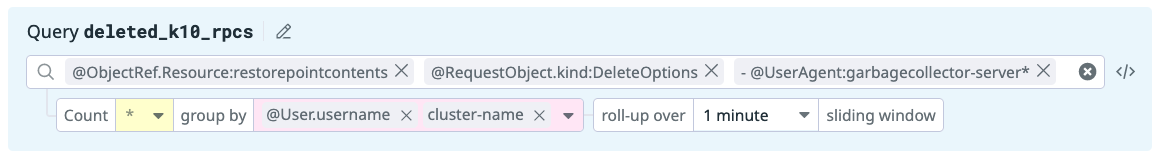

Kasten RestorePointContents Manually Deleted

The purpose of this rule is to detect deletions of Veeam Kasten RestorePointContent resources initiated by a user. The removal of this type of resource should only be the result of backup data no longer being needed based on a policy's retention schedule and performed directly by Veeam Kasten.

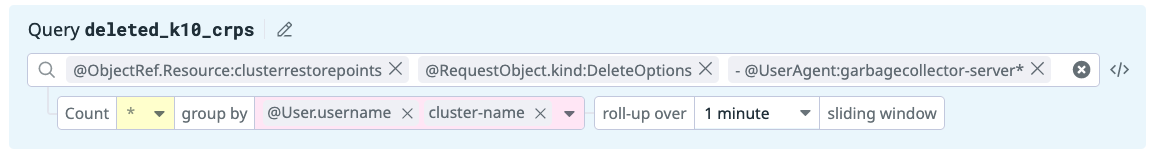

| Rule Name | Kasten RestorePointContents Manually Deleted |
|---|---|
| Rule Type | Log Detection |
| Detection Method | Threshold |
| Query |  |
| Trigger | deleted_k10_crps > 0 |
| Severity | High/Critical |
| Tags | tactic:TA0040-impact, technique:T1490-inhibit-system-recovery |

Use the following notification body to provide an informative alert:

### Goal

Detect when Kasten RestorePointContents (Kubernetes application backups) are being manually deleted by a user. This could be an indication that the environment has been compromised and Kasten backups are being deleted to prevent system recovery following an attack.

### Strategy

Monitor Kubernetes Audit logs to detect when a single user or non-Veeam Kasten service account deletes RestorePointContent resources beyond the defined threshold.

### Triage and response

1. Determine if the user `{{@User.username}}` and IP address(es) `{{@network.ip.list}}` should be retiring backup data on cluster `{{cluster-name}}`.

2. If the action is legitimate, consider including the user in a suppression list. See [Best practices for creating detection rules with Datadog Cloud SIEM][1] for more information.

3. Otherwise, use the Cloud SIEM - User Investigation dashboard to see if the user `{{@User.username}}` has taken other actions.

4. If the results of the triage indicate that an attacker has taken the action, begin your company's incident response process and investigation.

5. If restoring applications from deleted RestorePointContents (RPCs) is required on this cluster, see [Recovering Veeam Kasten From a Disaster][2] for more information.

[1]: https://www.datadoghq.com/blog/writing-datadog-security-detection-rules/#fine-tune-security-signals-to-reduce-noise

[2]: https://docs.kasten.io/latest/operating/dr.html#recovering-k10-from-a-disaster

Use of the cluster-name tag in both the query and notification body requires capturing Veeam Kasten event logs via the Datadog Agent.

Veeam Kasten ClusterRestorePoints Manually Deleted

The purpose of this rule is to detect deletions of Veeam Kasten ClusterRestorePoint resources initiated by a user. The removal of this type of resource should only be the result of backup data no longer being needed based on a policy's retention schedule and performed directly by Veeam Kasten.

| Rule Name | Kasten ClusterRestorePoints Manually Deleted |
|---|---|
| Rule Type | Log Detection |
| Detection Method | Threshold |
| Query |  |
| Trigger | deleted_k10_crps > 0 |
| Severity | High/Critical |
| Tags | tactic:TA0040-impact, technique:T1490-inhibit-system-recovery |

Use the following notification body to provide an informative alert:

### Goal

Detect when Kasten ClusterRestorePoints (Kubernetes backups of cluster-scoped resources) are being manually deleted by a user. This could be an indication that the environment has been compromised and Kasten backups are being deleted to prevent system recovery following an attack.

### Strategy

Monitor Kubernetes Audit logs to detect when a single user or non-Veeam Kasten service account deletes ClusterRestorePoint resources beyond the defined threshold.

### Triage and response

1. Determine if the user `{{@User.username}}` and IP address(es) `{{@network.ip.list}}` should be retiring backup data from cluster `{{cluster-name}}`.

2. If the action is legitimate, consider including the user in a suppression list. See [Best practices for creating detection rules with Datadog Cloud SIEM][1] for more information.

3. Otherwise, use the Cloud SIEM - User Investigation dashboard to see if the user `{{@User.username}}` has taken other actions.

4. If the results of the triage indicate that an attacker has taken the action, begin your company's incident response process and investigation.

5. If restoring applications from deleted RPCs is required on this cluster, see [Recovering Veeam Kasten From a Disaster][2] for more information.

[1]: https://www.datadoghq.com/blog/writing-datadog-security-detection-rules/#fine-tune-security-signals-to-reduce-noise

[2]: https://docs.kasten.io/latest/operating/dr.html#recovering-k10-from-a-disaster

Use of the cluster-name tag in both the query and notification body requires capturing Veeam Kasten event logs via the Datadog Agent.

Microsoft Sentinel

Veeam Kasten integrates with Microsoft Sentinel to provide high-fidelity signal data that can be used to detect suspicious activity and support security operators.

Configuring Ingest

The Azure Monitor agent can be installed on Azure Kubernetes Service (AKS) and Azure Arc-managed Kubernetes clusters for collecting logs and metrics.

Refer to the Azure Monitor documentation for instructions on enabling Container Insights. Container Insights must be configured to send container logs to the Log Analytics workspace associated with Sentinel.

To minimize the cost associated with log collection, individual namespaces may be excluded from Azure Monitor using a ConfigMap as documented here.

Importing Analytics Rules

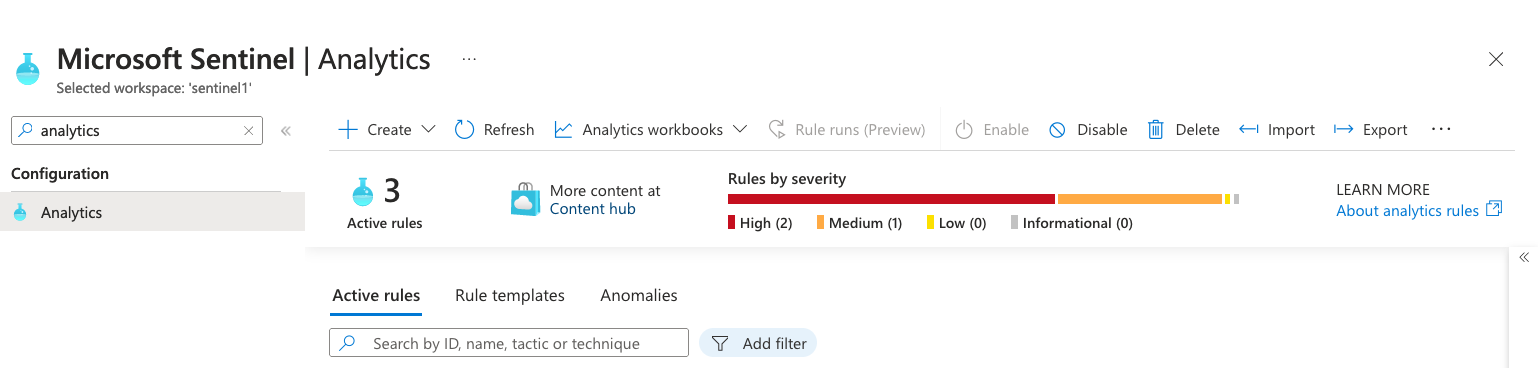

Analytics Rules define how Sentinel analyzes ingested data and when to generate an alert. Using these rules, Veeam Kasten event data can be used to alert organizations to specific activity that could indicate an ongoing security breach. This section provides the details required to add example Veeam Kasten rules to Sentinel.

Download the provided rules: kasten_sentinel_rules.json

- Open a Sentinel instance from the Azure Portal user interface.

- Select Analytics from the sidebar.

- Select Import from the toolbar.

- Choose the previously downloaded file named kasten_sentinel_rules.json to import the rules.

Kasten RestorePoint Resources Manually Deleted

The purpose of this rule is to detect deletions of Veeam Kasten RestorePoint, RestorePointContents, ClusterRestorePoint, and ClusterRestorePointContents resources initiated by a user. The removal of these resource types should only occur as a result of backup data no longer being needed based on a policy's retention schedule and performed directly by Veeam Kasten.

Each rule should be configured to notify the appropriate services and/or users. Since each environment has its own configurations, these are not covered in the examples provided below. See the Sentinel documentation for details on creating automation rules to manage notifications and responses.