Protecting Applications

Protecting an application with K10, usually accomplished by creating a policy, requires the understanding and use of three concepts:

Snapshots and Backups: Depending on your environment and requirement, you might need just one or both of these data capture mechanisms

Scheduling: Specification of application capture frequency and snapshot/backup retention objectives

Selection: This defines not just which applications are protected by a policy but, whenever finer-grained control is needed, resource filtering can be used to restrict what is captured on a per-application basis

This section demonstrates how to use these concepts in the context of a K10 policy to protect applications. Today, an application for K10 is defined as a collection of namespaced Kubernetes resources (e.g., ConfigMaps, Secrets), relevant non-namespaced resources used by the application (e.g., StorageClasses), Kubernetes workloads (i.e., Deployments, StatefulSets, OpenShift DeploymentConfigs, and standalone Pods), deployment and release information available from Helm v3, and all persistent storage resources (e.g., PersistentVolumeClaims and PersistentVolumes) associated with the workloads.

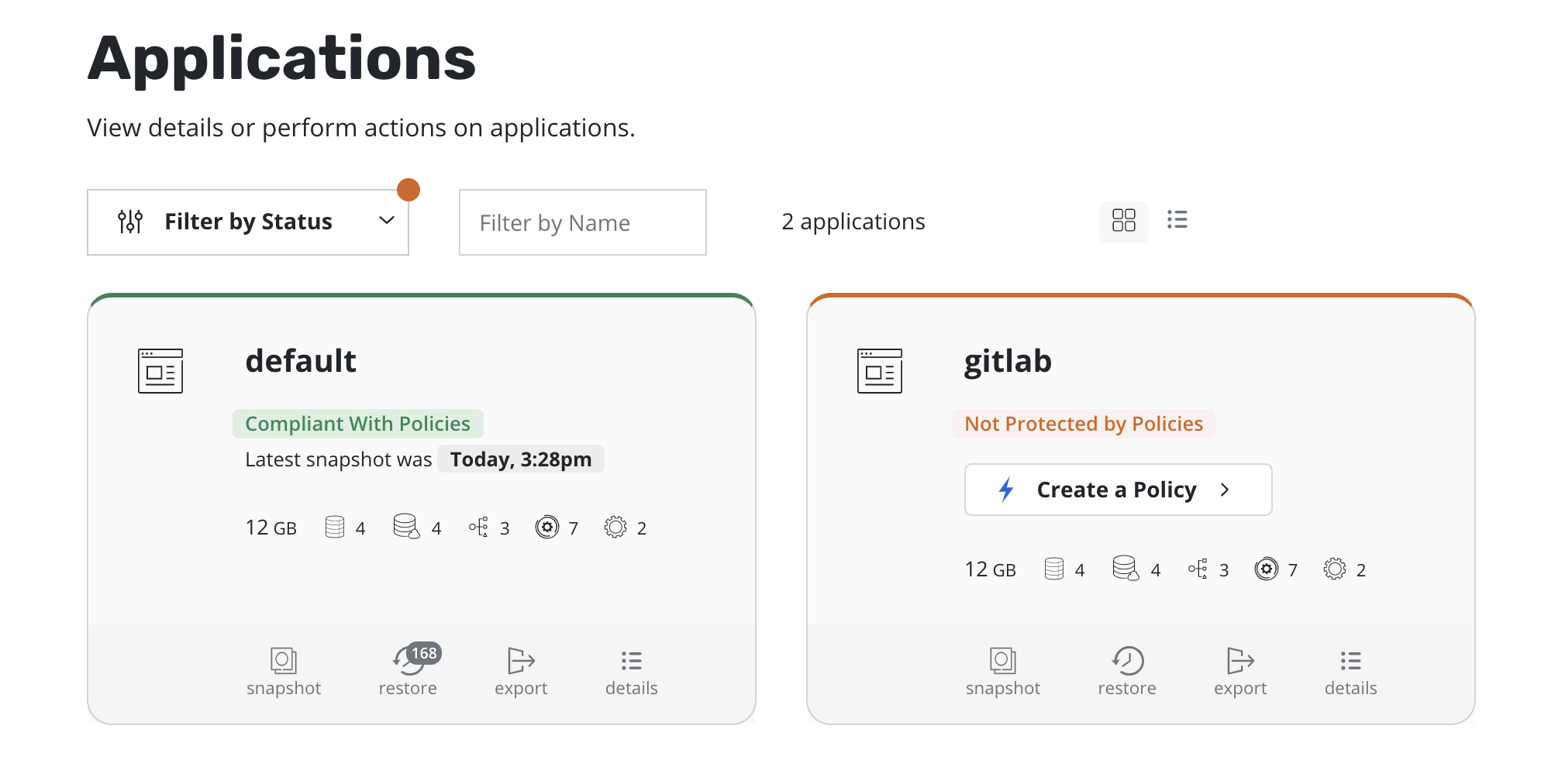

While you can always create a policy from scratch from the policies page, the easiest way to define policies for unprotected applications is to click on the Applications card on the main dashboard. This will take you to a page where you can see all applications in your Kubernetes cluster.

To protect any unmanaged application, simply click Create a policy

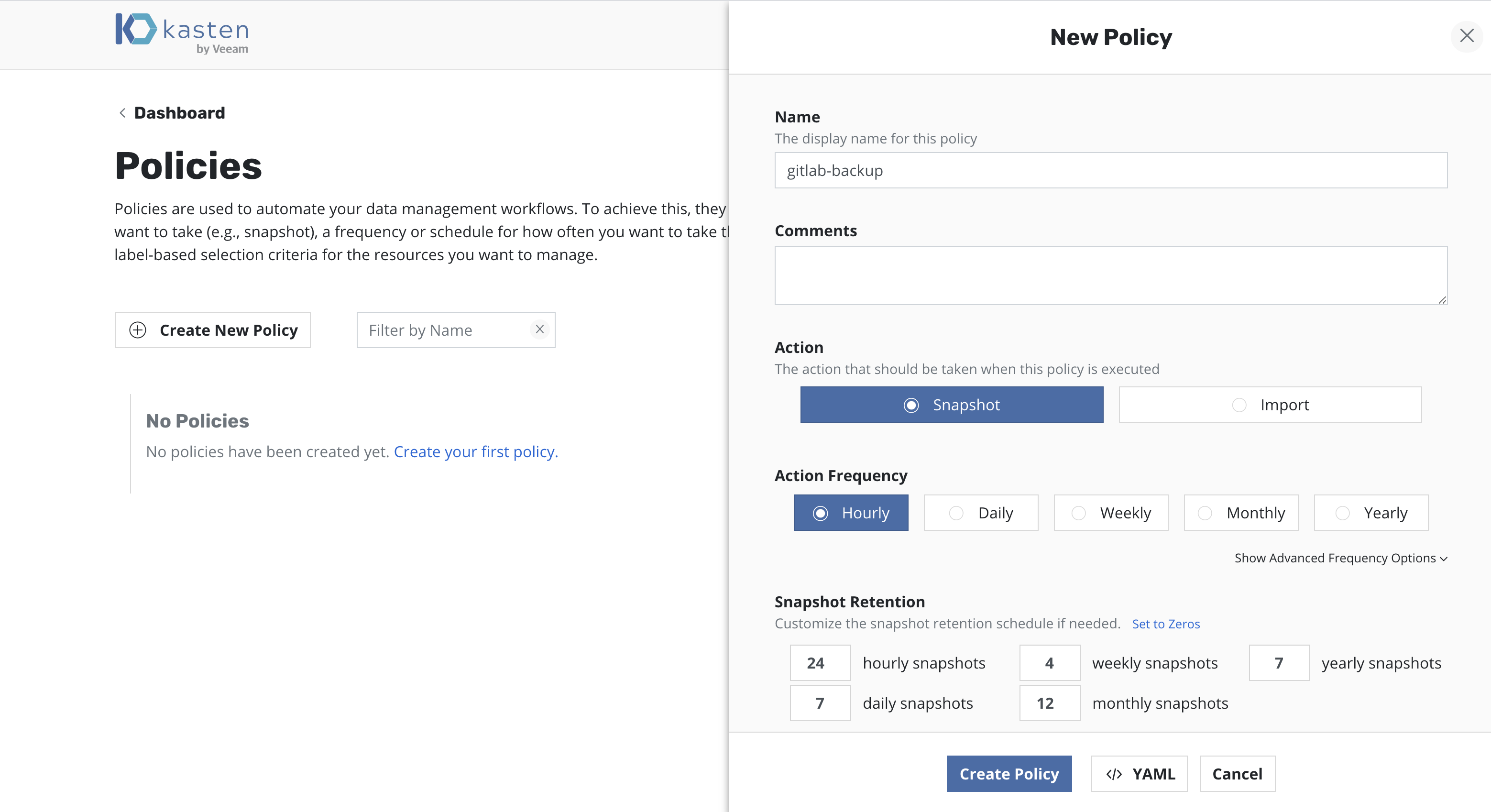

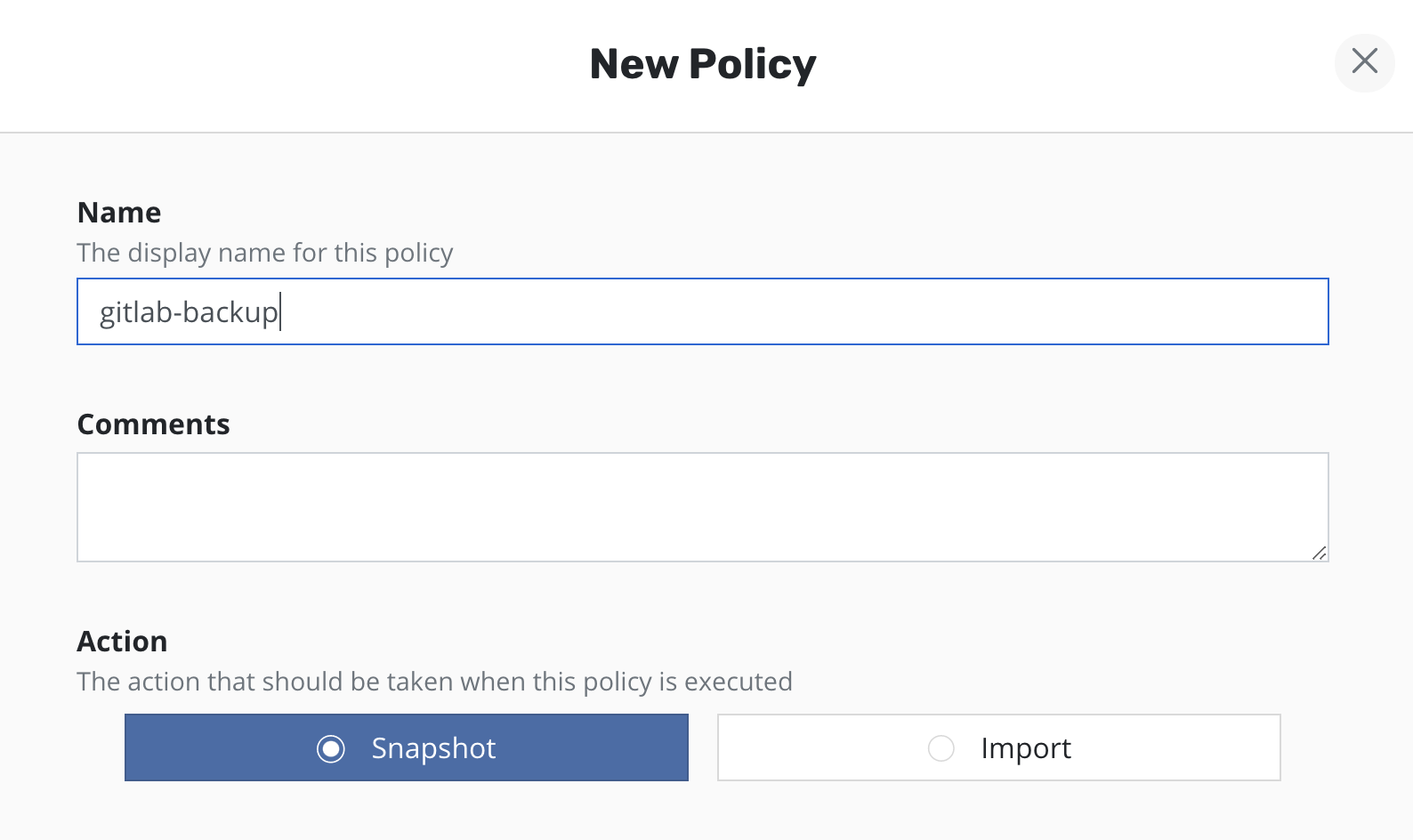

and, as shown below, that will take you to the policy creation section

with an auto-populated policy name that you can change. The concepts

highlighted above will be described in the below sections in the

context of the policy creation workflow.

Snapshots and Backups

All policies center around the execution of actions and, for protecting applications, you start by selecting the snapshot action with an optional backup (currently called export) option to that action.

Snapshots

Note

A number of public cloud providers (e.g., AWS, Azure, Google Cloud) actually store snapshots in object storage and they are retained independent of the lifecycle of the primary volume. However, this is not true of all public clouds (e.g., IBM Cloud) and you might also need to enable backups in public clouds for safety. Please check with your cloud provider's documentation for more information.

Snapshots are the basis of persistent data capture in K10. They are usually used in the context of disk volumes (PVC/PVs) used by the application but can also apply to application-level data capture (e.g., with Kanister).

Snapshots, in most storage systems, are very efficient in terms of having a very low performance impact on the primary workload, requiring no downtime, supporting fast restore times, and implementing incremental data capture.

However, storage snapshots usually also suffer from constraints such as having relatively low limits on the maximum number of snapshots per volume or per storage array. Most importantly, snapshots are not always durable. First, catastrophic storage system failure will destroy your snapshots along with your primary data. Further, in a number of storage systems, a snapshot's lifecycle is tied to the source volume. So, if the volume is deleted, all related snapshots might automatically be garbage collected at the same time. It is therefore highly recommended that you create backups of your application snapshots too.

Backups

Note

In most cases, when application-level capture mechanisms (e.g., logical database dumps via Kanister) are used, these artifacts are directly sent to an object store or an NFS file store. Backups should not be needed in those scenarios unless a mix of application and volume-level data is being captured or in case of a more specific use case.

Given the limitations of snapshots, it is often advisable to set up backups of your application stack. However, even if your snapshots are durable, backups might still be useful in a variety of use cases including lowering costs with K10's data deduplication or backing your snapshots up in a different infrastructure provider for cross-cloud resiliency.

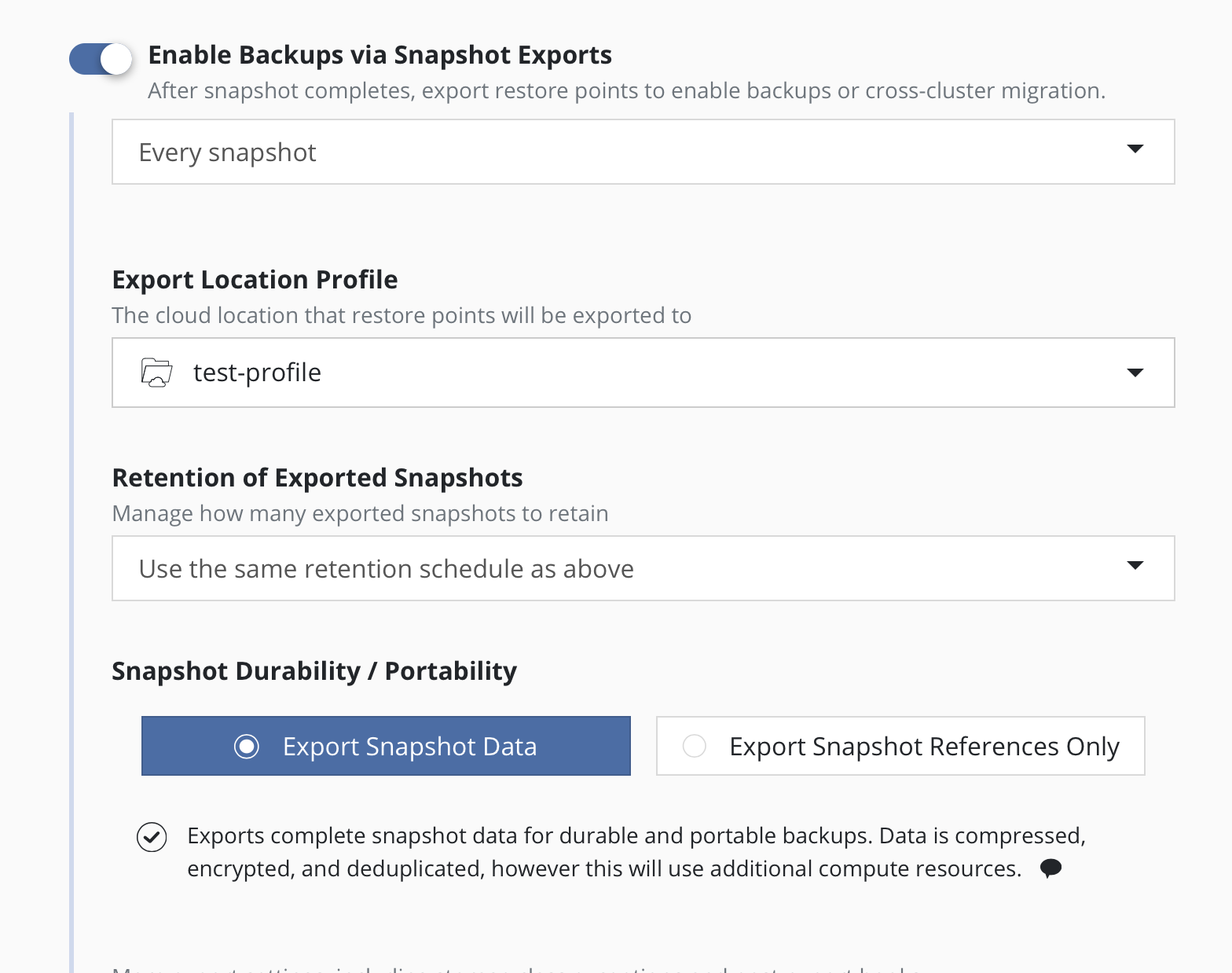

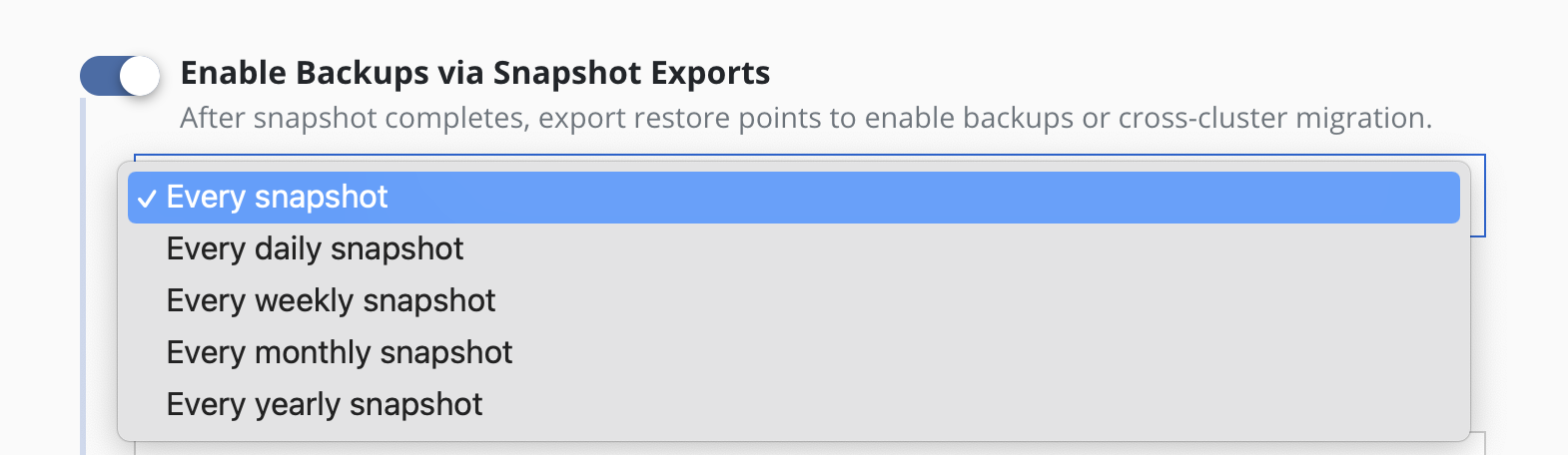

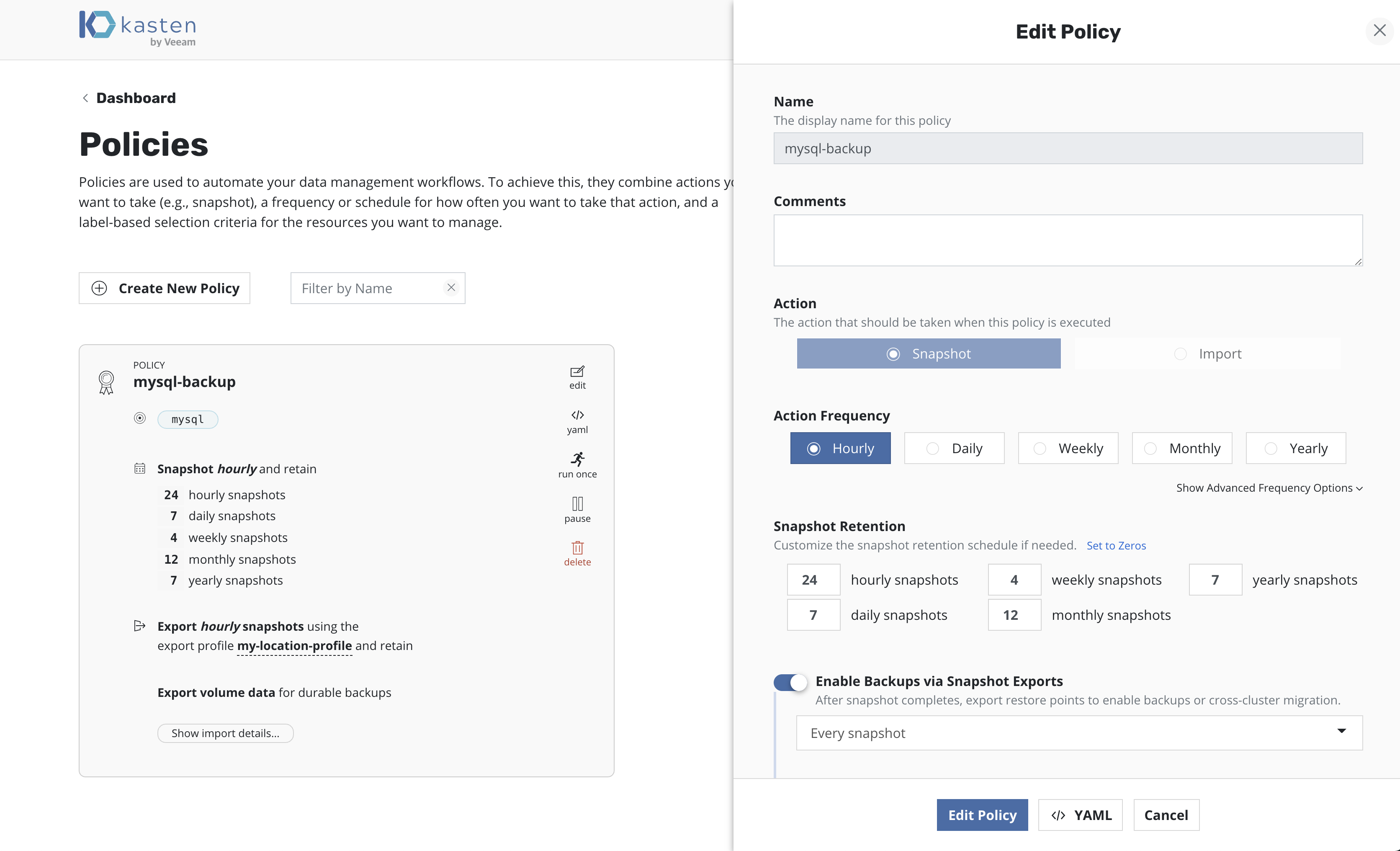

Backup operations convert application and volume snapshots into

backups by transforming them into an infrastructure-independent format

and then deduplicating, compressing, and encrypting them before storing

them in an object store or an NFS file store. To convert your snapshots into

backups, select the profile to indicate where the backups should be stored and

Enable Backups via Snapshot Exports during policy creation.

Additional settings for portability and only exporting snapshot

references will also be visible here. They are primarily used

for migrating applications across clusters and more information on them

can be found in the Exporting Applications

section. These settings are available when creating a policy, and when

manually exporting a restore point.

Scheduling

There are four components to scheduling:

How frequently the primary snapshot action should be performed

How often snapshots should be exported into backups

Retention schedule of snapshots and backups

When the primary snapshot action should be performed

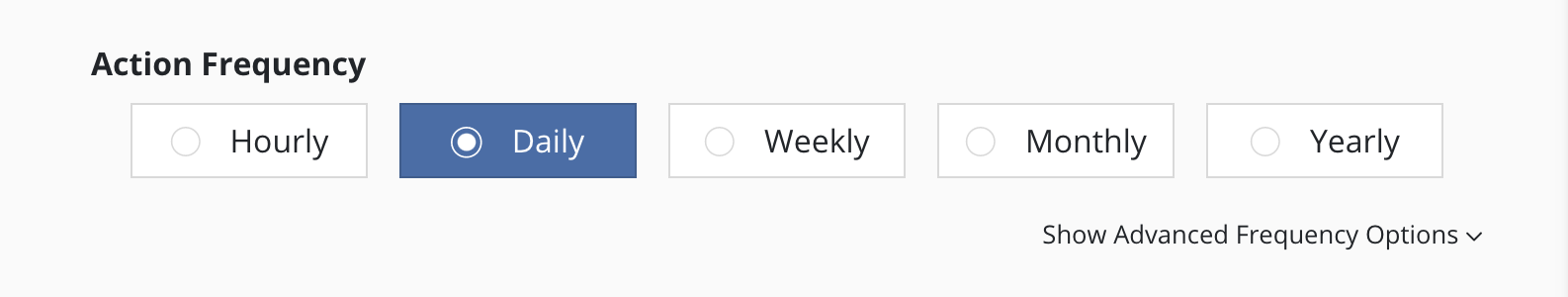

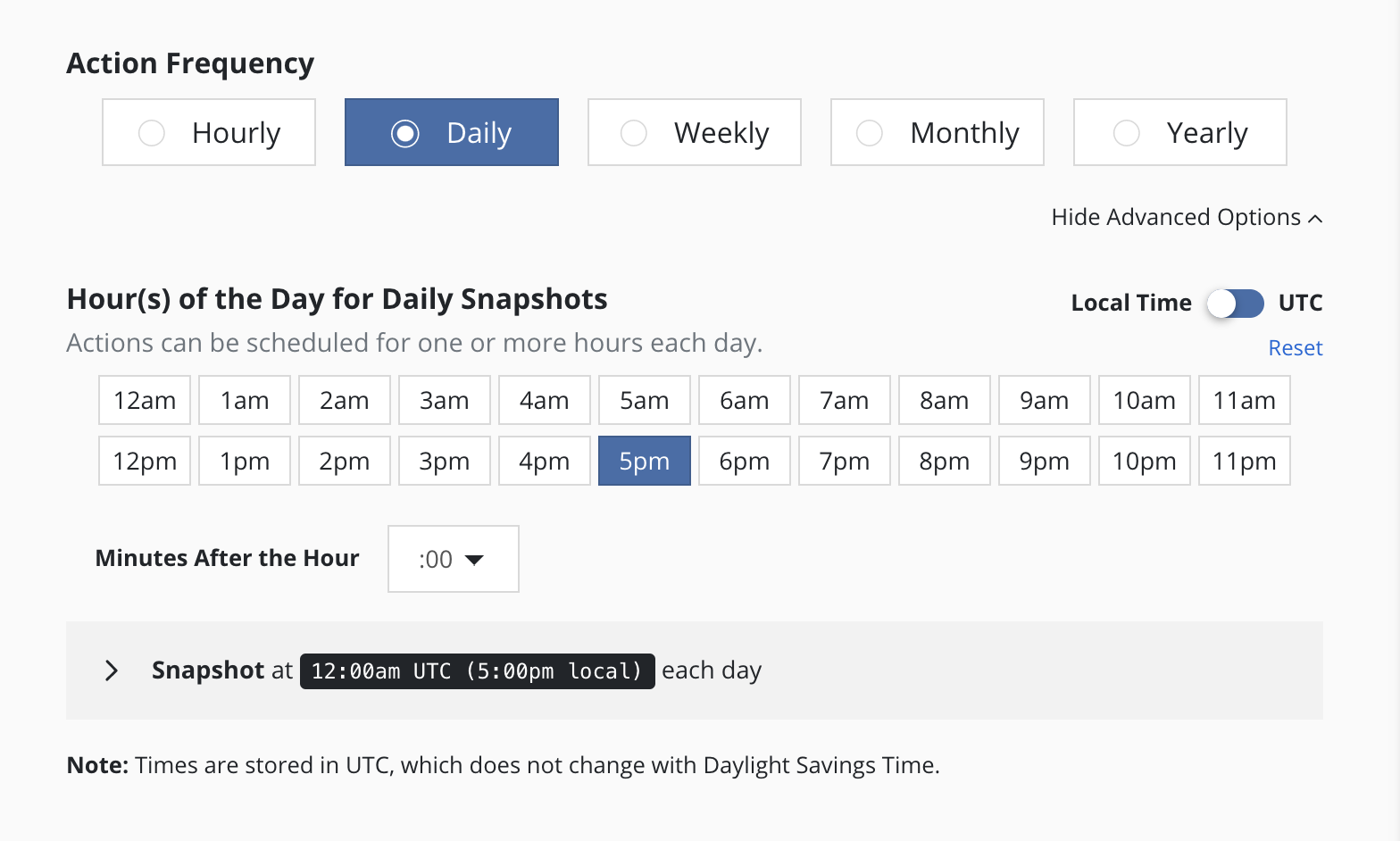

Action Frequency

Actions can be set to execute at an hourly, daily, weekly, monthly, or yearly granularity. By default, actions set to hourly will execute at the top of the hour and other actions will execute at midnight UTC.

It is also possible to select the time at which actions will execute and sub-frequencies that execute multiple actions per frequency. See Advanced Schedule Options below.

Sub-hourly actions are useful when you are protecting mostly Kubernetes objects or small data sets. Care should be taken with more general-purpose workloads because of the risk of stressing underlying storage infrastructure or running into storage API rate limits. Further, sub-frequencies will also interact with retention (described below). For example, retaining 24 hourly snapshots at 15-minute intervals would only retain 6 hours of snapshots.

Snapshot Exports to Backups

Backups performed via exports, by default, will be set up to export every snapshot into a backup. However, it is also possible to select a subset of snapshots for exports (e.g., only convert every daily snapshot into a backup).

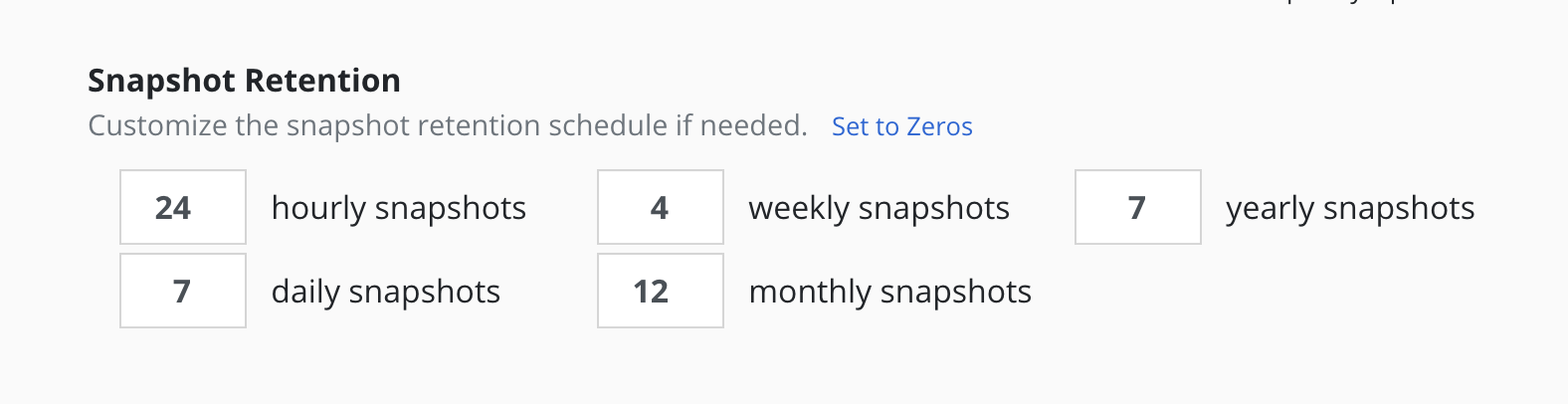

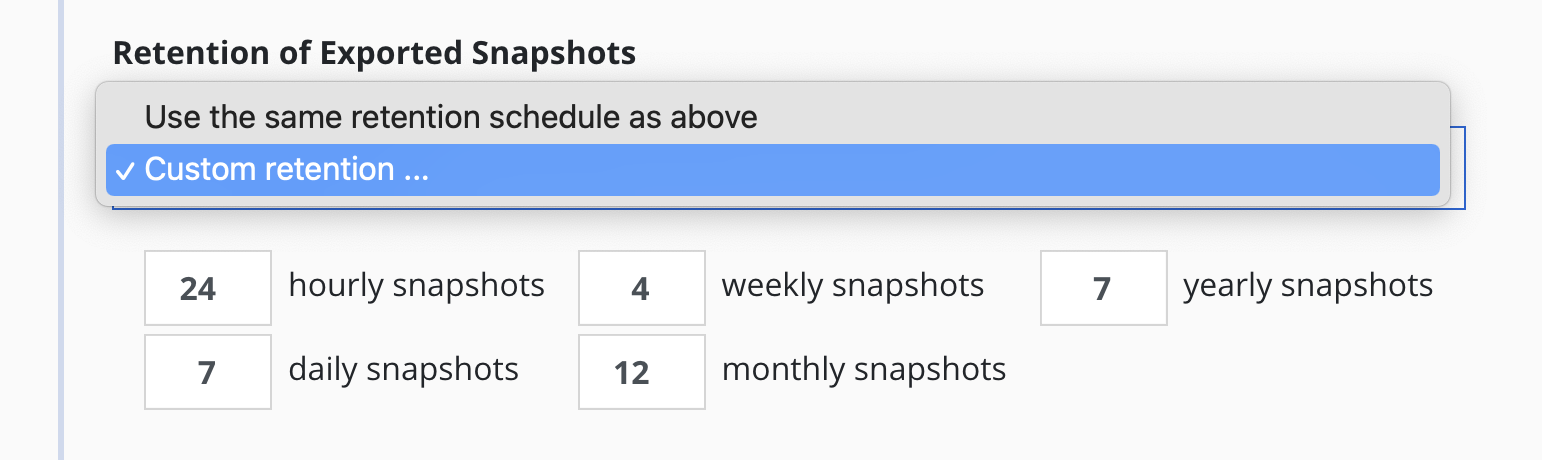

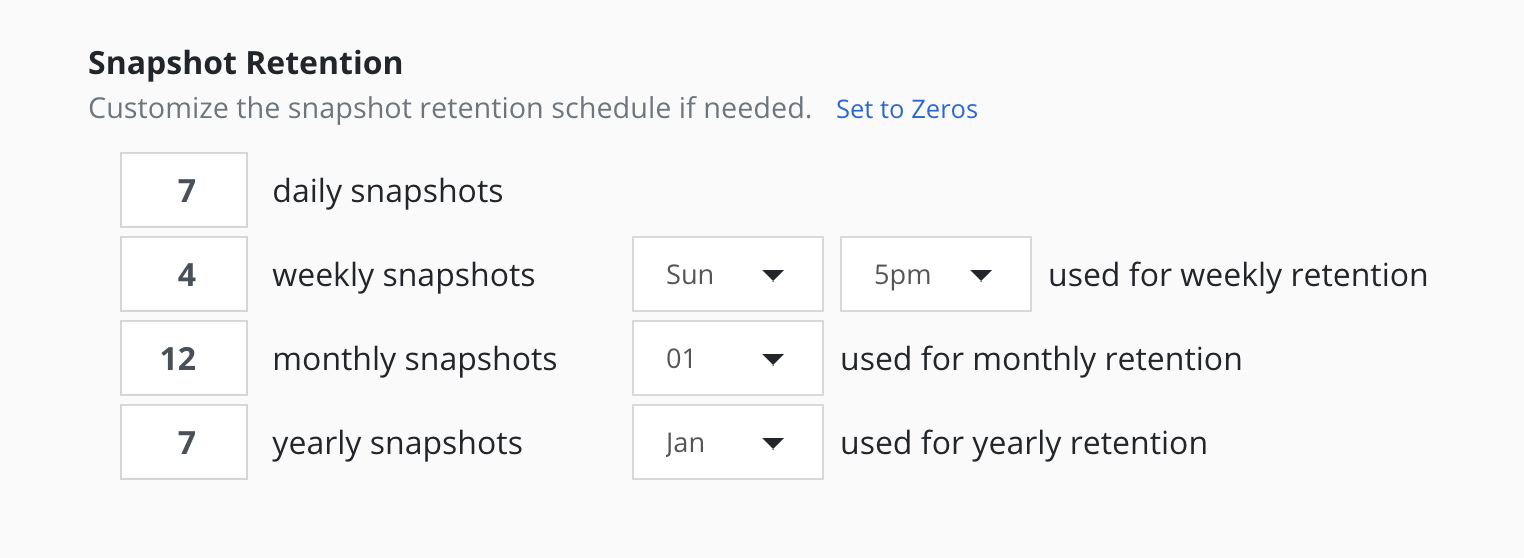

Retention Schedules

A powerful scheduling feature in K10 is the ability to use a GFS retention scheme for cost savings and compliance reasons. With this backup rotation scheme, hourly snapshots and backups are rotated on an hourly basis with one graduating to daily every day and so on. It is possible to set the number of hourly, daily, weekly, monthly, and yearly copies that need to be retained and K10 will take care of both cleanup at every retention tier as well as graduation to the next one.

By default, backup retention schedules will be set to be the same as snapshot retention schedules but these can be set to independent schedules if needed. This allows users to create policies where a limited number of snapshots are retained for fast recovery from accidental outages while a larger number of backups will be stored for long-term recovery needs. This separate retention schedule is also valuable when limited number of snapshots are supported on the volume but a larger backup retention count is needed for compliance reasons.

The retention schedule for a policy does not apply to snapshots and

backups produced by

manual policy runs. Any artifacts created by

a manual policy run will need to be manually cleaned up.

Snapshots and backups created by scheduled runs of a policy can be

retained and omitted from the retention counts by adding a

k10.kasten.io/doNotRetire: "true" label to the

RunAction created for the policy run.

Advanced Schedule Options

By default, actions set to hourly will execute at the top of the hour and other actions will execute at midnight UTC.

The Advanced Options settings enable picking how many times and when

actions are executed within the interval of the frequency. For example,

for a daily frequency, what hour or hours within each day and what minute

within each hour can be set.

The retention schedule for the policy can be customized to select which snapshots and backups will graduate and be retained according to the longer period retention counts.

By default, hourly retention counts apply to the hourly at the top of the hour, daily retention counts apply to the action at midnight, weekly retention counts refer to midnight Sunday, monthly retention counts refer to midnight on the 1st of each month, and yearly retention counts refer to midnight on the 1st of January (all UTC).

When using sub-frequencies with multiple actions per period, all of the actions are retained according to the retention count for that frequency.

The Advanced Options settings allows a user to display and enter times in either local time or UTC. All times are converted to UTC and K10 policy schedules do not change for daylight savings time.

Application Selection and Exceptions

This section describes how policies can be bound to applications, how namespaces can be excluded from policies, how policies can protect cluster-scoped resources, and how exceptions can be handled.

Application Selection

You can select applications by two specific methods:

Application Names

Labels

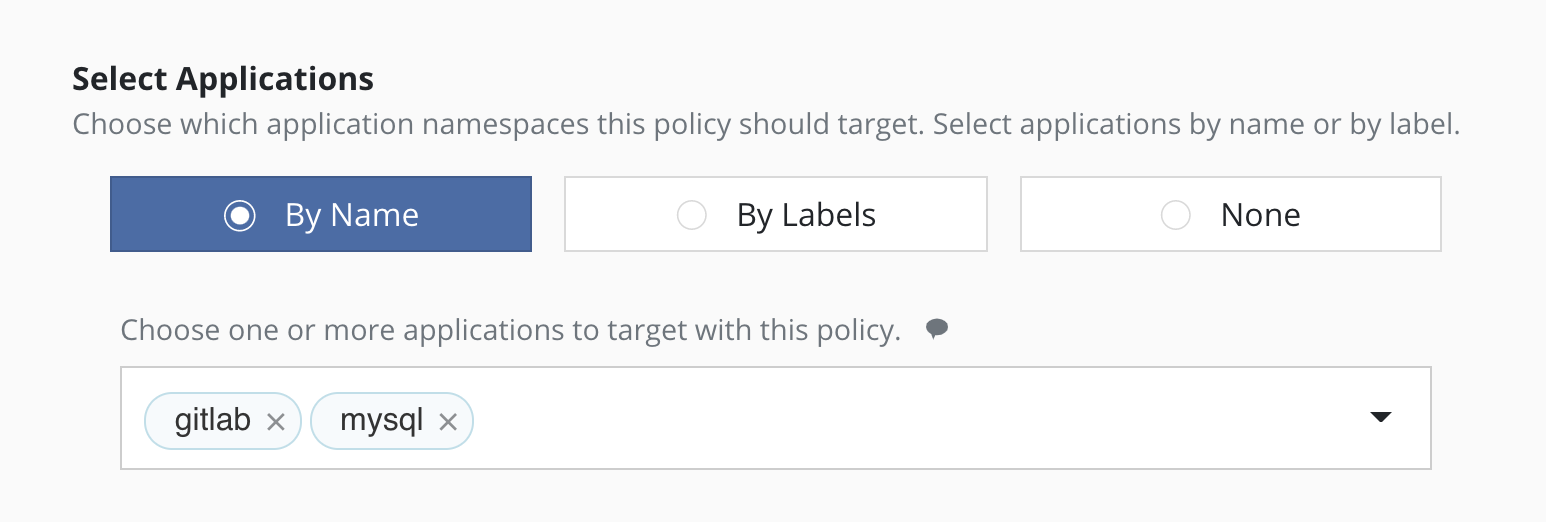

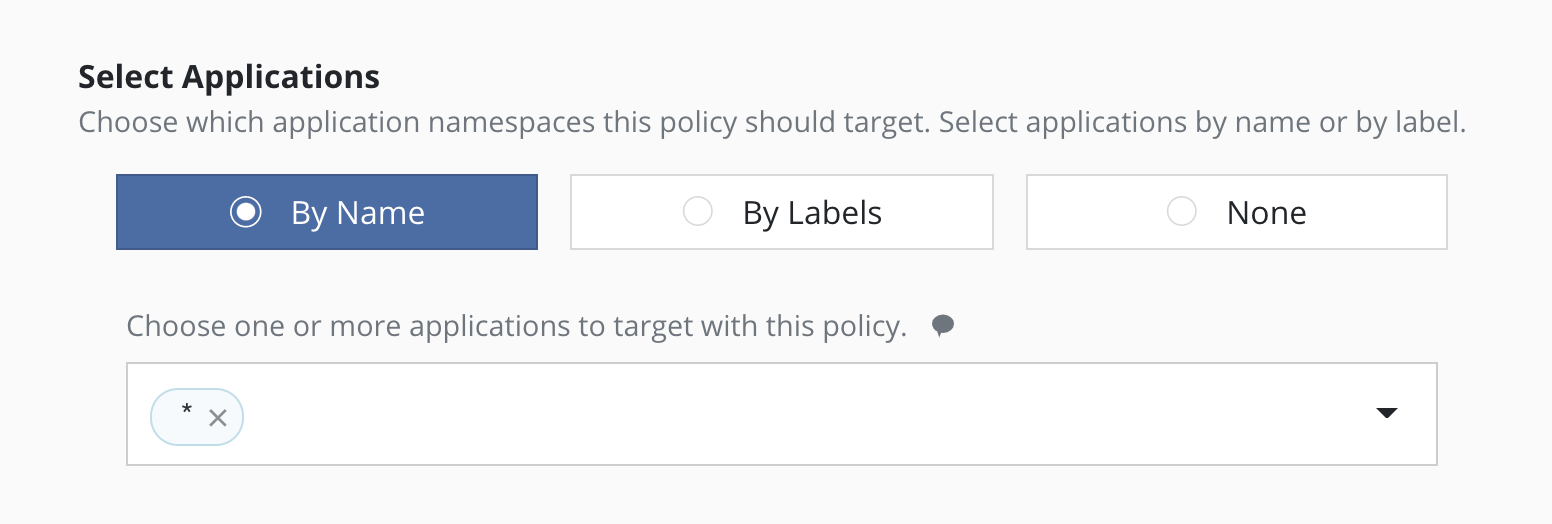

Selecting By Application Name

The most straightforward way to apply a policy to an application is to use its name (which is derived from the namespace name). Note that you can select multiple application names in the same policy.

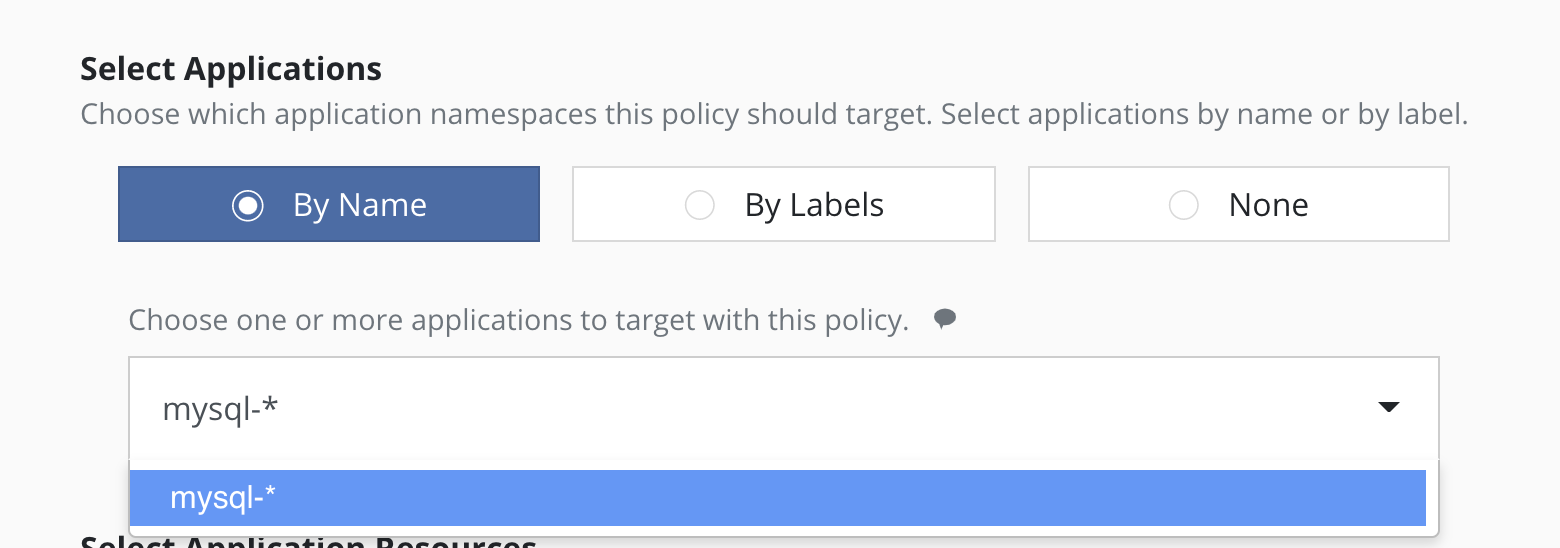

Selecting By Application Name Wildcard

For policies that need to span similar applications, you can select applications by an application name wildcard. Wildcard selection will match all application that start with the wildcard specified.

For policies that need to span all applications, you can select

all applications with a * wildcard.

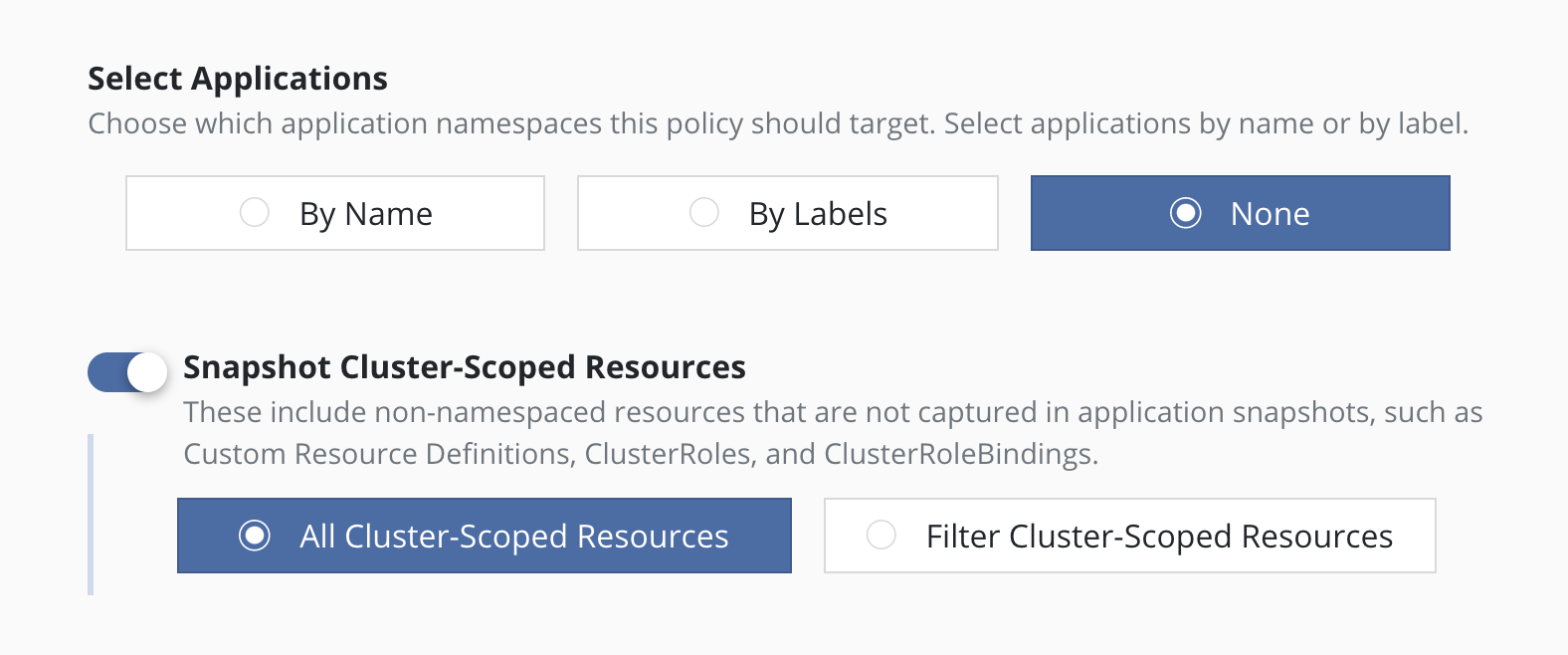

Selecting No Applications

For policies that protect only cluster-scoped resources and do not target any applications, you can select "None". For more information about protecting cluster-scoped resources, see Cluster-Scoped Resources.

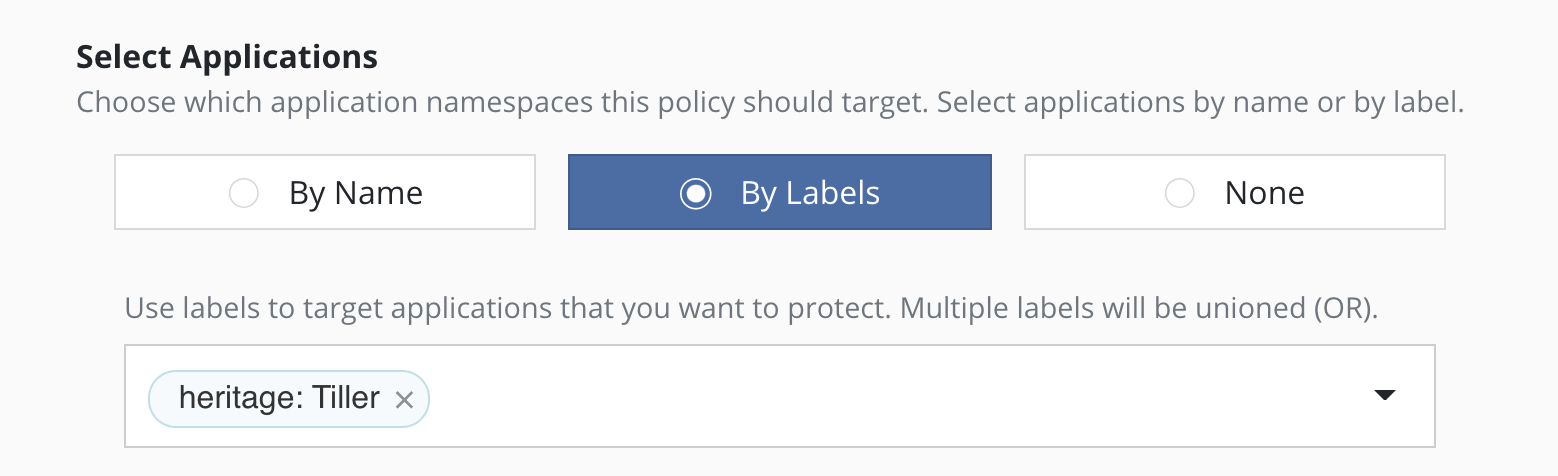

Selecting By Labels

For policies that need to span multiple applications (e.g., protect all applications that use MongoDB or applications that have been annotated with the gold label), you can select applications by label. Any application (namespace) that has a matching label as defined in the policy will be selected. Matching occurs on labels applied to namespaces, deployments, and statefulsets. If multiple labels are selected, a union (logical OR) will be performed when deciding to which applications the policy will be applied. All applications with at least one matching label will be selected.

Note that label-based selection can be used to create forward-looking

policies as the policy will automatically apply to any future

application that has the matching label. For example, using the

heritage: Tiller (Helm v2) or heritage: Helm (Helm v3)

selector will apply the policy you are creating to any new

Helm-deployed applications as the Helm package manager

automatically adds that label to any Kubernetes workload it creates.

Namespace Exclusion

Even if a namespace is covered by a policy, it is possible to have the

namespace be ignored by the policy. You can add the

k10.kasten.io/ignorebackuppolicy annotation to the namespace(s)

you want to be ignored. Namespaces that are tagged with the

k10.kasten.io/ignorebackuppolicy annotation will be skipped during

scheduled backup operations.

Exceptions

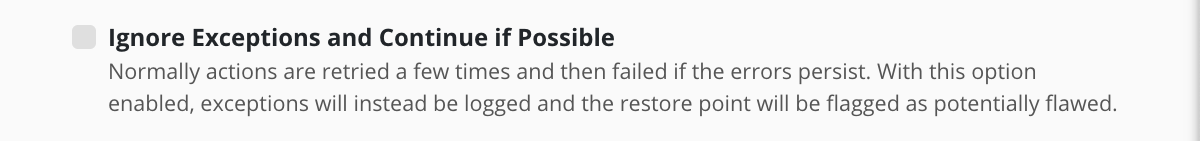

Normally K10 retries when errors occur and then fails the action or policy run if errors persist. In some circumstances it is desirable to treat errors as exceptions and continue the action if possible.

Examples of when K10 does this automatically include:

When a Snapshot policy selects multiple applications by label and creates durable backups by exporting snapshots, K10 treats failures across applications independently.

If the snapshot for an application fails after all retries, that application is not exported. If snapshots for some applications succeed and snapshots for other applications fail, the failures are reported as exceptions in the policy run. If snapshots for all applications fail, the policy run fails and the export is skipped.

If the export of a snapshot for an application fails after retries, that application is not exported. If the export of snapshots for some applications succeed and others fail, the failures are reported as exceptions in the export action and policy run. If no application is successfully exported, the export action and policy run fail.

In some cases, treating errors as exceptions is optional:

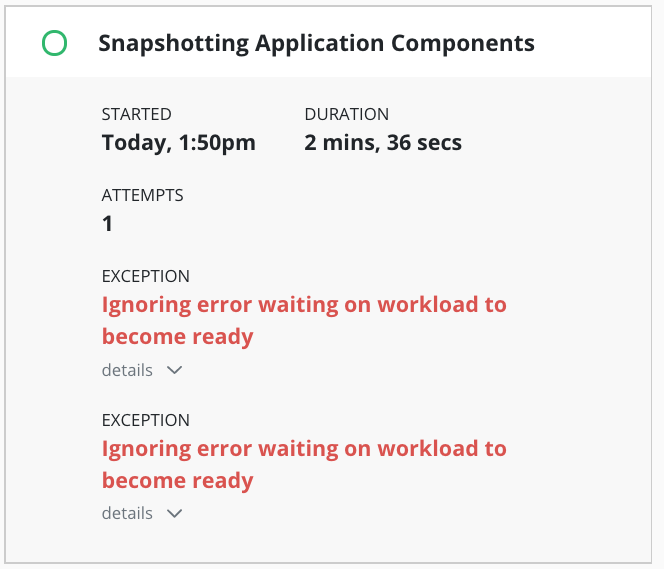

K10 normally waits for workloads (e.g., Deployments or StatefulSets) to become ready before taking snapshots and fails the action if a workload does not become ready after retries. In some cases the desired path for a backup action or policy might be to ignore such timeouts and to proceed to capture whatever it can in a best-effort manner and store that as a restore point.

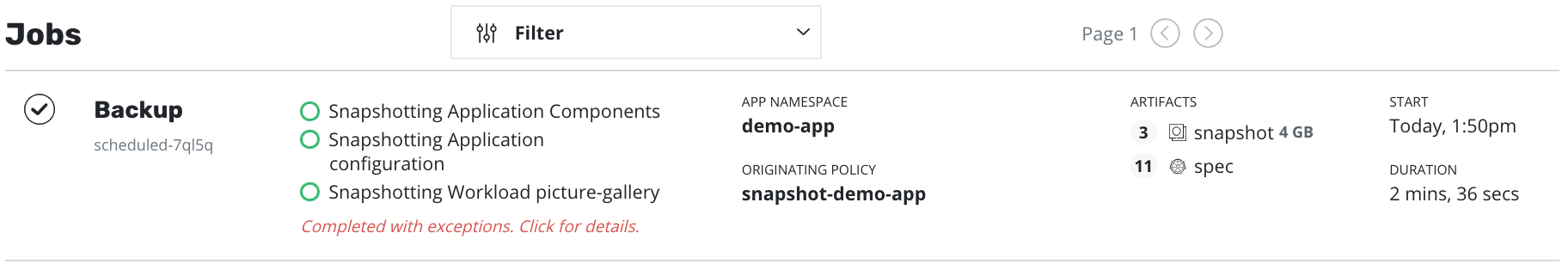

When an exception occurs, the job will be completed with exception(s):

Details of the exceptions can be seen with job details:

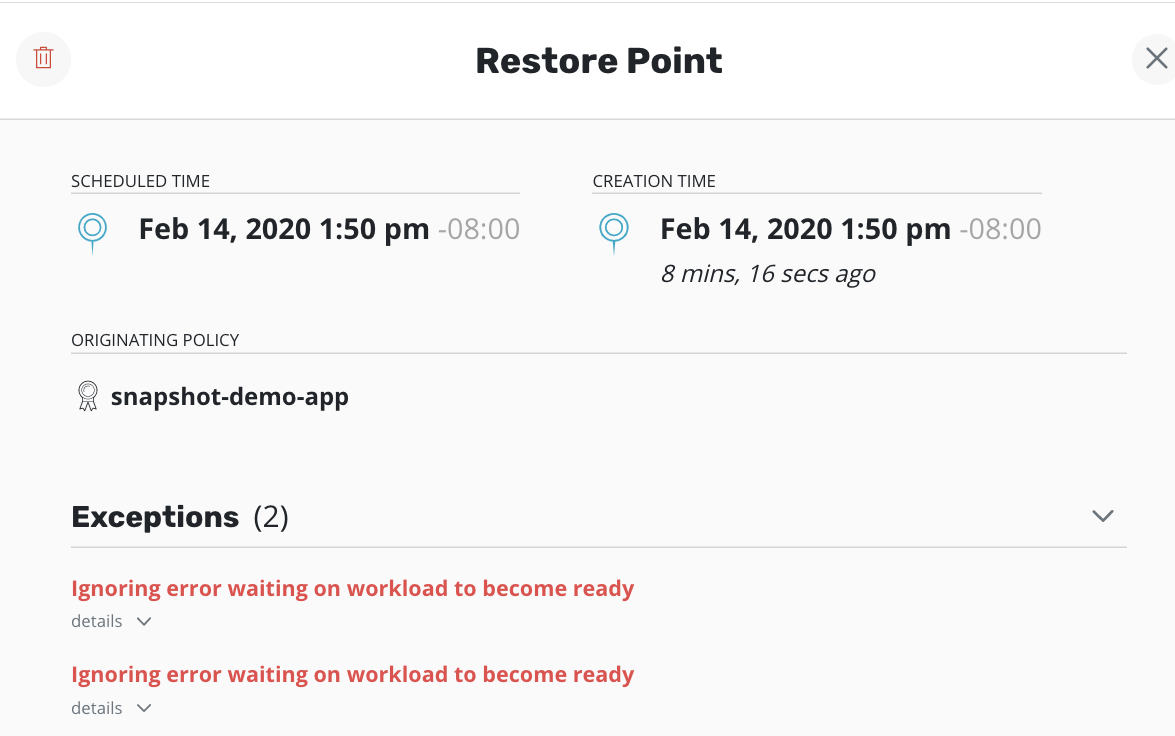

Any exception(s) ignored when creating a restore point are noted in the restore point:

Resource Filtering

This section describes how specific application resources can either be included or excluded from capture or restoration.

Warning

Filters should be used with care. It is easy to accidentally define a policy that might leave out essential components of your application.

Resource filtering is supported for both backup policies and restore actions. The recommended best practice is to create backup policies that capture all resources to future-proof restores and to use filters to limit what is restored.

In K10, filters describe which Kubernetes resources should be included or excluded in the backup. If no filters are specified, all the API resources in a namespace are captured by the BackupActions created by this Policy.

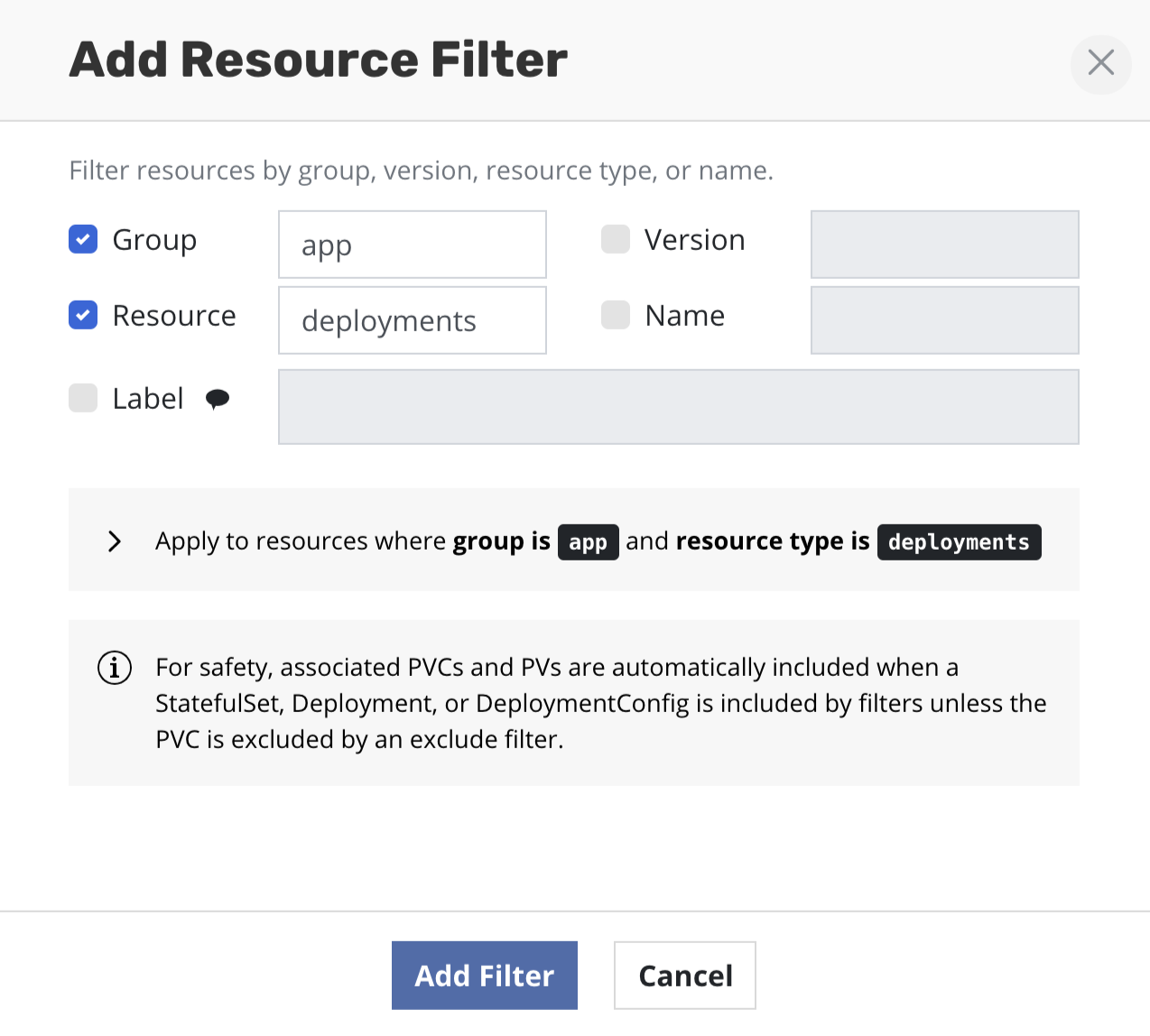

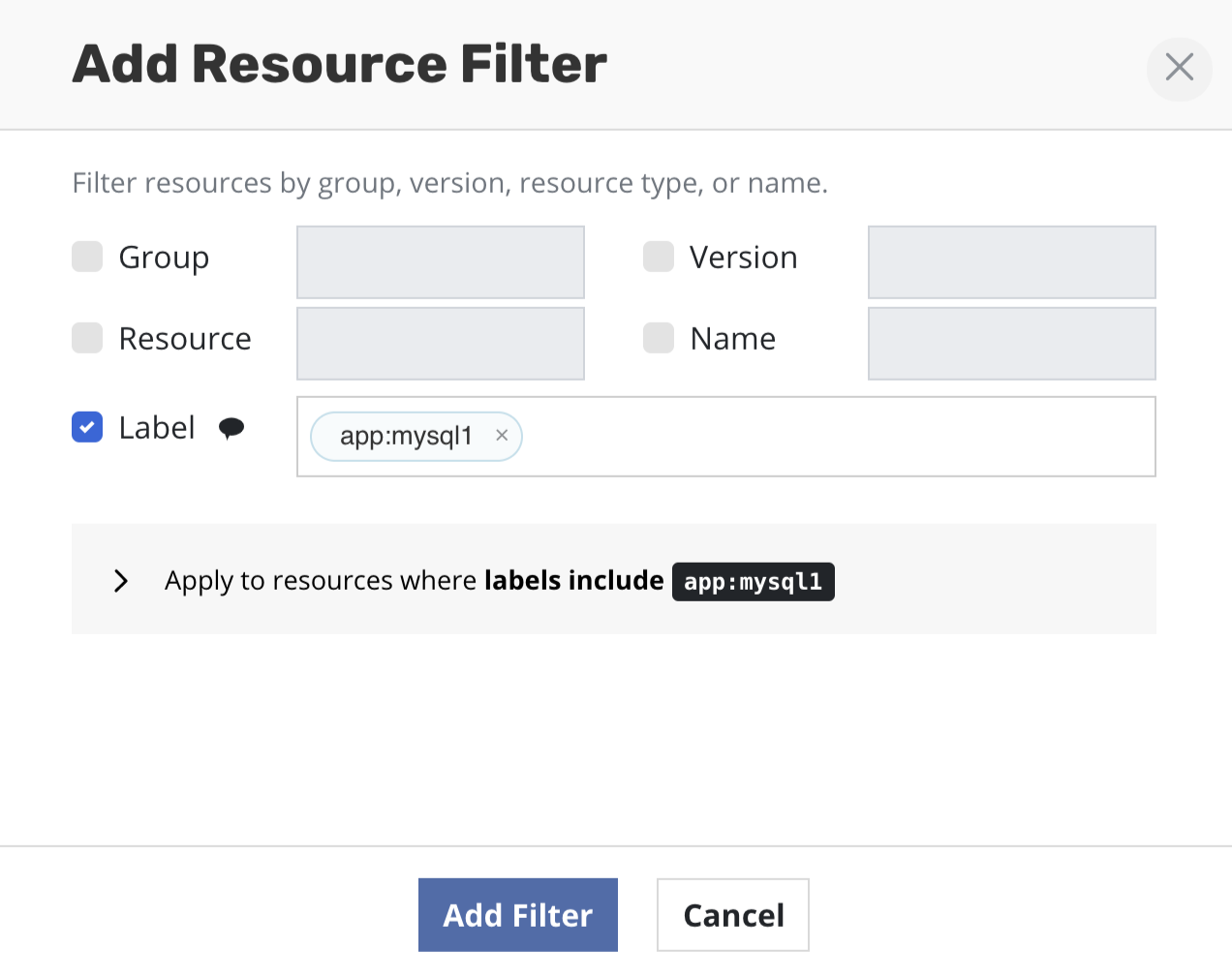

Filtering Resources by GVRN

Resource types are identified by group, version, and resource type

names, or GVR

(e.g., networking.k8s.io/v1/networkpolicies). Core Kubernetes

types do not have a group name and are identified by just a version

and resource type name (e.g., v1/configmaps). Individual

resources are identified by their resource type and resource name, or

GVRN. In a filter, an empty or omitted group, version, resource type

or resource name matches any value. For example, if you set Group:

apps and Resource: deployments, it will capture all

Deployments no matter the API Version (e.g., v1 or

v1beta1).

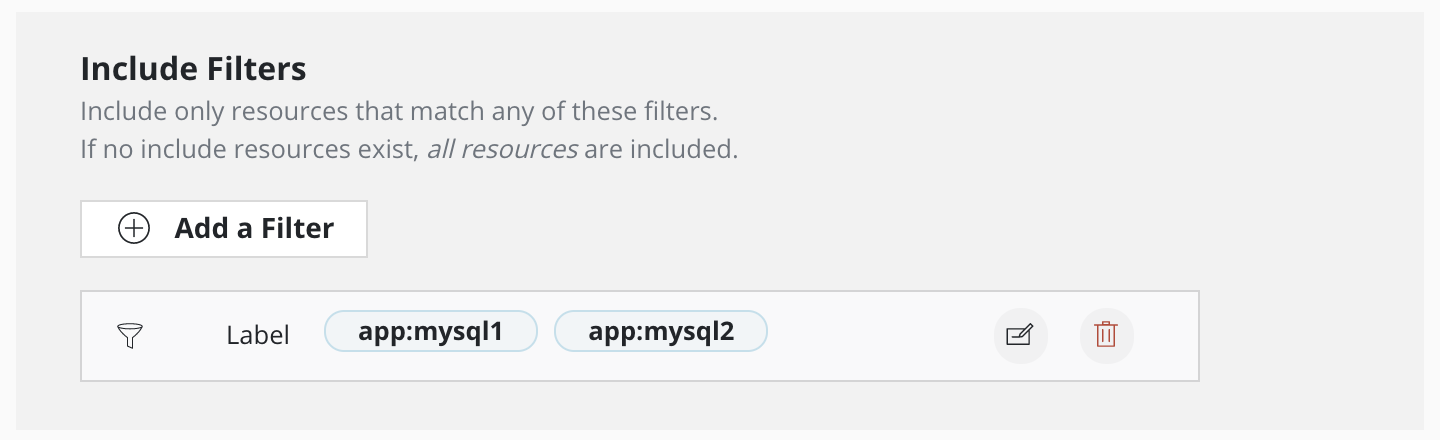

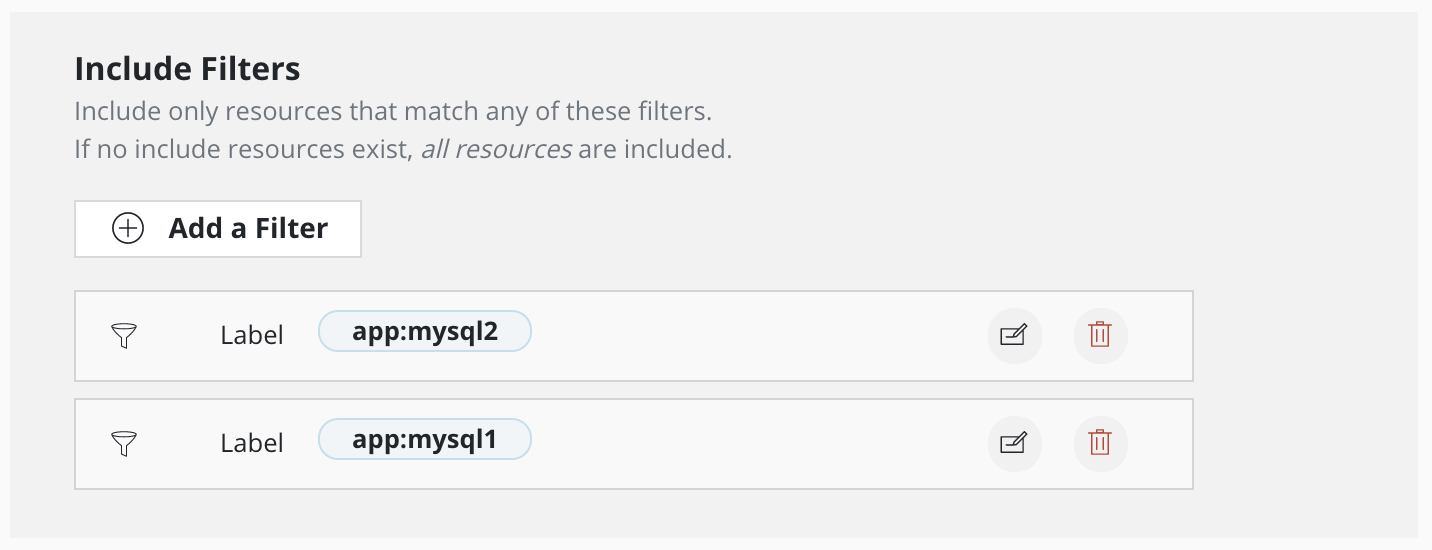

Filters reduce the resources in the backup by first selectively including and then excluding resources:

If no include or exclude filters are specified, all the API resources belonging to an application are included in the set of resources to be backed up

If only include filters are specified, resources matching any GVRN entry in the include filter are included in the set of resources to be backed up

If only exclude filters are specified, resources matching any GVRN entry in the exclude filter are excluded from the set of resources to be backed up

If both include and exclude filters are specified, the include filters are applied first and then exclude filters will be applied only on the GVRN resources selected by the include filter

For a full list of API resources in your cluster, run kubectl

api-resources.

Filtering Resources by Labels

Note

Non-namespaced objects, such as StorageClasses, may not be affected by label filters.

K10 also supports filtering resources by labels when taking a backup. This is particularly useful when there are multiple apps running in a single namespace. By leveraging label filters, it is possible to selectively choose which application to backup. The rules from the previous section describing the use of include and exclude filters apply to label filters as well.

Multiple labels can be provided as a part of the same filter if they are to be applied together. Conversely, multiple filters each with their own label can be provided together signifying that any of the labels should match.

Safe Backup

For safety, K10 automatically includes namespaced and non-namespaced resources such as associated volumes (PVCs and PVs) and StorageClasses when a StatefulSet, Deployment, or DeploymentConfig is included by filters. Such auto-included resources can be omitted by specifying an exclude filter.

Similarly, given the strict dependency between the objects, K10 protects Custom Resource Definitions (CRDs) if a Custom Resource (CR) is included in a backup. However, it is not possible today to exclude a CRD via exclude filters and any failure to exclude will be silently ignored.

Working With Policies

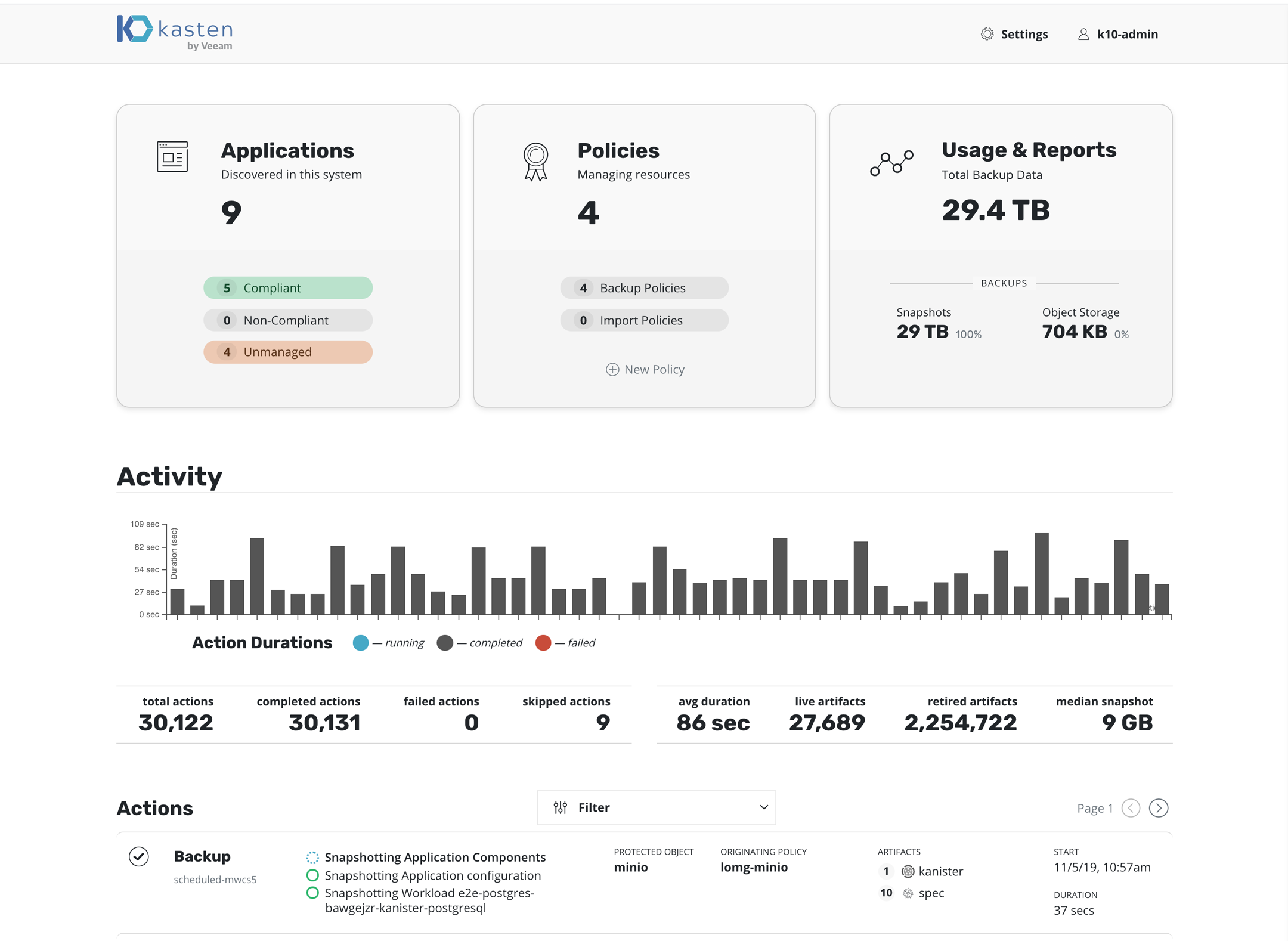

Viewing Policy Activity

Once you have created a policy and have navigated back to the main dashboard, you will see the selected applications quickly switch from unmanaged to non-compliant (i.e., a policy covers the objects but no action has been taken yet). They will switch to compliant as snapshots and backups are run as scheduled or manually and the application enters a protected state. You can also scroll down on the page to see the activity, how long each snapshot took, and the generated artifacts. Your page will now look similar to this:

More detailed job information can be obtained by clicking on the in-progress or completed jobs.

Manual Policy Runs

It is possible to manually create a policy run by going to the policy

page and clicking the run once button on the desired policy. Note that

any artifacts created by this action will not be eligible for

automatic retirement and will need to be manually cleaned up.

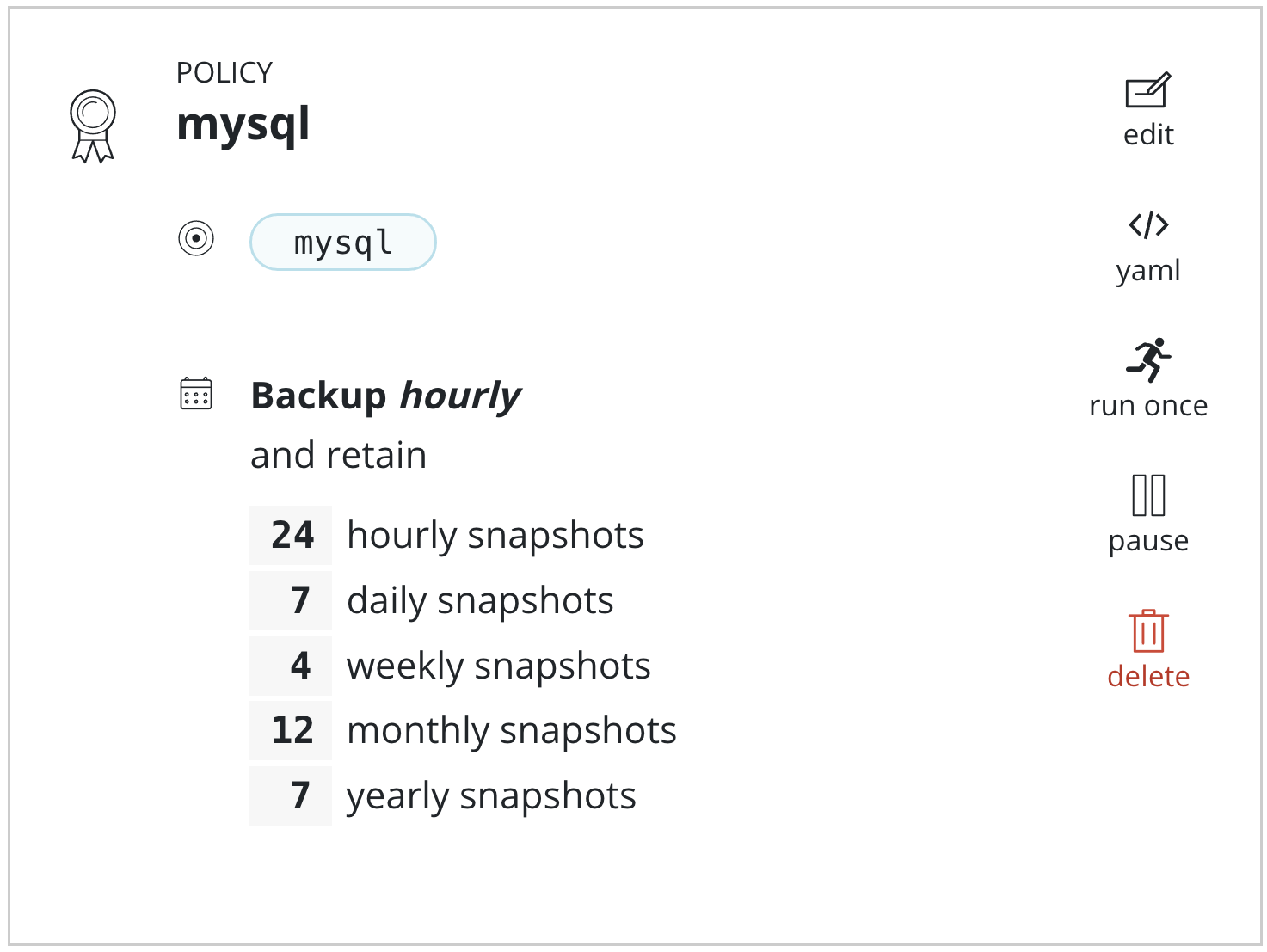

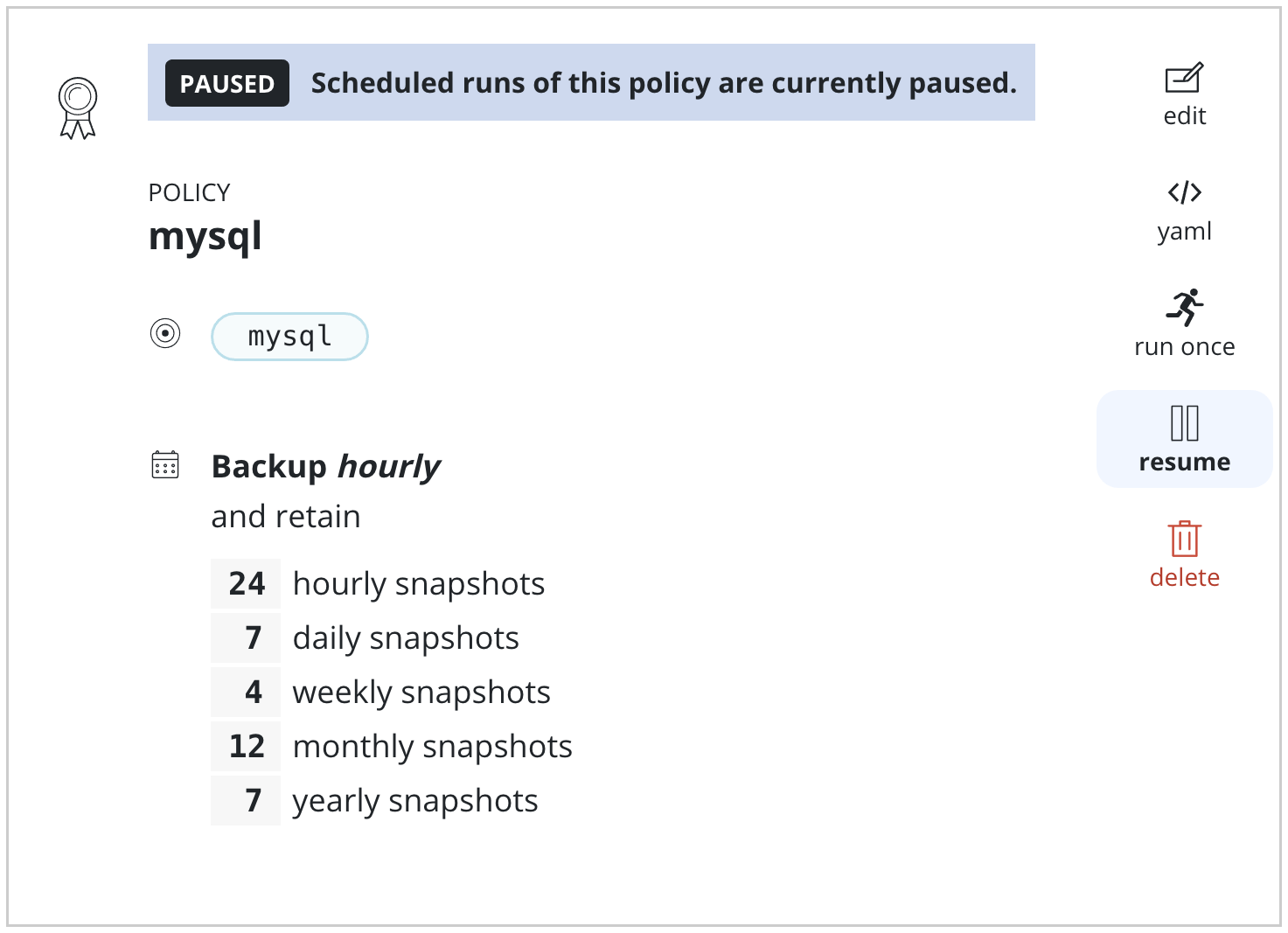

Pausing Policies

It is possible to pause new runs of a policy by going to

the policy page and clicking the pause button on the desired policy.

Once a policy is resumed by clicking the resume button, the next policy

run will be at the next scheduled

time after the policy is resumed.

Paused policies do not generate skipped jobs and are ignored for the purposes of compliance. Applications that are only protected by paused policies are marked as unmanaged.

Editing Policies

It is also possible to edit created policies by clicking the edit button on the policies page.

Policies, a Kubernetes Custom Resource (CR), can also be edited directly by manually modifying the CR's YAML through the dashboard or command line.

Changes made to the policy (e.g., new labels added or resource filtering applied) will take effect during the next scheduled policy run.

Careful attention should be paid to changing a policy's retention schedule as that action will automatically retire and delete restore points that no longer fall under the new retention scheme.

Editing retention counts can change the number of restore points retained at each tier during the next scheduled policy run. The retention counts at the start of a policy run apply to all restore points created by the policy, including those created when the policy had different retention counts. Editing Advanced Schedule Options can change when a policy runs in the future and which restore points created by future policy runs will graduate and be retained by which retention tiers.

Restore points graduate to higher retention tiers according to the retention schedule in effect when the restore point is created. This protects previous restore points when the retention schedule changes.

For example, consider a policy that runs hourly at 20 minutes after the hour and retains 1 hourly and 7 daily snapshots with the daily coming at 22:20. At steady state that policy will have 7 or 8 restore points. If that policy is edited to run at 30 minutes after the hour and retain the 23:30 snapshot as a daily, when the policy next runs at 23:30 it will retain the newly created snapshot as both an hourly and a daily. The 6 most recent snapshots created at 22:20 will be retained, and the oldest snapshot from 22:20 will be retired.

Disabling Backup Exports In A Policy

Care should be taken when disabling backup/exports from a policy. If no independent export retention schedule existed, no new exports will be created and the prior exports will be retired as before. The exported artifacts will be retired in the future at the same time as the snapshot artifacts from each policy run.

If an independent retention schedule existed for export, editing the policy to remove exports will remove the independent export retention counts from the policy. Upon the next successful policy run, the snapshot retirement schedule will determine which previous artifacts to retain and which to retire based upon the policy’s retention table. Retiring a policy run will retire both snapshot and export artifacts. Either snapshot or export artifacts for a retiring policy run may already have been retired if the prior export retention values were higher or lower than the policy retention values.

Deleting A Policy

You can easily delete a policy from the policies page or using the API. However, in the interests of safety, deleting a policy will not delete all the restore points that were generated by it. Restore points from deleted policies can be manually deleted from the Application restore point view or via the API.