Storage Integration¶

K10 supports direct integration with public cloud storage vendors, direct Ceph integration, and CSI integration. Most integrations are transparent; the sections below document the configuration needed for the exceptions.

Direct Provider Integration¶

K10 supports seamless and direct storage integration with a number of storage providers. The following storage providers are either automatically discovered and configured within K10 or can be configured for direct integration:

Container Storage Interface (CSI)¶

Apart from direct storage provider integration, K10 also supports invoking volume snapshot operations via the Container Storage Interface (CSI). For this to work correctly, the following requirements must be met.

CSI Requirements¶

Kubernetes v1.14.0 or higher

The VolumeSnapshotDataSource feature has been enabled in the Kubernetes cluster

A CSI driver that has Volume Snapshot support. Please look at the list of CSI drivers to confirm snapshot support.

Pre-Flight Checks¶

Assuming that the default kubectl context is pointed to a cluster

with CSI enabled, CSI pre-flight checks can be run by deploying the

primer tool with a specified StorageClass. This tool runs in a

pod in the cluster and performs the following operations:

Creates a sample application with a persistent volume and writes some data to it

Takes a snapshot of the persistent volume

Creates a new volume from the persistent volume snapshot

Validates the data in the new persistent volume

First, run the following command to derive the list of provisioners along with their StorageClasses and VolumeSnapshotClasses.

$ curl -s https://docs.kasten.io/tools/k10_primer.sh | bash

Then, run the following command with a valid StorageClass to deploy the pre-check tool:

$ curl -s https://docs.kasten.io/tools/k10_primer.sh | bash /dev/stdin -s ${STORAGE_CLASS}

CSI Snapshot Configuration¶

For each CSI driver, ensure that a VolumeSnapshotClass has been added

with the K10 annotation (k10.kasten.io/is-snapshot-class: "true").

Note that CSI snapshots are not durable. In particular, CSI snapshots

have a namespaced VolumeSnapshot object and a non-namespaced

VolumeSnapshotContent object. With the default (and recommended)

deletionPolicy, if there is a deletion of a volume or the

namespace containing the volume, the cleanup of the namespaced

VolumeSnapshot object will lead to the cascading delete of the

VolumeSnapshotContent object and therefore the underlying storage

snapshot.

Setting deletionPolicy to Retain isn't sufficient either as

some storage systems will force snapshot deletion if the associated

volume is deleted (snapshot lifecycle is not independent of the

volume). Similarly, it might be possible to force-delete snapshots

through the storage array's native management interface. Enabling

backups together with volume snapshots is therefore required for a

durable backup.

K10 creates a clone of the original VolumeSnapshotClass with the DeletionPolicy set to 'Retain'. When restoring a CSI VolumeSnapshot, an independent replica is created using this cloned class to avoid any accidental deletions of the underlying VolumeSnapshotContent.

VolumeSnapshotClass Configuration¶

For the v1alpha1 snapshot API:

apiVersion: snapshot.storage.k8s.io/v1alpha1
snapshotter: hostpath.csi.k8s.io
kind: VolumeSnapshotClass
metadata:
  annotations:
    k10.kasten.io/is-snapshot-class: "true"
  name: csi-hostpath-snapclass

For the v1beta1 snapshot API:

apiVersion: snapshot.storage.k8s.io/v1beta1
driver: hostpath.csi.k8s.io
kind: VolumeSnapshotClass
metadata:
  annotations:
    k10.kasten.io/is-snapshot-class: "true"
  name: csi-hostpath-snapclass

Given the configuration requirements, the above code illustrates a correctly-configured VolumeSnapshotClass for K10. If the VolumeSnapshotClass does not match the above template, please follow the below instructions to modify it. If the existing VolumeSnapshotClass cannot be modified, a new one can be created with the required annotation.
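Where a new class has to be created, a small helper can render the manifest. The following is a minimal sketch, not K10 tooling: the function name render_k10_snapshot_class is hypothetical, it targets the v1beta1 API, and it sets deletionPolicy to Delete as an assumption, since the v1beta1 schema expects that field to be present.

```shell
# Hypothetical helper: prints a v1beta1 VolumeSnapshotClass manifest that
# carries the K10 annotation. Arguments: <class-name> <csi-driver-name>.
# Assumption: deletionPolicy is set to Delete; adjust it to your needs.
render_k10_snapshot_class() {
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1beta1
kind: VolumeSnapshotClass
metadata:
  name: $1
  annotations:
    k10.kasten.io/is-snapshot-class: "true"
driver: $2
deletionPolicy: Delete
EOF
}

# Example (needs cluster access, so it is left commented out):
# render_k10_snapshot_class k10-hostpath-snapclass hostpath.csi.k8s.io | kubectl apply -f -
```

Rendering to standard output first makes it possible to review the manifest before it is applied.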

Whenever K10 detects volumes that were provisioned via a CSI driver, it will look for a VolumeSnapshotClass with the K10 annotation for the identified CSI driver and use it to create snapshots. You can annotate an existing VolumeSnapshotClass using:

$ kubectl annotate volumesnapshotclass ${VSC_NAME} \
    k10.kasten.io/is-snapshot-class=true

Verify that only one VolumeSnapshotClass per storage provisioner has the K10 annotation. Currently, if no VolumeSnapshotClass or more than one has the K10 annotation, snapshot operations will fail.

# List the VolumeSnapshotClasses with K10 annotation
$ kubectl get volumesnapshotclass -o json | \
    jq '.items[] | select (.metadata.annotations["k10.kasten.io/is-snapshot-class"]=="true") | .metadata.name'
k10-snapshot-class
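The one-annotated-class-per-provisioner rule can also be checked mechanically. The sketch below is an illustration only: check_k10_snapshot_classes is a hypothetical helper that reads lines of the form "class-name driver annotation-value" and reports every driver that does not have exactly one annotated class. The kubectl invocation in the trailing comment assumes the v1beta1 field name .driver (v1alpha1 uses .snapshotter instead).

```shell
# Hypothetical helper: reads "<class-name> <driver> <annotation-value>" lines
# on stdin. Prints each driver whose number of annotated classes is not 1 and
# returns a non-zero status if any such driver is found.
check_k10_snapshot_classes() {
  awk '
    { drivers[$2] = 1 }
    $3 == "true" { count[$2]++ }
    END {
      status = 0
      for (d in drivers) {
        n = count[d] + 0
        if (n != 1) {
          printf "%s: %d annotated VolumeSnapshotClasses (expected 1)\n", d, n
          status = 1
        }
      }
      exit status
    }'
}

# Example against a live cluster (left commented out):
# kubectl get volumesnapshotclass -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.driver}{" "}{.metadata.annotations.k10\.kasten\.io/is-snapshot-class}{"\n"}{end}' \
#   | check_k10_snapshot_classes
```

A driver with zero annotated classes is reported as well; that is only a problem if the StorageClass annotation described in the next section is not used instead.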

StorageClass Configuration¶

As an alternative to the above method, a StorageClass can be

annotated with the name of the VolumeSnapshotClass to use

(k10.kasten.io/volume-snapshot-class: "VSC_NAME").

All volumes created with this StorageClass will be snapshotted using

the specified VolumeSnapshotClass:

$ kubectl annotate storageclass ${SC_NAME} \
    k10.kasten.io/volume-snapshot-class=${VSC_NAME}

Migration Requirements¶

If application migration across clusters is needed, ensure that the VolumeSnapshotClass names match between both clusters. As the VolumeSnapshotClass is also used for restoring volumes, an identical name is required.
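One way to confirm that the names match is to dump the class names from each cluster and compare the two lists. This is a minimal sketch under stated assumptions: missing_snapshot_classes is a hypothetical helper, and SRC_CONTEXT and DST_CONTEXT stand for whatever kubectl context names your two clusters use.

```shell
# Hypothetical helper: prints names listed in file $1 (source cluster) that
# are absent from file $2 (target cluster). Empty output means every source
# VolumeSnapshotClass name also exists on the target.
missing_snapshot_classes() {
  src_sorted=$(mktemp)
  dst_sorted=$(mktemp)
  sort -u "$1" > "$src_sorted"
  sort -u "$2" > "$dst_sorted"
  comm -23 "$src_sorted" "$dst_sorted"
  rm -f "$src_sorted" "$dst_sorted"
}

# Example against two live clusters (left commented out):
# kubectl --context "$SRC_CONTEXT" get volumesnapshotclass -o name > src.txt
# kubectl --context "$DST_CONTEXT" get volumesnapshotclass -o name > dst.txt
# missing_snapshot_classes src.txt dst.txt
```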

CSI Snapshotter Minimum Requirements¶

Finally, ensure that the csi-snapshotter container for all CSI

drivers you might have installed has a minimum version of v1.2.2. If

your CSI driver ships with an older version that has known bugs, it

might be possible to transparently upgrade in place using the

following code.

# For example, if you installed the GCP Persistent Disk CSI driver
# in namespace ${DRIVER_NS} with a statefulset (or deployment)
# name ${DRIVER_NAME}, you can check the snapshotter version as below:
$ kubectl get statefulset ${DRIVER_NAME} --namespace=${DRIVER_NS} \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.image}{"\n"}{end}'
gcr.io/gke-release/csi-provisioner:v1.0.1-gke.0
gcr.io/gke-release/csi-attacher:v1.0.1-gke.0
quay.io/k8scsi/csi-snapshotter:v1.0.1
gcr.io/dyzz-csi-staging/csi/gce-pd-driver:latest

# Snapshotter version is old (v1.0.1), update it to the required version.
$ kubectl set image statefulset/${DRIVER_NAME} csi-snapshotter=quay.io/k8scsi/csi-snapshotter:v1.2.2 \
    --namespace=${DRIVER_NS}
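When several drivers are installed, comparing image tags by eye is error-prone. The following sketch shows one way to test a csi-snapshotter image reference against the v1.2.2 minimum. The function name snapshotter_meets_minimum is hypothetical, and the parsing assumes the image reference ends in a tag of the form vMAJOR.MINOR.PATCH, optionally followed by a suffix such as -gke.0; any other tag (for example latest) is treated as too old.

```shell
# Hypothetical helper: returns 0 if the tag of image reference $1 is at least
# the minimum version $2 (default v1.2.2), and 1 otherwise.
snapshotter_meets_minimum() {
  tag=${1##*:}        # text after the last colon
  tag=${tag#v}        # drop a leading "v"
  tag=${tag%%-*}      # drop suffixes such as "-gke.0"
  min=${2:-v1.2.2}
  min=${min#v}
  awk -v have="$tag" -v want="$min" 'BEGIN {
    split(have, h, ".")
    split(want, w, ".")
    # Compare major, minor, and patch numerically, most significant first.
    for (i = 1; i <= 3; i++) {
      if (h[i] + 0 > w[i] + 0) exit 0
      if (h[i] + 0 < w[i] + 0) exit 1
    }
    exit 0
  }'
}

# Example:
# snapshotter_meets_minimum quay.io/k8scsi/csi-snapshotter:v1.0.1 || echo "upgrade needed"
```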

Pure Storage¶

For integrating K10 with Pure Storage, please follow Pure Storage's instructions on deploying the Pure Storage Orchestrator and the VolumeSnapshotClass.

Once the above two steps are completed, follow the instructions for K10 CSI integration. In particular, the Pure VolumeSnapshotClass needs to be annotated using the following command.

$ kubectl annotate volumesnapshotclass pure-snapshotclass \
    k10.kasten.io/is-snapshot-class=true

NetApp Trident¶

For integrating K10 with NetApp Trident, please follow NetApp's instructions on deploying Trident as a CSI provider and then follow the instructions above.

Ceph¶

K10 currently supports Ceph RBD snapshots and backups. CephFS support is planned for the near future.

CSI Integration¶

Note

If you are using Rook to install Ceph, K10 only supports Rook v1.3.0 and above. Previous versions had bugs that prevented restore from snapshots.

K10 supports integration with Ceph (RBD) via its CSI interface by following the instructions for CSI integration. In particular, the Ceph VolumeSnapshotClass needs to be annotated using the following command.

$ kubectl annotate volumesnapshotclass csi-rbdplugin-snapclass \
    k10.kasten.io/is-snapshot-class=true

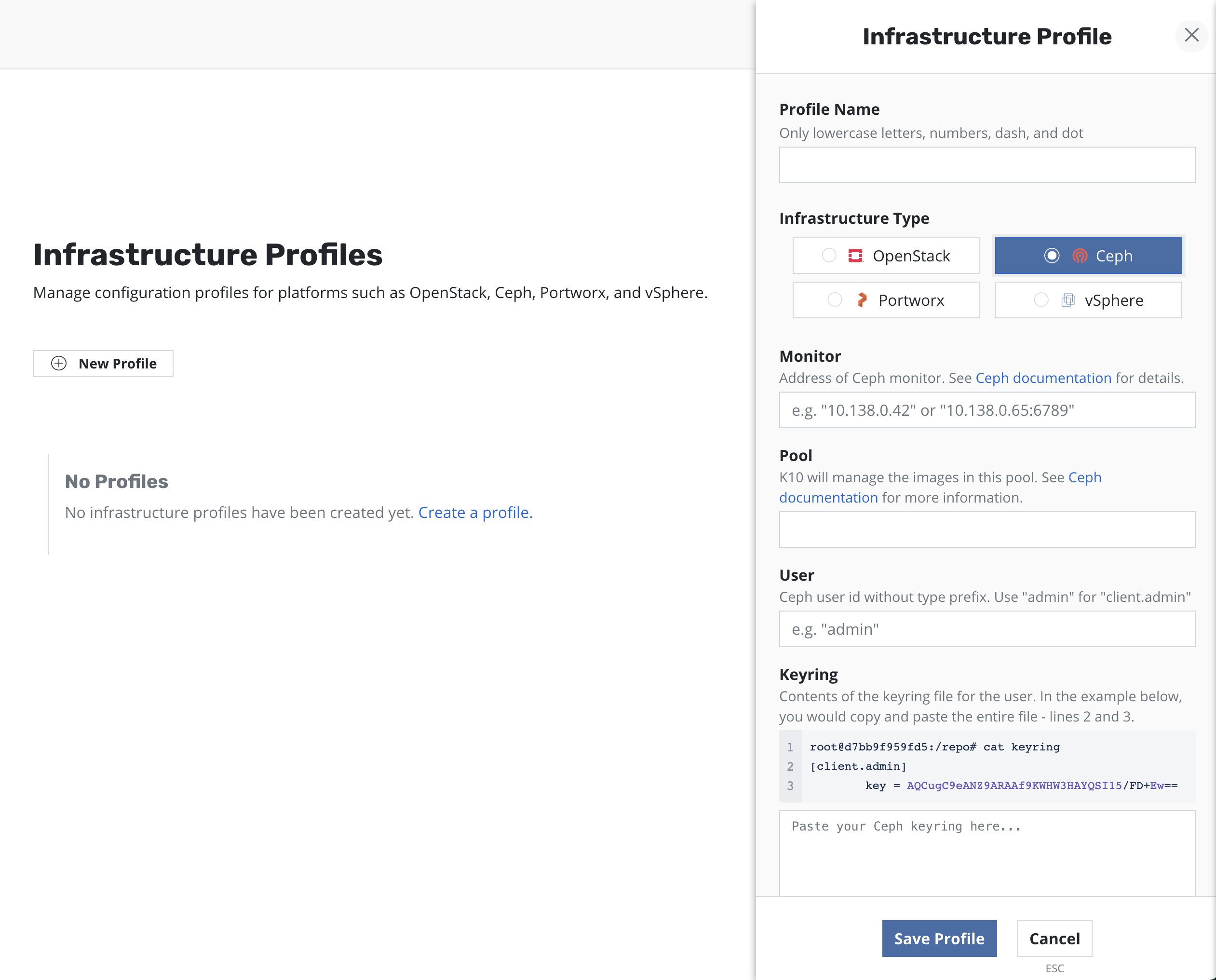

Direct Ceph Integration¶

Note

Non-CSI support for Ceph will be deprecated in an upcoming release in favor of direct CSI integration.

Apart from integration with Ceph's CSI driver, K10 also has native

support for Ceph (RBD) to protect persistent volumes provisioned using

the Ceph RBD provisioner. The imageFormat: "2" and

imageFeatures: "layering" parameters are required in the Ceph StorageClass

definition. A correctly configured Ceph StorageClass can be seen here.
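The two required parameters can be read back from an existing StorageClass and checked. This is only a sketch: ceph_sc_params_ok is a hypothetical helper, and SC_NAME in the commented example stands for the name of your Ceph StorageClass.

```shell
# Hypothetical helper: succeeds only if imageFormat ($1) is "2" and
# imageFeatures ($2) is "layering", as required for native Ceph RBD support.
ceph_sc_params_ok() {
  [ "$1" = "2" ] && [ "$2" = "layering" ]
}

# Example against a live cluster (left commented out):
# format=$(kubectl get storageclass "$SC_NAME" -o jsonpath='{.parameters.imageFormat}')
# features=$(kubectl get storageclass "$SC_NAME" -o jsonpath='{.parameters.imageFeatures}')
# ceph_sc_params_ok "$format" "$features" || echo "StorageClass $SC_NAME needs updating"
```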

An infrastructure profile must be created from the Settings menu.

The Monitor, Pool, User and Keyring fields must be specified.

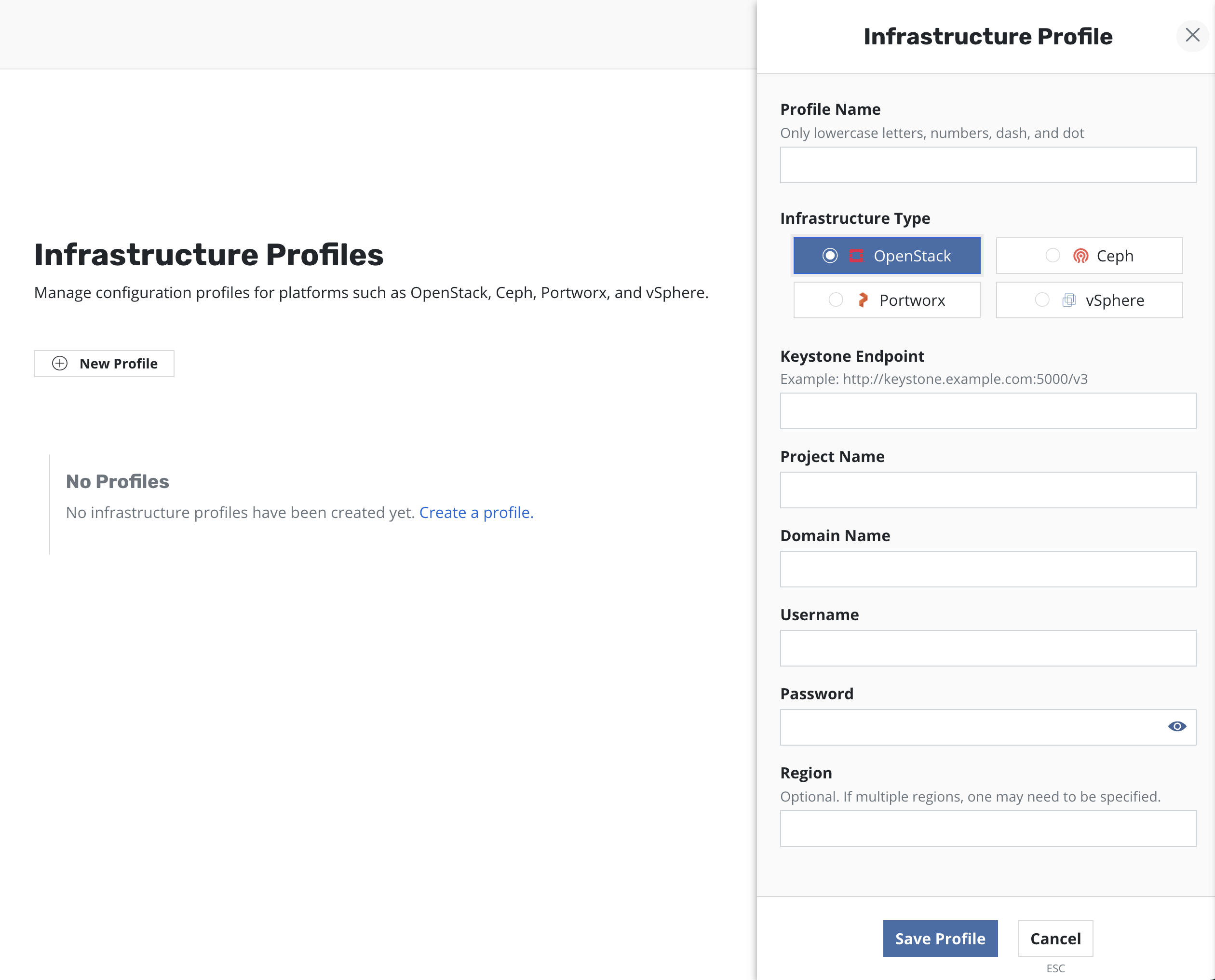

Cinder/OpenStack¶

K10 supports snapshots and backups of OpenStack's Cinder block storage.

To enable K10 to take snapshots, an OpenStack infrastructure profile must be created from the settings menu.

The Keystone Endpoint, Project Name, Domain Name, Username

and Password are required fields.

If the OpenStack environment spans multiple regions then the Region field

must also be specified.

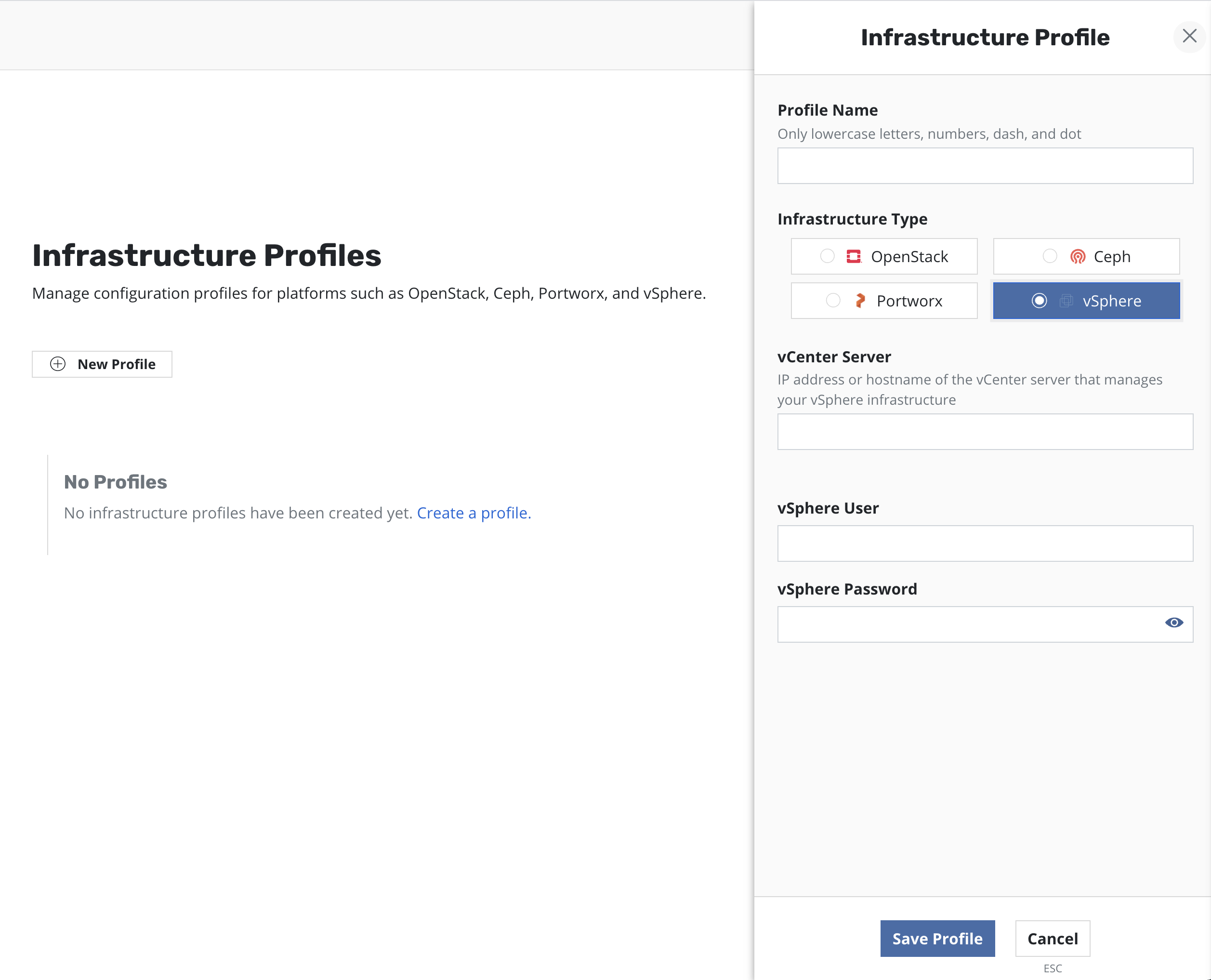

vSphere¶

K10 supports vSphere storage integration and can be enabled by creating a vSphere infrastructure profile from the Settings menu. Persistent Volumes must be provisioned using the vSphere CSI provisioner.

The vCenter Server is required and must be a valid IP address or hostname

that points to the vSphere infrastructure.

The vSphere User and vSphere Password fields are also required.

Note

It is recommended that a dedicated user account be created for K10. To authorize the account, create a role with the following privileges:

Datastore Privileges

    Allocate space

    Browse datastore

    Low level file operations

Global Privileges

    Disable methods

    Enable methods

    Licenses

Virtual Machine Snapshot Management Privileges

    Create snapshot

    Remove snapshot

    Revert to snapshot

Assign this role to the dedicated K10 user account on the following objects:

The root vCenter object

The datacenter objects (propagate down each subtree to reach datastore and virtual machine objects)

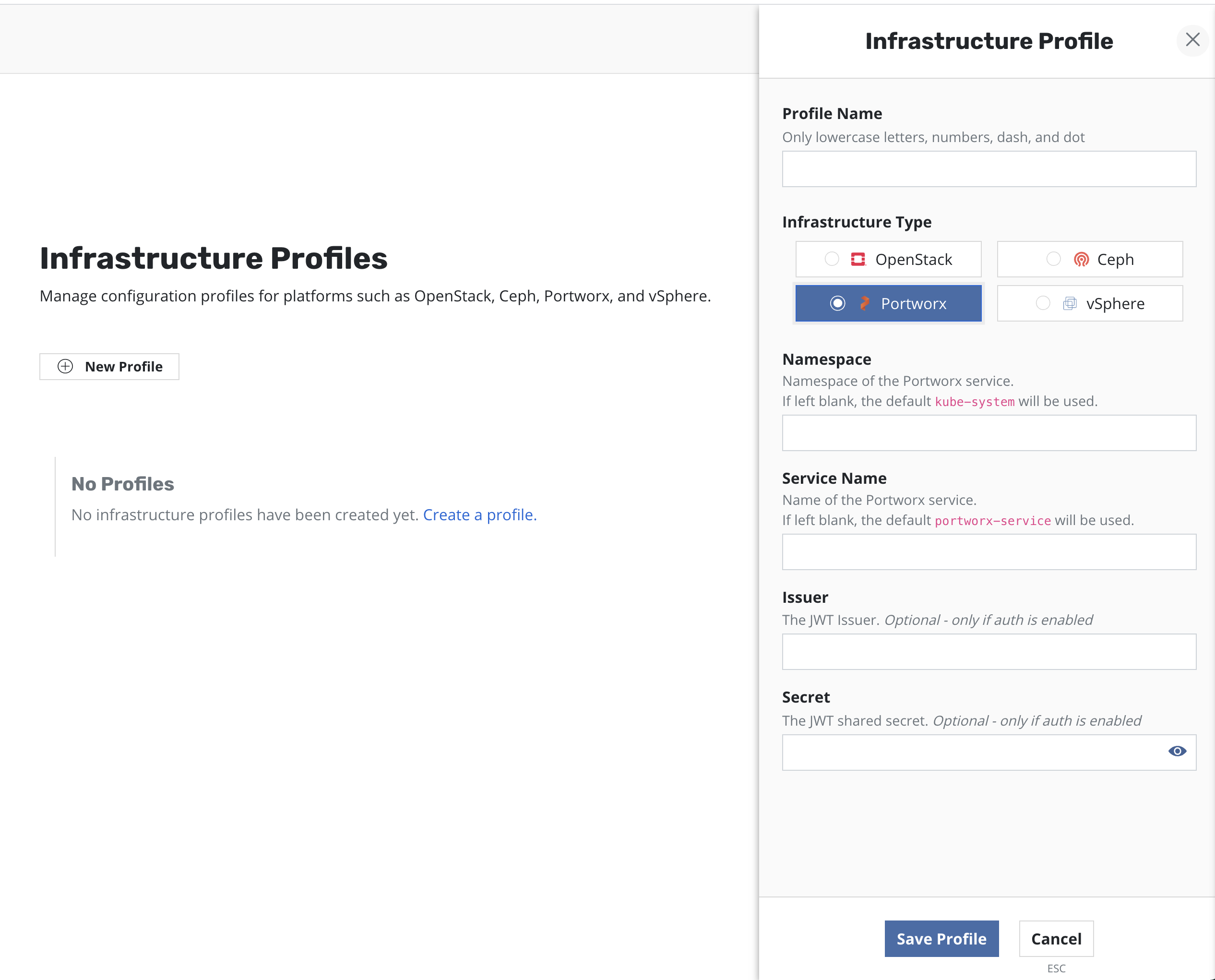

Portworx¶

Apart from CSI-level support, K10 also directly integrates with the Portworx storage platform.

To enable K10 to take snapshots and restore volumes from Portworx, an infrastructure profile must be created from the settings menu.

The Namespace and Service Name fields are used to determine the

Portworx endpoint. If these fields are left blank the Portworx defaults of

kube-system and portworx-service will be used respectively.
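The defaulting rule can be mirrored in a script, for example to confirm that the service K10 will look for actually exists. The helper below is a hypothetical illustration, not part of K10: it applies the same defaults to two optional arguments and prints the result as namespace/service.

```shell
# Hypothetical helper: applies the Portworx defaults described above when the
# namespace ($1) or service name ($2) is empty or omitted.
portworx_service_ref() {
  namespace=${1:-kube-system}
  service=${2:-portworx-service}
  printf '%s/%s\n' "$namespace" "$service"
}

# Example against a live cluster (left commented out):
# ref=$(portworx_service_ref "" "")
# kubectl get service "${ref#*/}" --namespace "${ref%%/*}"
```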

In an authorization-enabled Portworx setup, the Issuer and Secret

fields must be set.

The Issuer must represent the JWT issuer. The Secret is the JWT shared

secret, which is held in the Portworx environment variable

PORTWORX_AUTH_JWT_SHAREDSECRET. Refer to Portworx Security

for more information.