etcd Backup (Kubeadm)

Assuming the Kubernetes cluster was set up through kubeadm, the etcd pods will be running in the kube-system namespace. Before taking a backup of the etcd cluster, a Secret containing details about the authentication mechanism used by etcd needs to be created, either in a new temporary namespace or in an existing one. With kubeadm, etcd is likely to have been deployed using TLS-based authentication. A temporary namespace and a Secret to access etcd can be created by running the following commands:

$ kubectl create namespace etcd-backup
namespace/etcd-backup created

$ kubectl create secret generic etcd-details \
    --from-literal=cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --from-literal=cert=/etc/kubernetes/pki/etcd/server.crt \
    --from-literal=endpoints=https://127.0.0.1:2379 \
    --from-literal=key=/etc/kubernetes/pki/etcd/server.key \
    --from-literal=etcdns=kube-system \
    --from-literal=labels=component=etcd,tier=control-plane \
    --namespace etcd-backup

Note

If the correct paths to the server key and certificates are not provided, backups will fail. These paths can be discovered from the command that runs inside the etcd pod, either by describing the pod or by looking into the static pod manifest. The values for the etcdns and labels keys should be the namespace where the etcd pods are running and the labels of the etcd pods, respectively.
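As a rough sketch of the manifest lookup described in the note, the snippet below prints selected etcd flags from a static pod manifest. The helper name `etcd_flag` is hypothetical, and the default manifest location `/etc/kubernetes/manifests/etcd.yaml` is an assumption based on the usual kubeadm layout; adjust both to the cluster at hand.

```shell
#!/bin/sh
# Print the value of one etcd command-line flag from a static pod manifest.
# Usage: etcd_flag <manifest-path> <flag-name>   (hypothetical helper)
etcd_flag() {
    manifest=$1
    flag=$2
    # Match lines such as "    - --cert-file=/etc/kubernetes/pki/etcd/server.crt"
    # and print only the text after the first "=".
    sed -n "s/^[[:space:]]*-[[:space:]]*--${flag}=\(.*\)\$/\1/p" "$manifest"
}

# Assumed kubeadm default location; pass another path as the first argument.
MANIFEST=${1:-/etc/kubernetes/manifests/etcd.yaml}

if [ -r "$MANIFEST" ]; then
    for flag in trusted-ca-file cert-file key-file listen-client-urls data-dir; do
        printf '%s=%s\n' "$flag" "$(etcd_flag "$MANIFEST" "$flag")"
    done
fi
```

The values of trusted-ca-file, cert-file, and key-file correspond to the cacert, cert, and key entries of the Secret. Because the pattern requires the flag name to follow `--` directly, the peer variants (for example `--peer-cert-file`) are not matched.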

To prevent other workloads in the etcd-backup namespace from being backed up, the etcd-details Secret can be labeled so that only this Secret is included in the backup. Run the following command to label the Secret:

$ kubectl label secret -n etcd-backup etcd-details include=true

Backup

To create the Blueprint resource that Veeam Kasten will use to back up etcd, run the following command:

$ kubectl --namespace kasten-io apply -f \
    https://raw.githubusercontent.com/kanisterio/kanister/0.111.0/examples/etcd/etcd-in-cluster/k8s/etcd-incluster-blueprint.yaml

Alternatively, use the Blueprints page on Veeam Kasten Dashboard to create the Blueprint resource.

Once the Blueprint is created, the Secret that was created above needs to be annotated to instruct Veeam Kasten to use the Blueprint to perform backups on the etcd pod. The following command demonstrates how to annotate the Secret with the name of the Blueprint that was created earlier.

$ kubectl annotate secret -n etcd-backup etcd-details kanister.kasten.io/blueprint='etcd-blueprint'

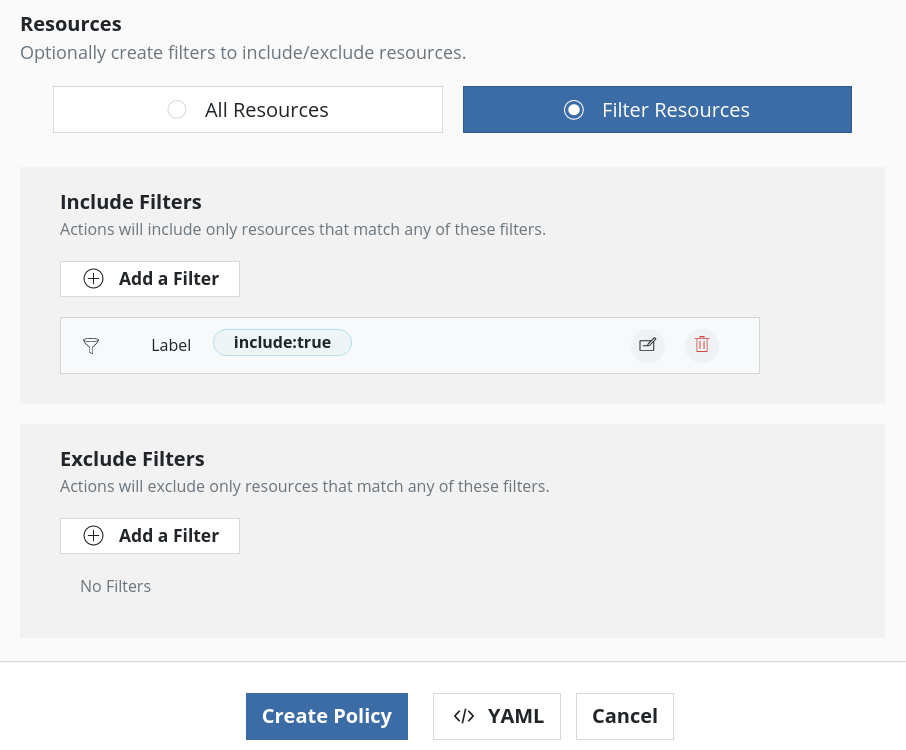

Once the Secret is annotated, use Veeam Kasten to backup etcd using the new namespace. If the Secret is labeled, as mentioned in one of the previous steps, while creating the policy just that Secret can be included in the backup by adding resource filters like below:

Note

The backup location of etcd can be found by looking at the Kanister artifact of the created restore point.

Restore

To restore the etcd backup, log in to the host where the etcd pod is running (most likely a Kubernetes control plane node). Obtain the restore path from the artifact details of the backup action on the Veeam Kasten dashboard, and download the snapshot to a known location on that host (e.g., /tmp/snapshot-pre-boot.db, the path used by the restore command below). How the snapshot is downloaded depends on the backup storage target in use. For example, if AWS S3 was used as object storage, the AWS CLI will be needed to obtain the backup.
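For the S3 case, the download might look like the sketch below. The function name `download_snapshot` and the `DRY_RUN` switch are hypothetical, the bucket and object path must be taken from the artifact details, and the sketch assumes the artifact is stored as a plain object that `aws s3 cp` can fetch directly.

```shell
#!/bin/sh
# Download an etcd snapshot from S3 to the etcd host.
# Usage: download_snapshot <bucket> <object-path> [destination]
# Bucket and object path are placeholders: use the values shown in the
# artifact details of the backup action.
download_snapshot() {
    bucket=$1
    object_path=$2
    # Default destination matches the path used by the restore command.
    dest=${3:-/tmp/snapshot-pre-boot.db}
    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Only show what would be run, without contacting S3.
        echo "aws s3 cp s3://${bucket}/${object_path} ${dest}"
    else
        aws s3 cp "s3://${bucket}/${object_path}" "$dest"
    fi
}
```

Setting `DRY_RUN=1` before calling the function prints the command for review instead of running it, which can help confirm the path before any data is transferred.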

Once the snapshot is downloaded from the backup target, use the etcdctl CLI tool to restore it to a specific location on the host, for example /var/lib/etcd-from-backup. The following command can be used to restore the etcd backup:

$ ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    --data-dir="/var/lib/etcd-from-backup" \
    --initial-cluster="master=https://127.0.0.1:2380" \
    --name="master" \
    --initial-advertise-peer-urls="https://127.0.0.1:2380" \
    --initial-cluster-token="etcd-cluster-1" \
    snapshot restore /tmp/snapshot-pre-boot.db

The values for all of the above flags can be discovered from the etcd pod manifest (static pod). The two important flags are --data-dir and --initial-cluster-token. --data-dir is the directory where etcd stores its data, and --initial-cluster-token defines the token that new members use to join this etcd cluster.

Once the backup is restored into a new directory (e.g., /var/lib/etcd-from-backup), the etcd manifest (static pod) needs to be updated so that its data directory points to this new directory, and --initial-cluster-token=etcd-cluster-1 needs to be added to the etcd command arguments. In addition, the volumes and volumeMounts fields should be changed to point to the new data directory that the backup was restored to.
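The data directory part of that manifest change can be sketched as follows. The helper name `retarget_data_dir` is hypothetical, and the sketch assumes the kubeadm-style layout in which the old directory appears at the end of the --data-dir, mountPath, and hostPath path lines. It does not add --initial-cluster-token, which still has to be added to the command arguments separately.

```shell
#!/bin/sh
# Write a copy of an etcd static pod manifest in which every line ending in
# the old data directory ends in the new one instead.
# Usage: retarget_data_dir <src-manifest> <dst-manifest> <old-dir> <new-dir>
retarget_data_dir() {
    src=$1
    dst=$2
    old=$3
    new=$4
    # Anchor on end of line so that longer paths which merely start with the
    # old directory (e.g. /var/lib/etcd-from-backup) are left untouched.
    # Assumes neither directory contains "#" or regex metacharacters.
    sed "s#${old}\$#${new}#" "$src" > "$dst"
}
```

Writing to a separate file, for instance somewhere under /tmp, allows the result to be compared against the original with `diff` before it replaces the manifest that the kubelet watches.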

Multi-Member etcd Cluster

When the cluster is running a multi-member etcd cluster, the same steps described earlier can be followed to restore it, with some minor changes. As mentioned in the official etcd documentation, all the members of an etcd cluster can be restored from the same snapshot.

Among the leader nodes, choose one to use as the restore node and stop the static pods on all other leader nodes. After making sure that the static pods have been stopped on the other leader nodes, follow the previous restore step on those nodes sequentially.

The restore command from the previous example needs to be adjusted, as described after it, before it is run on the other leader nodes:

$ ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/server.crt \
    --key=/etc/kubernetes/pki/etcd/server.key \
    --data-dir="/var/lib/etcd-from-backup" \
    --initial-cluster="master=https://127.0.0.1:2380" \
    --name="master" \
    --initial-advertise-peer-urls="https://127.0.0.1:2380" \
    --initial-cluster-token="etcd-cluster-1" \
    snapshot restore /tmp/snapshot-pre-boot.db

The name of the host for the flags --initial-cluster and --name should be changed based on the host (leader) on which the command is being run.
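One way to keep the host-specific flags consistent across leader nodes is to generate them from the member name, as in the sketch below. The helper name `member_flags` is hypothetical. The peer URL defaults to the value used in the command above; overriding it per host is an assumption, not something this procedure requires.

```shell
#!/bin/sh
# Print the host-specific etcdctl restore flags for one etcd member,
# one flag per line. Nothing is executed.
# Usage: member_flags <member-name> [peer-url]
member_flags() {
    name=$1
    # Default peer URL is the one used in the restore command above.
    peer_url=${2:-https://127.0.0.1:2380}
    printf '%s\n' "--name=${name}"
    printf '%s\n' "--initial-cluster=${name}=${peer_url}"
    printf '%s\n' "--initial-advertise-peer-urls=${peer_url}"
}
```

For example, `member_flags master-2` prints the three flags with `master-2` substituted for `master`; the name `master-2` is only an illustration, and the actual member name should be taken from the --name flag in that host's etcd static pod manifest.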

To learn more about how etcd backup and restore work, refer to the Kubernetes documentation.

In response to the change in the static pod manifest, the kubelet will automatically recreate the etcd pod with the cluster state that was captured when the etcd backup was performed.