Generic Storage Backup and Restore

Note

Effective with the release of Kasten K10 6.5.0, currently targeted for Q4 CY2023, Generic Storage Backup will be disabled for all new deployments of Kasten K10, as well as existing deployments when upgraded to 6.5.0 or later. For more details, refer to this page.

Applications are often deployed using non-shared storage (e.g., local SSDs) or on systems where K10 does not currently support the underlying storage provider. To protect data in these scenarios, K10 with Kanister lets you back up, restore, and migrate this application data efficiently and transparently, with only minor application modifications.

While a complete example is provided below, the only changes needed are the addition of a sidecar to your application deployment that can mount the application data volume and an annotation that requests generic backup.

Using Sidecars

The sidecar can be added either by leveraging K10's sidecar injection feature or by manually patching the resource as described below.

Enable Kanister Sidecar Injection

K10 implements a Mutating Webhook Server which mutates workload objects by injecting a Kanister sidecar into the workload when the workload is created. The Mutating Webhook Server also adds the k10.kasten.io/forcegenericbackup annotation to the targeted workloads to enforce generic backup. By default, the sidecar injection feature is disabled. To enable this feature, the following options need to be used when installing K10 via the Helm chart:

--set injectKanisterSidecar.enabled=true

Once enabled, Kanister sidecar injection applies to all workloads in all namespaces. To perform sidecar injection on workloads only in specific namespaces, set the namespaceSelector labels using the following option:

--set-string injectKanisterSidecar.namespaceSelector.matchLabels.key=value

When namespaceSelector labels are set, the Kanister sidecar is injected only into workloads created in namespaces whose labels match the namespaceSelector labels.

Similarly, to inject the sidecar for only specific workloads, the objectSelector option can be set as shown below:

--set-string injectKanisterSidecar.objectSelector.matchLabels.key=value

Warning

It is recommended to add at least one namespaceSelector or objectSelector when enabling the injectKanisterSidecar feature. Otherwise, K10 will try to inject a sidecar into every new workload. In the common case, this will lead to undesirable results and potential performance issues.

For example, to inject sidecars into workloads that match the label component: db and are in namespaces that are labeled with k10/injectKanisterSidecar: true, the following options should be added to the K10 Helm install command:

--set injectKanisterSidecar.enabled=true \
--set-string injectKanisterSidecar.objectSelector.matchLabels.component=db \
--set-string injectKanisterSidecar.namespaceSelector.matchLabels.k10/injectKanisterSidecar=true

The labels set with namespaceSelector and objectSelector are combined with a logical AND. If both options are set, a workload receives the sidecar only if its labels match the objectSelector labels AND it is created in a namespace whose labels match the namespaceSelector labels. Similarly, if multiple labels are specified for either namespaceSelector or objectSelector, they all need to match for a sidecar injection to occur.
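The selector options above can be assembled with a small helper. The following is a minimal sketch, not part of K10: selector_flags is a hypothetical function name and tier=backend is an illustrative label. It prints one --set-string flag per label and escapes any "." in a label key, since Helm treats an unescaped dot in a --set key as a path separator.

```shell
#!/bin/sh
# Hypothetical helper: print the Helm flags for one selector.
# $1 is "namespaceSelector" or "objectSelector"; the rest are key=value labels.
selector_flags() {
  kind=$1
  shift
  for label in "$@"; do
    key=${label%%=*}
    value=${label#*=}
    # Escape dots so Helm keeps the label key as a single map key.
    escaped_key=$(printf '%s' "$key" | sed 's/\./\\./g')
    printf '%s\n' "--set-string injectKanisterSidecar.$kind.matchLabels.$escaped_key=$value"
  done
}

# Labels from the example above, plus one illustrative extra label.
selector_flags namespaceSelector k10/injectKanisterSidecar=true
selector_flags objectSelector component=db tier=backend
```

Every flag printed for the same selector adds another label that must match, so passing two objectSelector labels narrows injection to workloads carrying both.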

For the sidecar to choose a security context that can read data from the volume, K10 performs the following checks in order:

If the primary container has a SecurityContext set, it will be used in the sidecar. If there are multiple primary containers, the list of containers will be iterated over and the first one which has a SecurityContext set will be used.

If the workload PodSpec has a SecurityContext set, the sidecar does not need an explicit specification and will automatically use the context from the PodSpec.

If the above criteria are not met, by default, no SecurityContext will be set.
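The order of these checks can be modelled in a few lines of shell. This is only an illustration of the documented order, not K10's implementation: pick_sidecar_security_context is a hypothetical name, and the security contexts are represented as plain strings (empty meaning "not set").

```shell
#!/bin/sh
# Toy model of the documented selection order (not K10 code).
# $1: pod-level SecurityContext, "" if unset.
# Remaining arguments: each primary container's SecurityContext in order, "" if unset.
pick_sidecar_security_context() {
  pod_ctx=$1
  shift
  # Check 1: the first container that has a SecurityContext wins.
  for container_ctx in "$@"; do
    if [ -n "$container_ctx" ]; then
      echo "container: $container_ctx"
      return 0
    fi
  done
  # Check 2: otherwise the sidecar relies on the PodSpec context.
  if [ -n "$pod_ctx" ]; then
    echo "inherited from PodSpec"
    return 0
  fi
  # Check 3: nothing is set.
  echo "none"
}

pick_sidecar_security_context "" "" "runAsUser=1000"
pick_sidecar_security_context "fsGroup=2000" "" ""
pick_sidecar_security_context ""
```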

Note

When the Helm option for providing a Root CA to K10, i.e., cacertconfigmap.name, is set, the Mutating Webhook will create a new ConfigMap in the application namespace, if it does not already exist, to provide the Root CA to the sidecar. This ConfigMap is a copy of the Root CA ConfigMap residing in the K10 namespace.

Note

Sidecar injection for standalone Pods is not currently supported. Refer to the following section to manually add the Kanister sidecar to standalone Pods.

Update the resource manifest

Alternatively, the Kanister sidecar can be added by updating the resource manifest. An example, where /data is used as a sample mount path, can be seen in the specification below. Note that the sidecar must be named kanister-sidecar and the sidecar image version should be pinned to the latest Kanister release.

- name: kanister-sidecar
  image: ghcr.io/kanisterio/kanister-tools:0.96.0
  command: ["bash", "-c"]
  args:
  - "tail -f /dev/null"
  volumeMounts:
  - name: data
    mountPath: /data

The sidecar can also be added to the workload by running the command below. Make sure to specify correct values for the placeholders resource_type, namespace, resource_name, volume-name and volume-mount-path:

$ kubectl patch <resource_type> \
    -n <namespace> \
    <resource_name> \
    --type='json' \
    -p='[{"op": "add", "path": "/spec/template/spec/containers/0", "value": {"name": "kanister-sidecar", "image": "ghcr.io/kanisterio/kanister-tools:0.96.0", "command": ["bash", "-c"], "args": ["tail -f /dev/null"], "volumeMounts": [{"name": "<volume-name>", "mountPath": "<volume-mount-path>"}] } }]'

Note

After injecting the sidecar manually, workload pods will be recreated. If the deployment strategy used for the workload is RollingUpdate, the workload should be scaled down and scaled up so that the volumes are mounted into the newly created pods.
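The scale-down and scale-up described in this note might look like the following sketch. bounce_workload is a hypothetical helper name; it assumes the workload type supports kubectl scale and that you know the replica count to return to.

```shell
#!/bin/sh
# Hypothetical helper: scale a workload to zero and back so that the
# newly created pods mount the volumes.
# Arguments: <resource_type> <namespace> <resource_name> <replicas>
bounce_workload() {
  kubectl scale "$1" "$3" --namespace="$2" --replicas=0 &&
    kubectl scale "$1" "$3" --namespace="$2" --replicas="$4"
}
```

For example, bounce_workload deployment <namespace> <resource_name> 1 returns a single-replica Deployment to its original size. Depending on the storage, you may want to confirm that the old pods have terminated before relying on the new ones.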

Once the above change is made, K10 will be able to automatically extract data and, using its data engine, efficiently deduplicate data and transfer it into an object store or NFS file store.

If you have multiple volumes used by your pod, you simply need to mount them all within this sidecar container. There is no naming requirement on the mount path as long as they are unique.
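When several volumes are involved, hand-editing the JSON patch becomes error-prone. The sketch below generates the same patch document as the kubectl patch command above, with one volumeMounts entry per argument. sidecar_patch is a hypothetical helper, and the logs volume is purely illustrative. It rejects duplicate mount paths and assumes names and paths contain no spaces or quotes.

```shell
#!/bin/sh
# Hypothetical helper: build the JSON patch that adds the kanister-sidecar container.
# Each argument is <volume-name>:<mount-path>; mount paths must be unique.
sidecar_patch() {
  mounts=""
  seen=" "
  for pair in "$@"; do
    name=${pair%%:*}
    path=${pair#*:}
    case "$seen" in
      *" $path "*) echo "duplicate mount path: $path" >&2; return 1 ;;
    esac
    seen="$seen$path "
    # Separate entries with ", " once the list is non-empty.
    mounts="$mounts${mounts:+, }{\"name\": \"$name\", \"mountPath\": \"$path\"}"
  done
  printf '%s\n' "[{\"op\": \"add\", \"path\": \"/spec/template/spec/containers/0\", \"value\": {\"name\": \"kanister-sidecar\", \"image\": \"ghcr.io/kanisterio/kanister-tools:0.96.0\", \"command\": [\"bash\", \"-c\"], \"args\": [\"tail -f /dev/null\"], \"volumeMounts\": [$mounts]}}]"
}

# Example: two volumes mounted at distinct paths.
sidecar_patch data:/data logs:/logs
```

The output can be passed to the patch command shown earlier, for example with -p="$(sidecar_patch data:/data logs:/logs)".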

Note that a backup operation can take up to 800 MB of memory for some larger workloads. To ensure the pod containing the kanister-sidecar is scheduled on a node with sufficient memory for a particularly intensive workload, you can add a resource request to the container definition.

resources:
  requests:
    memory: 800Mi
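If the sidecar is already part of a Deployment, the same request can be applied without editing the manifest. This is a sketch that uses kubectl set resources; request_sidecar_memory is a hypothetical helper name, and it assumes the workload is a Deployment whose pod template contains the kanister-sidecar container.

```shell
#!/bin/sh
# Hypothetical helper: add a memory request to the kanister-sidecar container.
# Arguments: <namespace> <deployment-name> [memory]; memory defaults to 800Mi.
request_sidecar_memory() {
  kubectl set resources deployment "$2" --namespace="$1" \
    --containers=kanister-sidecar --requests="memory=${3:-800Mi}"
}
```

Changing the pod template this way triggers a new rollout of the Deployment.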

Generic Backup Annotation

Generic backups can be requested by adding the k10.kasten.io/forcegenericbackup annotation to the workload as shown in the example below.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
  labels:
    app: demo
  annotations:
    k10.kasten.io/forcegenericbackup: "true"

The following is a kubectl example to add the annotation to a running deployment:

# Add annotation to force generic backups

$ kubectl annotate deployment <deployment-name> k10.kasten.io/forcegenericbackup="true" --namespace=<namespace-name>

Even when snapshot support from the storage provider is available, generic backups can be enforced by adding the k10.kasten.io/forcegenericbackup annotation to the workload as described above.
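To confirm that the annotation is present on a workload, its value can be read back with a JSONPath query. The sketch below assumes nothing beyond standard kubectl; generic_backup_annotation is a hypothetical helper name. The dots in the annotation key are escaped because kubectl's JSONPath otherwise treats them as field separators.

```shell
#!/bin/sh
# Hypothetical helper: print the forcegenericbackup annotation value of a
# workload (prints nothing if the annotation is not set).
# Arguments: <resource_type> <namespace> <resource_name>
generic_backup_annotation() {
  kubectl get "$1" "$3" --namespace="$2" \
    -o jsonpath='{.metadata.annotations.k10\.kasten\.io/forcegenericbackup}'
}
```

For the example Deployment above, generic_backup_annotation deployment <namespace-name> demo-app would be expected to print true once the annotation has been applied.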

Finally, note that the Kanister sidecar and Location profile must both be present for generic backups to work.

OpenShift Container Platform (OCP) v4.11 and newer

OCP introduced more restrictive default SCCs in the 4.11 release as part of its Pod Security Admission changes. The change affects the ability to perform rootless Generic Storage Backup, which has been the default behavior since K10 5.5.8.

To use Generic Storage Backup with OCP 4.11 and above, the following capabilities must be allowed:

FOWNER

CHOWN

DAC_OVERRIDE

The previous version of the restricted SCC can be used as a template. Change the allowedCapabilities field as follows:

allowedCapabilities:
- CHOWN
- DAC_OVERRIDE
- FOWNER

End-to-End Example

The below section provides a complete end-to-end example of how to extend your application to support generic backup and restore. A dummy application is used below but it should be straightforward to extend this example.

Prerequisites

Make sure you have installed K10 with injectKanisterSidecar enabled.

(Optional) namespaceSelector labels are set for injectKanisterSidecar.

injectKanisterSidecar can be enabled by passing the following flags while installing the K10 Helm chart:

...
--set injectKanisterSidecar.enabled=true \
--set-string injectKanisterSidecar.namespaceSelector.matchLabels.k10/injectKanisterSidecar=true # Optional

Deploy the application

The following specification contains a complete example of how to exercise generic backup and restore functionality. It consists of an application Deployment that uses a Persistent Volume Claim (mounted internally at /data) for storing data.

Saving the below specification as a file, deployment.yaml, is recommended for reuse later.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
  labels:
    app: demo
    pvc: demo
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-app
  labels:
    app: demo
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
      - name: demo-container
        image: alpine:3.7
        resources:
          requests:
            memory: 256Mi
            cpu: 100m
        command: ["tail"]
        args: ["-f", "/dev/null"]
        volumeMounts:
        - name: data
          mountPath: /data
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: demo-pvc

Create a namespace:

$ kubectl create namespace <namespace>

If injectKanisterSidecar.namespaceSelector labels are set while installing K10, add the labels to the namespace to match the namespaceSelector:

$ kubectl label namespace <namespace> k10/injectKanisterSidecar=true

Deploy the above application as follows:

# Deploying in a specific namespace
$ kubectl apply --namespace=<namespace> -f deployment.yaml

Check status of deployed application:

List pods in the namespace. The demo-app pods can be seen created with two containers.

# List pods
$ kubectl get pods --namespace=<namespace> | grep demo-app
# demo-app-56667f58dc-pbqqb 2/2 Running 0 24s

Describe the pod and verify the kanister-sidecar container is injected with the same volumeMounts.

volumeMounts:
- name: data
  mountPath: /data
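The check for the injected container can also be scripted. The following sketch lists each pod's container names with a JSONPath query and reports whether kanister-sidecar is among them. report_sidecars is a hypothetical helper name, and the app=demo selector matches the labels of the example Deployment above.

```shell
#!/bin/sh
# Hypothetical helper: report, per pod, whether a kanister-sidecar container exists.
# Arguments: <namespace> <label-selector>
report_sidecars() {
  kubectl get pods --namespace="$1" -l "$2" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.containers[*].name}{"\n"}{end}' |
    while read -r pod containers; do
      # Pad with spaces so only whole container names match.
      case " $containers " in
        *" kanister-sidecar "*) echo "$pod: sidecar present" ;;
        *) echo "$pod: sidecar missing" ;;
      esac
    done
}
```

For the demo application, this could be invoked as report_sidecars <namespace> app=demo.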

Create a Location Profile

If you haven't done so already, create a Location profile with the appropriate Location and Credentials information from the K10 settings page. Instructions for creating location profiles can be found here.

Warning

Generic storage backup and restore workflows are not compatible with immutable backups. Location profiles with immutable backups enabled can be used with these workflows, but will be treated as non-immutable profiles: the protection period will be ignored, and no point-in-time restore functionality will be provided. Note that using an object-locking bucket in such cases can amplify storage usage without any additional benefit.

Insert Data

The easiest way to insert data into the demo application is to simply copy it in:

# Get pods for the demo application from its namespace

$ kubectl get pods --namespace=<namespace> | grep demo-app

# Copy required data manually into the pod

$ kubectl cp <file-name> <namespace>/<pod>:/data/

# Verify if the data was copied successfully

$ kubectl exec --namespace=<namespace> <pod> -- ls -l /data

Backup Data

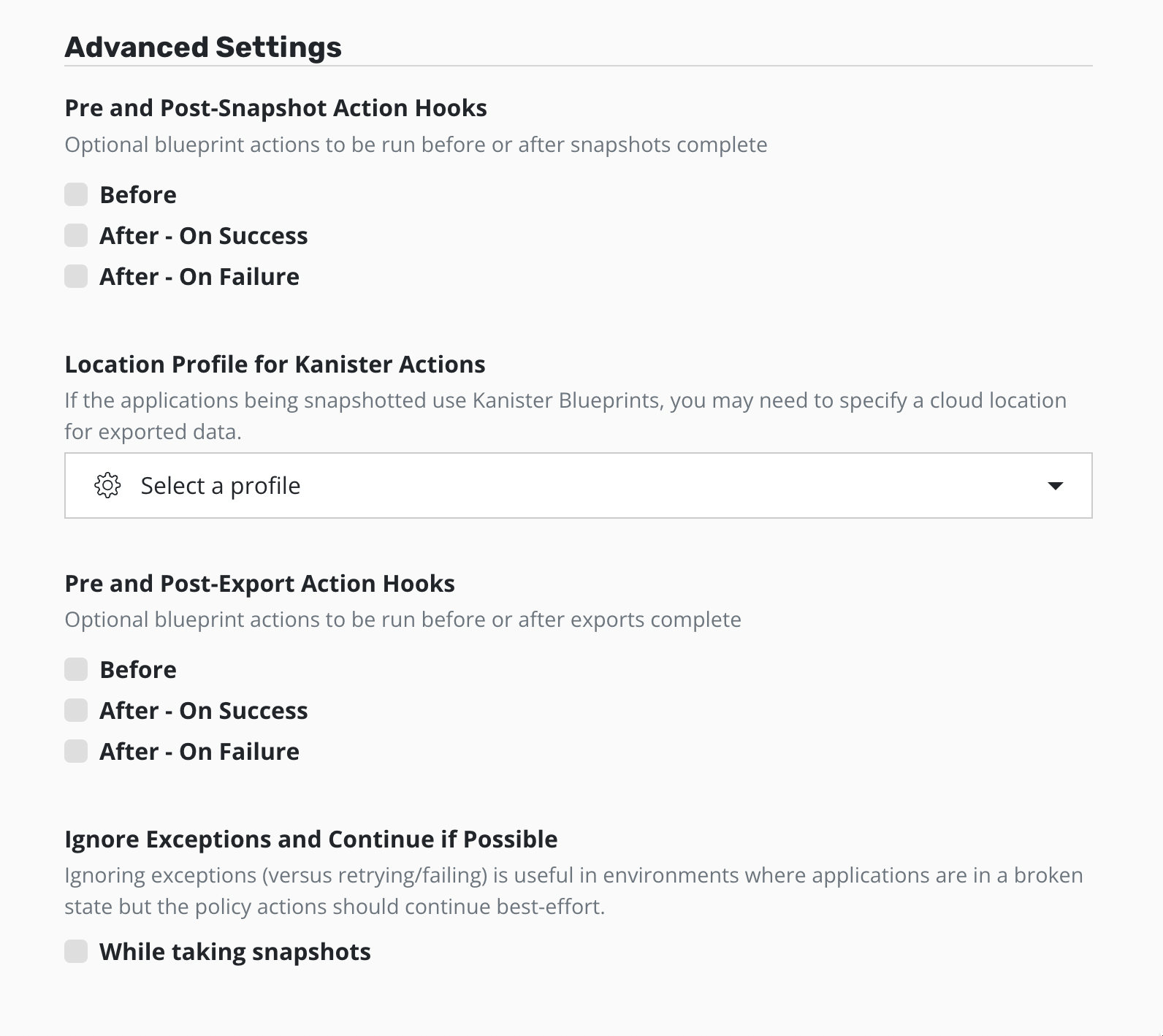

Back up the application data either by creating a Policy or running a Manual Backup from K10. This assumes that the application is running on a system where K10 does not support the provisioned disks (e.g., local storage). Make sure to specify the location profile in the advanced settings for the policy. This is required to perform Kanister operations.

This policy covers an application running in the namespace sampleApp.

apiVersion: config.kio.kasten.io/v1alpha1
kind: Policy
metadata:
  name: sample-custom-backup-policy
  namespace: kasten-io
spec:
  comment: My sample custom backup policy
  frequency: '@daily'
  subFrequency:
    minutes: [30]
    hours: [22,7]
    weekdays: [5]
    days: [15]
  retention:
    daily: 14
    weekly: 4
    monthly: 6
  actions:
  - action: backup
    backupParameters:
      profile:
        name: my-profile
        namespace: kasten-io
  selector:
    matchLabels:
      k10.kasten.io/appNamespace: sampleApp

For complete documentation of the Policy CR, refer to Policy API Type.

Destroy Data

To destroy the data manually, run the following command:

# Using kubectl

$ kubectl exec --namespace=<namespace> <pod> -- rm -rf /data/<file-name>

Alternatively, the application and the PVC can be deleted and recreated.

Restore Data

Restore the data using K10 by selecting the appropriate restore point.

Verify Data

After the restore, verify that the data is intact. One way to do this is to compare MD5 checksums using a tool such as md5 (or md5sum on most Linux distributions).

# MD5 on the original file copied

$ md5 <file-name>

# Copy the restored data back to local env

$ kubectl get pods --namespace=<namespace> | grep demo-app

$ kubectl cp <namespace>/<pod>:/data/<file-name> <new-filename>

# MD5 on the new file

$ md5 <new-filename>

The MD5 checksums should match.
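The comparison can be automated so that it works whether md5sum or md5 is installed. This is a minimal sketch; file_md5 and same_md5 are hypothetical helper names, and the two arguments to same_md5 are the original file and the copy retrieved from the pod.

```shell
#!/bin/sh
# Hypothetical helper: print the MD5 digest of a file using whichever tool exists.
file_md5() {
  if command -v md5sum >/dev/null 2>&1; then
    md5sum "$1" | cut -d ' ' -f 1
  else
    md5 -q "$1"
  fi
}

# Hypothetical helper: succeed only if both files have the same, non-empty digest.
same_md5() {
  original=$(file_md5 "$1") || return 1
  restored=$(file_md5 "$2") || return 1
  [ -n "$original" ] && [ "$original" = "$restored" ]
}
```

A typical call is same_md5 <file-name> <new-filename> && echo "restore verified".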

Generic Storage Backup and Restore on Unmounted PVCs

Generic Storage Backup and Restore on unmounted PVCs can be enabled by adding the k10.kasten.io/forcegenericbackup annotation to the StorageClass with which the volumes have been provisioned.
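As a sketch, the StorageClass can be annotated in the same way as a workload. The value true is an assumption carried over from the workload annotation shown earlier, since this section does not state the value, and annotate_storageclass is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: annotate a StorageClass to request generic backup
# for the volumes provisioned with it.
# Argument: <storage-class-name>
# The value "true" is assumed by analogy with the workload annotation.
annotate_storageclass() {
  kubectl annotate storageclass "$1" k10.kasten.io/forcegenericbackup=true
}
```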