K10 Disaster Recovery

K10 Disaster Recovery (DR) aims to protect K10 from underlying infrastructure failures. In particular, this feature provides the ability to recover the K10 platform from a variety of disasters, such as the accidental deletion of K10, failure of the underlying storage that K10 uses for its catalog, or even the accidental destruction of the Kubernetes cluster on which K10 is deployed.

Overview

K10 enables Disaster Recovery with the help of an internal policy that backs up its own data stores and stores them in an object storage bucket or an NFS file storage location configured using a Location Profile.

External Storage Configuration

To enable K10 Disaster Recovery, a Location Profile needs to be configured. The profile points to an object storage bucket or an NFS file storage location where data from K10's internal data stores is saved. The cluster needs write permissions to this location.

Enabling K10 Disaster Recovery

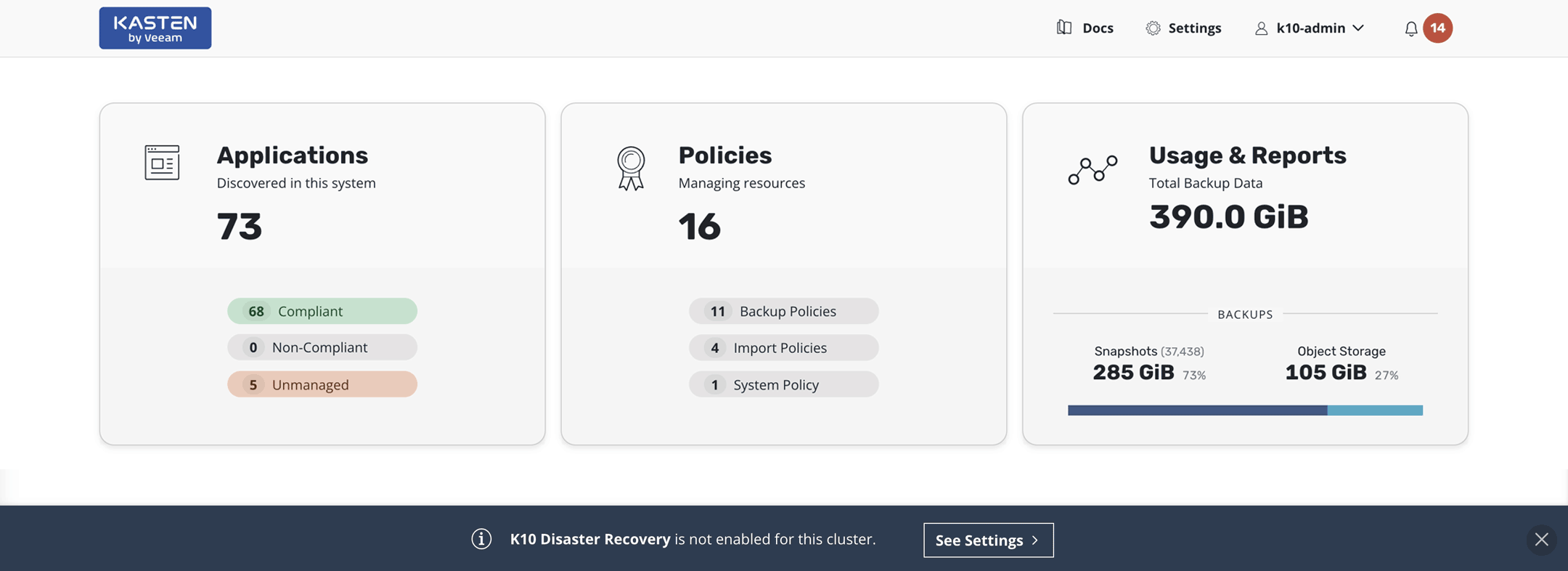

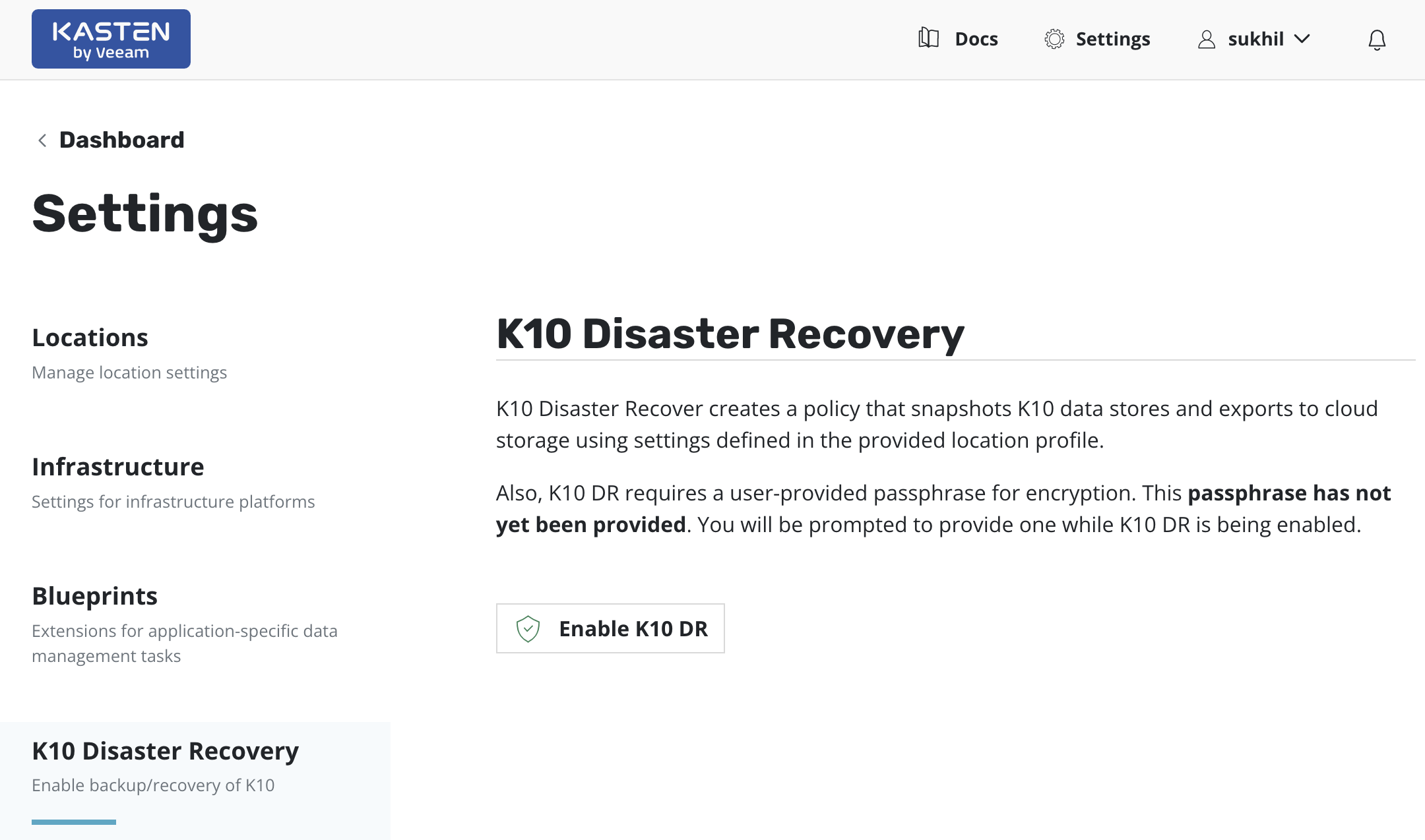

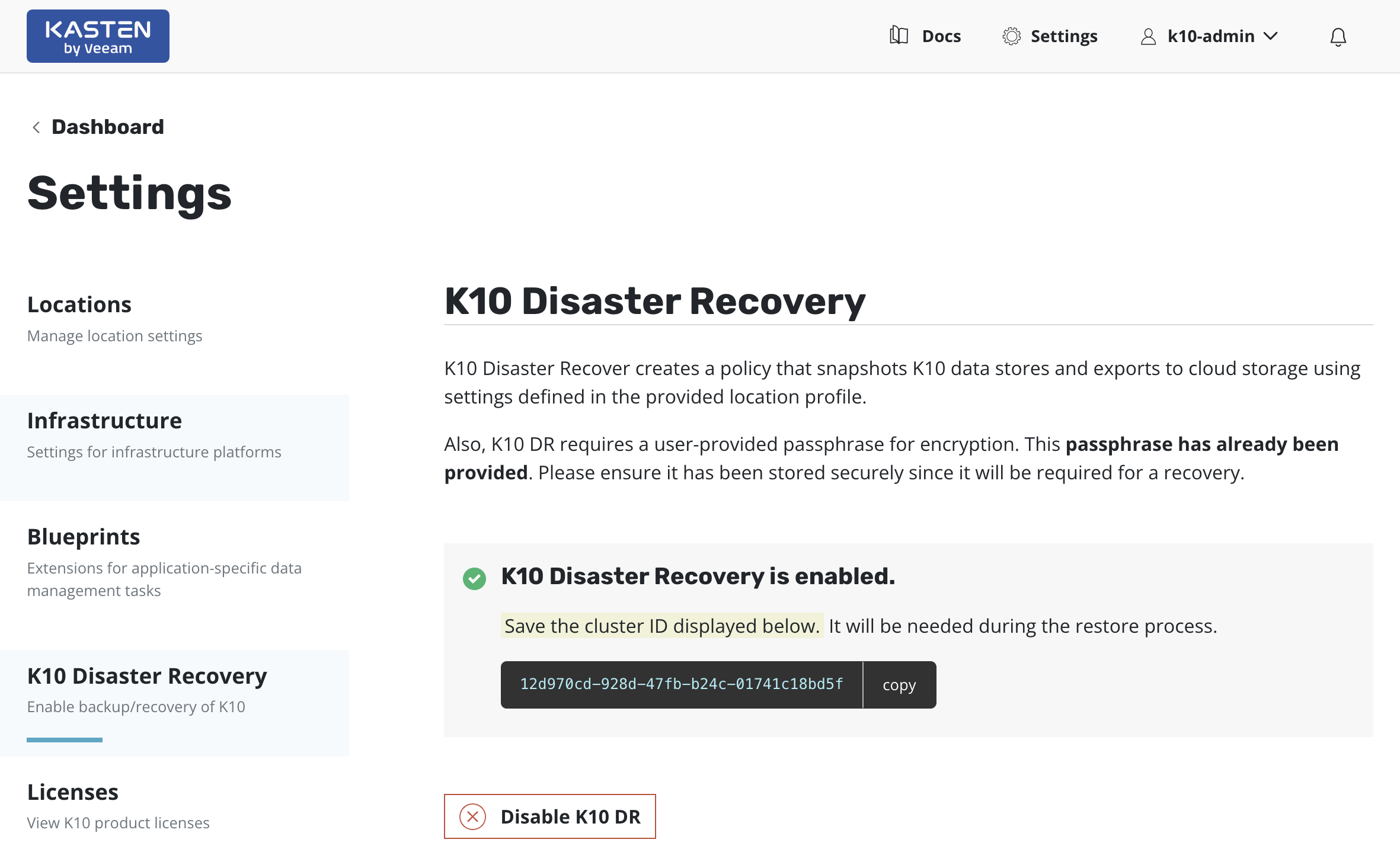

K10 Disaster Recovery settings can be accessed from the Settings icon in the top-right corner of the dashboard or, for a new install, via the prompt at the bottom of the dashboard.

From the Settings page, select K10 Disaster Recovery and then click the Enable K10 DR button to start the process.

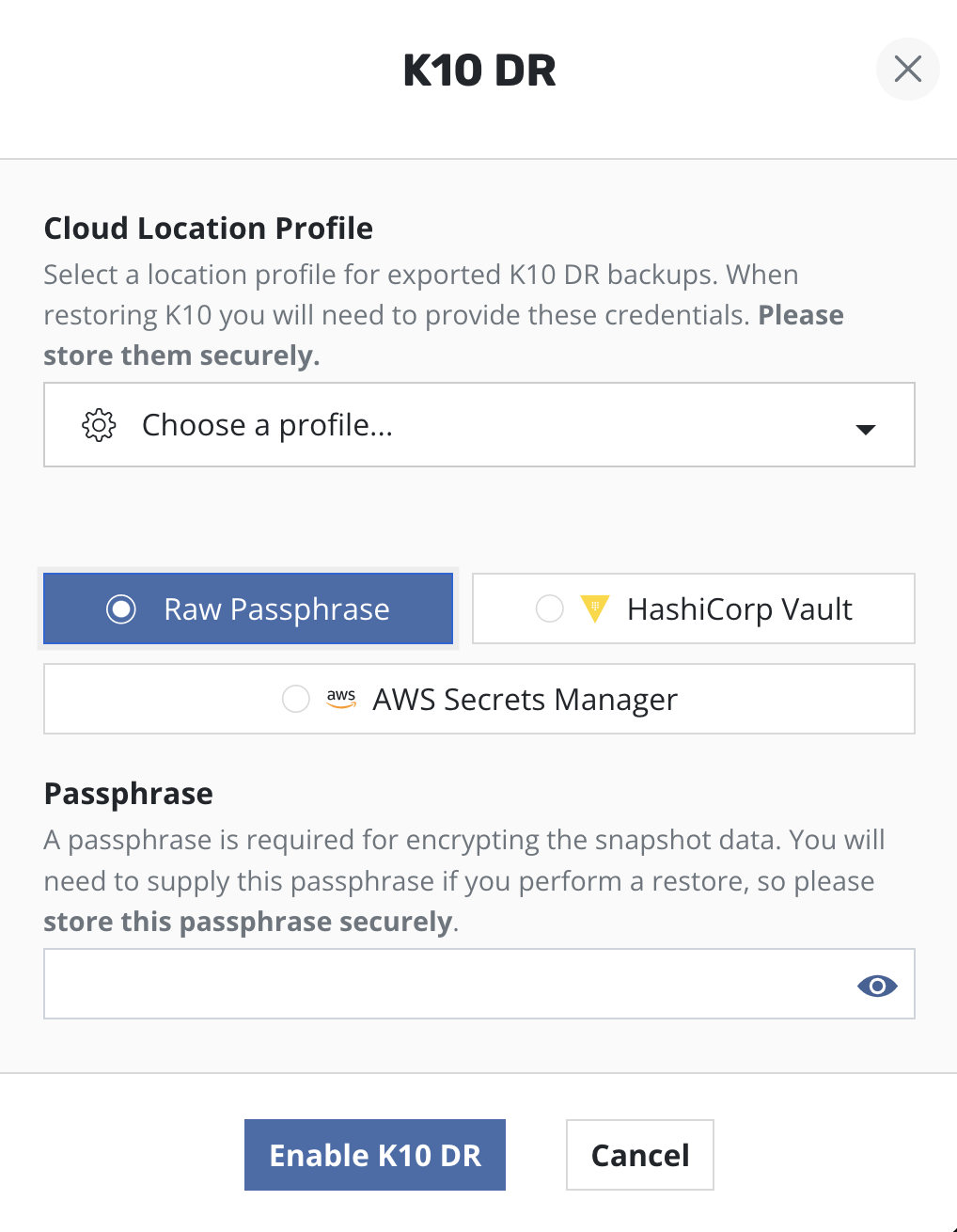

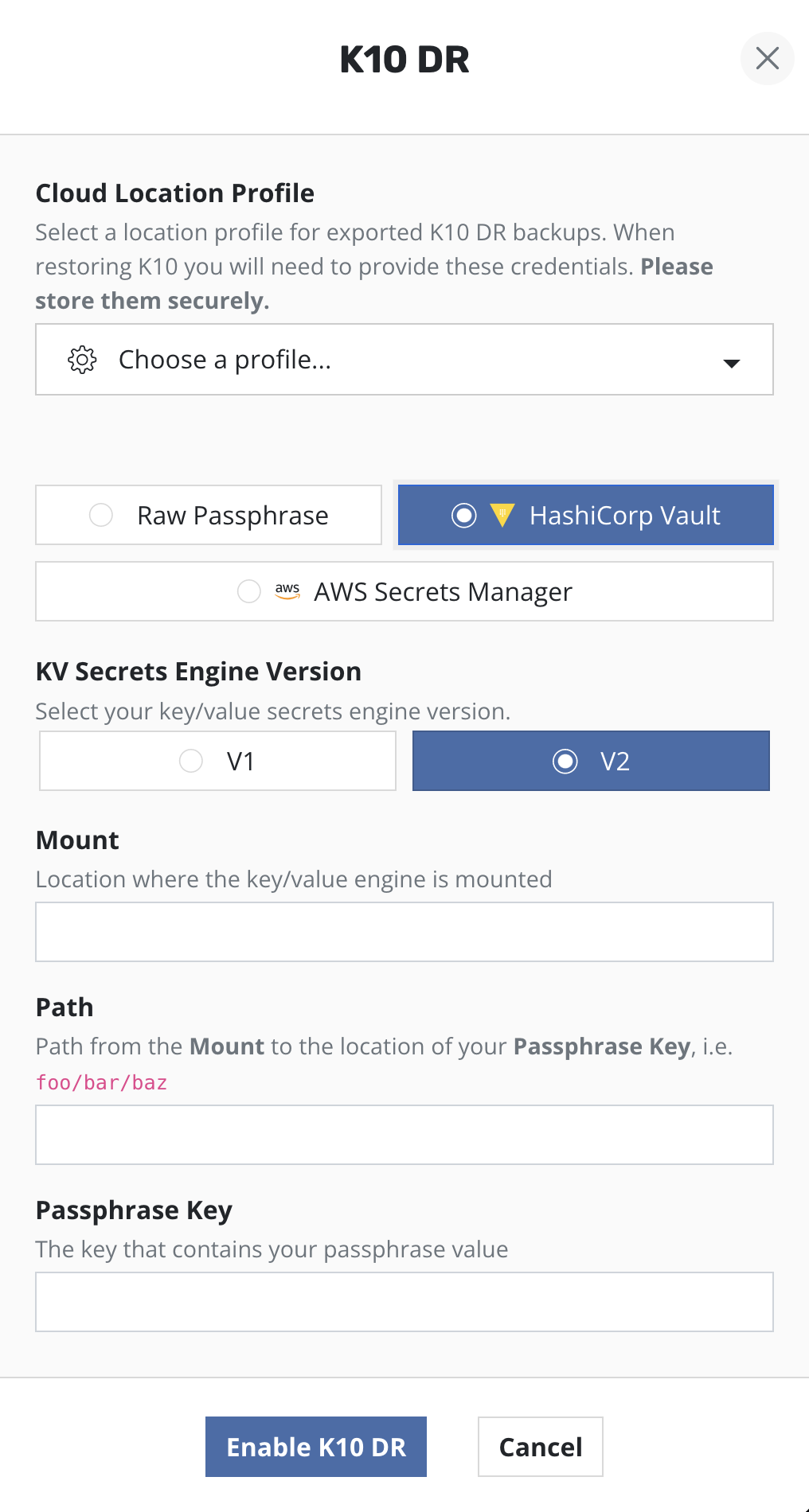

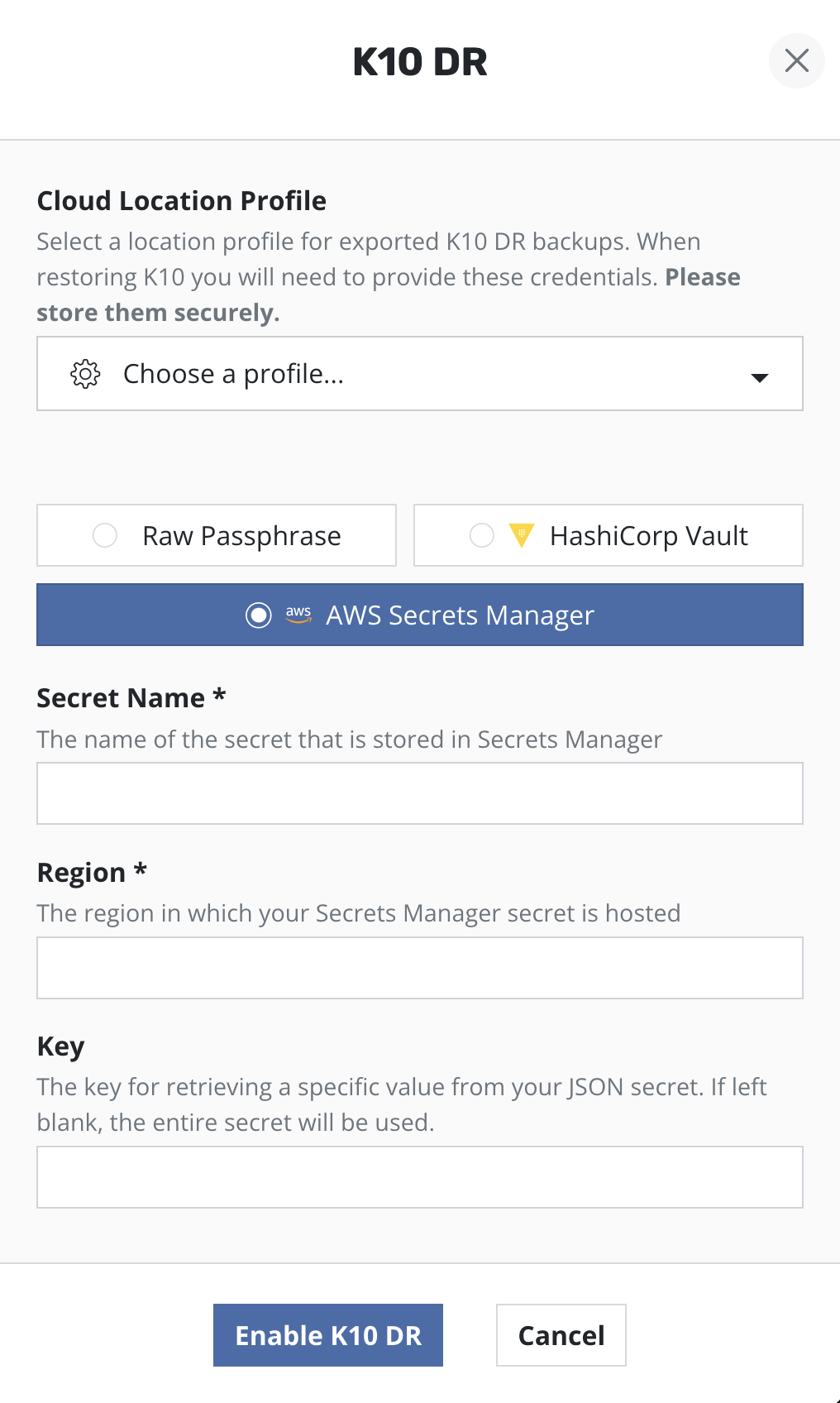

Enabling K10 Disaster Recovery requires selecting a Location Profile for the exported K10 Disaster Recovery backups and providing a passphrase for encrypting the snapshot data.

The passphrase can be provided as a raw string or as a reference to a secret in HashiCorp Vault or AWS Secrets Manager.

Enable Disaster Recovery by clicking on the Enable K10 DR button.

Note

If providing a raw passphrase, save it securely outside the cluster.

Note

Using HashiCorp Vault requires that K10 is configured to access Vault.

Note

Using AWS Secrets Manager requires that an AWS Infrastructure Profile exists with adequate permissions.
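If you choose a raw passphrase, you can generate a strong one locally before filling in the form. The following is a minimal sketch. It assumes a shell with `head`, `base64`, and `/dev/urandom` available. The function name `generate_dr_passphrase` is hypothetical and not part of K10.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of K10): print a random passphrase made
# from 32 bytes of /dev/urandom, base64-encoded on a single line.
generate_dr_passphrase() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}
```

For example, run `generate_dr_passphrase` and paste the 44-character output into the passphrase field. Store the same value in a password manager or another location outside the cluster.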

Cluster ID

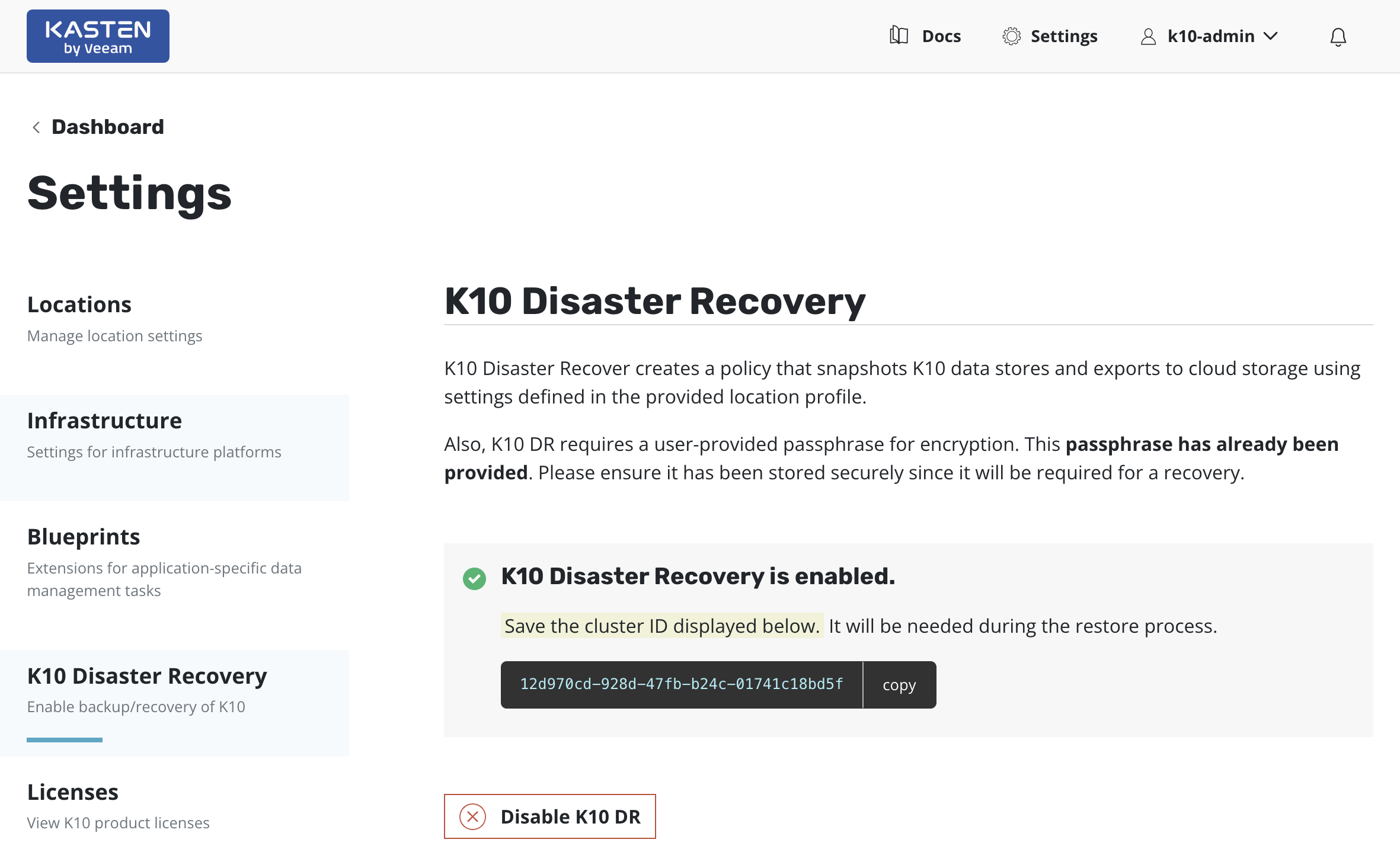

A confirmation message with the cluster ID will be displayed when Disaster Recovery is enabled. This ID is used as a prefix to the object storage or NFS file storage location where the exported backups of K10's data stores are saved.

Note

Save the cluster ID in a safe place; it is required to recover K10 from a disaster.

The cluster ID value can also be retrieved with the following kubectl command:

# Extract UUID of the `default` namespace

$ kubectl get namespace default -o jsonpath="{.metadata.uid}{'\n'}"
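The same lookup can be wrapped so that the value is checked before you record it. This is a sketch only. It assumes the UID has the usual 8-4-4-4-12 hexadecimal UUID layout. The helper names `is_uuid` and `get_cluster_id` are hypothetical. The `KUBECTL` variable exists only so the call can be previewed with `KUBECTL=echo`.

```shell
#!/usr/bin/env bash
# Sketch: read the cluster ID (UID of the `default` namespace) and check
# that it looks like a UUID before saving it outside the cluster.

# Returns 0 if $1 has the 8-4-4-4-12 hexadecimal UUID layout.
is_uuid() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}

# Prints the cluster ID, or fails if kubectl fails or the value is not a UUID.
get_cluster_id() {
  local id
  id="$("${KUBECTL:-kubectl}" get namespace default -o jsonpath='{.metadata.uid}')" || return 1
  if ! is_uuid "$id"; then
    echo "unexpected cluster ID: '$id'" >&2
    return 1
  fi
  printf '%s\n' "$id"
}
```

For example: `CLUSTER_ID="$(get_cluster_id)" && printf '%s\n' "$CLUSTER_ID"`.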

K10 Disaster Recovery Policy

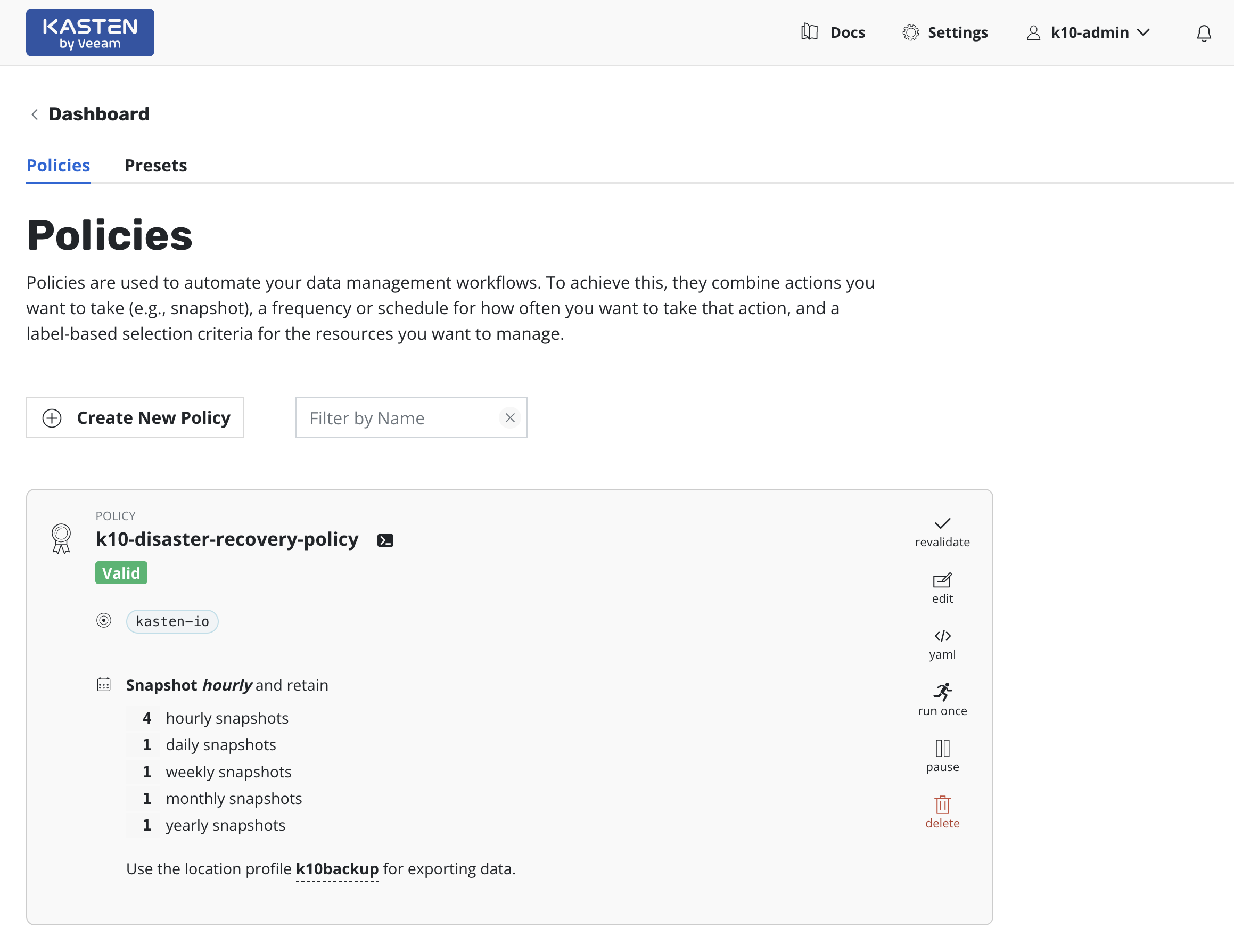

A policy called k10-disaster-recovery-policy, which implements K10 Disaster Recovery, is automatically created when Disaster Recovery is enabled. This policy can be viewed from the Policies page.

Click Run Once on the k10-disaster-recovery-policy to start a backup.

The data exported by K10 for Disaster Recovery purposes is encrypted using AES-256-GCM.

Warning

After enabling K10 Disaster Recovery, it is essential that you copy and save the following to successfully recover K10 from a disaster:

The cluster ID displayed on the disaster recovery page

The Disaster Recovery passphrase provided above

The object storage bucket or NFS file storage details and their credentials (used in the Location Profile configuration above)

Without this information, K10 Disaster Recovery will not be possible.

Disabling K10 Disaster Recovery

K10 Disaster Recovery can be disabled by clicking the Disable K10 DR button on the K10 Disaster Recovery settings page, which can be accessed from the Settings icon in the top-right corner of the dashboard.

Recovering K10 From a Disaster

Recovering from a K10 backup involves the following sequence of actions:

Create a Kubernetes Secret, k10-dr-secret, using the passphrase provided while enabling Disaster Recovery

Install a fresh K10 instance in the same namespace as the above Secret

Provide bucket information and credentials for the object storage location or NFS file storage location where previous K10 backups are stored

Restore the K10 backup

Uninstall the k10restore instance after recovery (recommended)

Note

If the K10 backup is stored in an NFS file storage location, the same NFS share must be reachable from the recovery cluster and mounted on all nodes where K10 is installed.

Specifying a Disaster Recovery Passphrase

Currently, K10 Disaster Recovery encrypts all artifacts using the AES-256-GCM algorithm. The passphrase entered while enabling Disaster Recovery is used for this encryption. Therefore, on the cluster used for K10 recovery, the Secret k10-dr-secret must be created with that same passphrase in the K10 namespace (default kasten-io).

The passphrase can be provided as a raw string or as a reference to a secret in HashiCorp Vault or AWS Secrets Manager.

Specifying the passphrase as a raw string:

$ kubectl create secret generic k10-dr-secret \
--namespace kasten-io \
--from-literal key=<passphrase>
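Typing the passphrase directly on the command line records it in shell history. A minimal sketch of one way to avoid that is to prompt for the passphrase with bash's `read -s`. The function name `create_dr_secret_raw` and the `K10_NAMESPACE` and `KUBECTL` variables are hypothetical conveniences, not part of K10. Note that this only keeps the value out of history; kubectl still receives it as an argument.

```shell
#!/usr/bin/env bash
# Sketch: create k10-dr-secret from a passphrase entered at a prompt, so
# the passphrase is not saved in shell history.
# K10_NAMESPACE defaults to kasten-io; KUBECTL=echo previews the command.
create_dr_secret_raw() {
  local ns="${K10_NAMESPACE:-kasten-io}" passphrase
  # -r keeps backslashes literal; -s turns off echo when reading a terminal.
  IFS= read -rs -p "DR passphrase: " passphrase
  echo >&2  # end the silent prompt line
  if [ -z "$passphrase" ]; then
    echo "empty passphrase" >&2
    return 1
  fi
  "${KUBECTL:-kubectl}" create secret generic k10-dr-secret \
    --namespace "$ns" \
    --from-literal "key=$passphrase"
}
```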

Specifying the passphrase as a HashiCorp Vault secret:

$ kubectl create secret generic k10-dr-secret \
--namespace kasten-io \
--from-literal source=vault \
--from-literal vault-kv-version=<version-of-key-value-secrets-engine> \
--from-literal vault-mount-path=<path-where-key-value-engine-is-mounted> \
--from-literal vault-secret-path=<path-from-mount-to-passphrase-key> \
--from-literal key=<name-of-passphrase-key>

# Example

$ kubectl create secret generic k10-dr-secret \
--namespace kasten-io \
--from-literal source=vault \
--from-literal vault-kv-version=KVv1 \
--from-literal vault-mount-path=secret \
--from-literal vault-secret-path=k10 \
--from-literal key=passphrase

The supported values for vault-kv-version are KVv1 and KVv2.
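Because only two values are accepted for vault-kv-version, a wrapper can reject anything else before the Secret is created. This is a sketch; the function name `create_dr_secret_vault` and the `K10_NAMESPACE`/`KUBECTL` variables are hypothetical, and `KUBECTL=echo` only previews the command.

```shell
#!/usr/bin/env bash
# Sketch: create a Vault-backed k10-dr-secret after checking that the KV
# secrets engine version is one of the supported values (KVv1, KVv2).
# Arguments: <kv-version> <mount-path> <secret-path> <key-name>
create_dr_secret_vault() {
  [ "$#" -eq 4 ] || {
    echo "usage: create_dr_secret_vault <kv-version> <mount-path> <secret-path> <key>" >&2
    return 2
  }
  local kv_version="$1" mount_path="$2" secret_path="$3" key_name="$4"
  case "$kv_version" in
    KVv1|KVv2) ;;
    *) echo "vault-kv-version must be KVv1 or KVv2, got '$kv_version'" >&2
       return 1 ;;
  esac
  "${KUBECTL:-kubectl}" create secret generic k10-dr-secret \
    --namespace "${K10_NAMESPACE:-kasten-io}" \
    --from-literal source=vault \
    --from-literal "vault-kv-version=$kv_version" \
    --from-literal "vault-mount-path=$mount_path" \
    --from-literal "vault-secret-path=$secret_path" \
    --from-literal "key=$key_name"
}
```

For instance, `create_dr_secret_vault KVv1 secret k10 passphrase` issues the same command as the example above.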

Note

Using a passphrase from HashiCorp Vault also requires enabling HashiCorp Vault authentication when installing the kasten/k10restore helm chart. Refer to Enable HashiCorp Vault using Token Auth or Enable HashiCorp Vault using Kubernetes Auth.

Specifying the passphrase as an AWS Secrets Manager secret:

$ kubectl create secret generic k10-dr-secret \
--namespace kasten-io \
--from-literal source=aws \
--from-literal aws-region=<aws-region-for-secret> \
--from-literal key=<aws-secret-name>

# Example

$ kubectl create secret generic k10-dr-secret \
--namespace kasten-io \
--from-literal source=aws \
--from-literal aws-region=us-east-1 \
--from-literal key=k10/dr/passphrase
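A region or name typo is easier to catch before recovery starts. The following sketch first confirms that the secret exists, using `aws secretsmanager describe-secret`, and then creates k10-dr-secret. It assumes the AWS CLI is installed and has credentials allowed to describe the secret. This check runs with your local credentials, not the AWS Infrastructure Profile that K10 uses. The function name and the `AWS`/`KUBECTL`/`K10_NAMESPACE` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify the AWS Secrets Manager secret is visible in the given
# region, then create an AWS-backed k10-dr-secret.
# Arguments: <aws-region> <secret-name>
# AWS=true KUBECTL=echo previews the kubectl command without calling AWS.
create_dr_secret_aws() {
  local region="$1" secret_name="$2"
  if ! "${AWS:-aws}" secretsmanager describe-secret \
      --region "$region" --secret-id "$secret_name" >/dev/null; then
    echo "secret '$secret_name' not found in $region (or not readable)" >&2
    return 1
  fi
  "${KUBECTL:-kubectl}" create secret generic k10-dr-secret \
    --namespace "${K10_NAMESPACE:-kasten-io}" \
    --from-literal source=aws \
    --from-literal "aws-region=$region" \
    --from-literal "key=$secret_name"
}
```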

Reinstall K10

Note

If you are reinstalling K10 on the same cluster, it is important to clean up the namespace in which K10 was previously installed before creating the passphrase Secret described above.

# Delete the kasten-io namespace.

$ kubectl delete namespace kasten-io
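After the namespace is deleted, it must exist again before k10-dr-secret can be created in it. A minimal sketch of the clean-up-and-recreate step follows. By default (`--wait=true`), `kubectl delete` waits until the namespace is fully removed, and `--timeout` bounds that wait; the 10-minute value is an arbitrary choice. The function name and the `K10_NAMESPACE`/`KUBECTL` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: remove the previous K10 namespace and recreate it empty so the
# passphrase Secret can be created before K10 is reinstalled.
# KUBECTL=echo previews the commands instead of running them.
reset_k10_namespace() {
  local ns="${K10_NAMESPACE:-kasten-io}" k="${KUBECTL:-kubectl}"
  # kubectl delete blocks until the namespace is gone (--wait defaults to true).
  "$k" delete namespace "$ns" --ignore-not-found --timeout=10m || return 1
  "$k" create namespace "$ns"
}
```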

K10 must be reinstalled before recovery. Follow the K10 installation instructions.

Provide External Storage Configuration

Create a Location Profile with the object storage location or NFS file storage location where K10 backups are stored.

Restore K10 Backup

Requirements:

Source cluster ID

Name of the Location Profile from the previous step

# Install the helm chart that creates the K10 restore job and wait for completion of the `k10-restore` job
# Assumes that K10 is installed in 'kasten-io' namespace.
$ helm install k10-restore kasten/k10restore --namespace=kasten-io \
--set sourceClusterID=<source-clusterID> \
--set profile.name=<location-profile-name>

For an OpenShift environment, --set scc.create=true is also required.
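The install command and the wait for the restore Job can be combined into a single step. The following is a sketch. It assumes the Job is named k10-restore, as the comment above states. The 60-minute client-side wait is an arbitrary choice. `kubectl wait --for=condition=complete` keeps waiting if the Job fails, until the timeout expires. Extra arguments such as `--set scc.create=true` are passed to helm unchanged. The function name and the `HELM`/`KUBECTL`/`K10_NAMESPACE` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: install the kasten/k10restore chart, then block until the
# k10-restore Job reports the Complete condition.
# Arguments: <source-cluster-id> <location-profile-name> [extra helm args...]
# HELM=echo KUBECTL=echo previews the commands.
run_k10_restore() {
  [ "$#" -ge 2 ] || {
    echo "usage: run_k10_restore <cluster-id> <profile-name> [helm args...]" >&2
    return 2
  }
  local ns="${K10_NAMESPACE:-kasten-io}" cluster_id="$1" profile="$2"
  shift 2
  # Remaining arguments (e.g. --set scc.create=true on OpenShift) go to helm as-is.
  "${HELM:-helm}" install k10-restore kasten/k10restore --namespace="$ns" \
    --set "sourceClusterID=$cluster_id" \
    --set "profile.name=$profile" "$@" || return 1
  "${KUBECTL:-kubectl}" wait --for=condition=complete job/k10-restore \
    --namespace "$ns" --timeout=60m
}
```

For example, on OpenShift: `run_k10_restore <source-clusterID> <location-profile-name> --set scc.create=true`.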

The restore job always restores the restore point catalog and artifact information. To skip restoring other resources (options include profiles, policies, and secrets), use the skipResource flag.

# For example, to skip restoring profiles and policies:
$ helm install k10-restore kasten/k10restore --namespace=kasten-io \
--set sourceClusterID=<source-clusterID> \
--set profile.name=<location-profile-name> \
--set skipResource="profiles\,policies"
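The backslash before the comma is needed because `helm --set` treats an unescaped comma as a separator between key=value pairs. A small helper can build the escaped list from separate resource names. It is a sketch, and `join_skip_resources` is a hypothetical name that is not part of the chart.

```shell
#!/usr/bin/env bash
# Sketch: join resource names with "\," so helm --set keeps them as one
# skipResource value instead of splitting at each comma.
join_skip_resources() {
  local out="" item sep='\,'
  for item in "$@"; do
    out="${out:+$out$sep}$item"
  done
  printf '%s\n' "$out"
}
```

Usage: `--set skipResource="$(join_skip_resources profiles policies)"` passes `profiles\,policies` to helm, which matches the command above.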

The timeout of the entire restore process can be configured with the helm field restore.timeout. This field is an integer, and its value is in minutes.

# For example, to specify the restore timeout:
$ helm install k10-restore kasten/k10restore --namespace=kasten-io \
--set sourceClusterID=<source-clusterID> \
--set profile.name=<location-profile-name> \
--set restore.timeout=<timeout-in-minutes>

If the Disaster Recovery Location Profile was configured for Immutable Backups, K10 can be restored to an earlier point in time. The protection period chosen when creating the profile dictates how far in the past the point in time can be. Set the pointInTime helm value to the desired timestamp.

# e.g. to restore K10 to 15:04:05 UTC on Jan 2, 2022:
$ helm install k10-restore kasten/k10restore --namespace=kasten-io \
--set sourceClusterID=<source-clusterID> \
--set profile.name=<location-profile-name> \
--set pointInTime="2022-01-02T15:04:05Z"

See Immutable Backups Workflow for additional information.
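A mistyped timestamp is easy to miss in a long helm command. The following sketch produces pointInTime values in the UTC layout used in the example above and checks that a value matches that layout. It checks only the layout; it does not confirm that the date is valid or that it falls within the profile's protection period. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build and sanity-check pointInTime values of the form
# YYYY-MM-DDTHH:MM:SSZ (UTC).

# Returns 0 if $1 matches the layout (not a full calendar validation).
is_utc_timestamp() {
  printf '%s\n' "$1" | grep -Eq '^[0-9]{4}-[0-9]{2}-[0-9]{2}T[0-9]{2}:[0-9]{2}:[0-9]{2}Z$'
}

# Current time in UTC, in the same layout.
utc_now() {
  date -u +%Y-%m-%dT%H:%M:%SZ
}
```

With GNU date, you can build a relative timestamp with `date -u -d '2 hours ago' +%Y-%m-%dT%H:%M:%SZ`.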

Enable HashiCorp Vault using Token Auth

Create a Kubernetes secret with the Vault token.

$ kubectl create secret generic vault-creds \
--namespace kasten-io \
--from-literal vault_token=<vault-token>

Warning

This may cause the token to be stored in shell history.
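To keep the token out of shell history, one option is to prompt for it with bash's `read -s` rather than typing it in the command. This is a sketch. The Secret name vault-creds and the vault_token key match the command above. The function name and the `K10_NAMESPACE`/`KUBECTL` variables are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: create the vault-creds Secret from a token entered at a silent
# prompt, so the token does not land in shell history.
# KUBECTL=echo previews the command.
create_vault_creds() {
  local token
  IFS= read -rs -p "Vault token: " token
  echo >&2  # end the silent prompt line
  [ -n "$token" ] || { echo "empty token" >&2; return 1; }
  "${KUBECTL:-kubectl}" create secret generic vault-creds \
    --namespace "${K10_NAMESPACE:-kasten-io}" \
    --from-literal "vault_token=$token"
}
```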

Use these additional parameters when installing the kasten/k10restore helm chart.

--set vault.enabled=true \
--set vault.address=<vault-server-address> \
--set vault.secretName=<name-of-secret-with-vault-creds>

Enable HashiCorp Vault using Kubernetes Auth

Refer to Configuring Vault Server For Kubernetes Auth prior to installing the kasten/k10restore helm chart.

Use these additional parameters when installing the kasten/k10restore helm chart.

--set vault.enabled=true \
--set vault.address=<vault-server-address> \
--set vault.role=<vault-kubernetes-authentication-role_name> \
--set vault.serviceAccountTokenPath=<service-account-token-path> # optional

vault.role is the name of the Vault Kubernetes authentication role binding the K10 service account and namespace to the Vault policy.

vault.serviceAccountTokenPath is optional and defaults to /var/run/secrets/kubernetes.io/serviceaccount/token.

Restore K10 Backup in Air-Gapped Environment

For air-gapped installations, it is assumed that the k10offline tool has been used to push the images to a private container registry.

The command below instructs k10restore to run in air-gapped mode.

# Install the helm chart that creates the K10 restore job and wait for completion of the `k10-restore` job
# Assumes that K10 is installed in 'kasten-io' namespace.
$ helm install k10-restore kasten/k10restore --namespace=kasten-io \
--set airgapped.repository=repo.example.com \
--set sourceClusterID=<source-clusterID> \
--set profile.name=<location-profile-name>

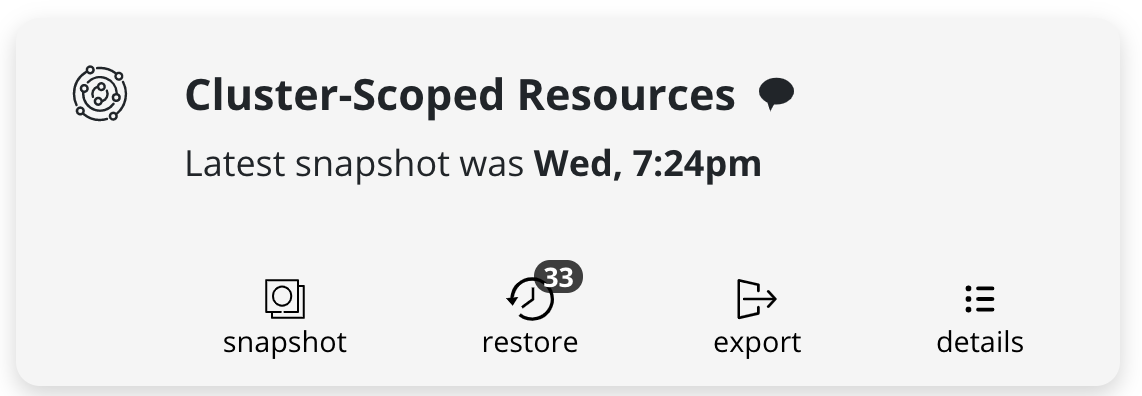

Cluster-Scoped Resource Recovery

Prior to recovering applications, it may be desirable to restore cluster-scoped resources. Cluster-scoped resources may be needed for cluster configuration or as part of application recovery.

Upon completion of the Disaster Recovery Restore job, go to the Applications card, hover over the Cluster-Scoped Resources card, click the restore icon, and select a cluster restore point to recover from.

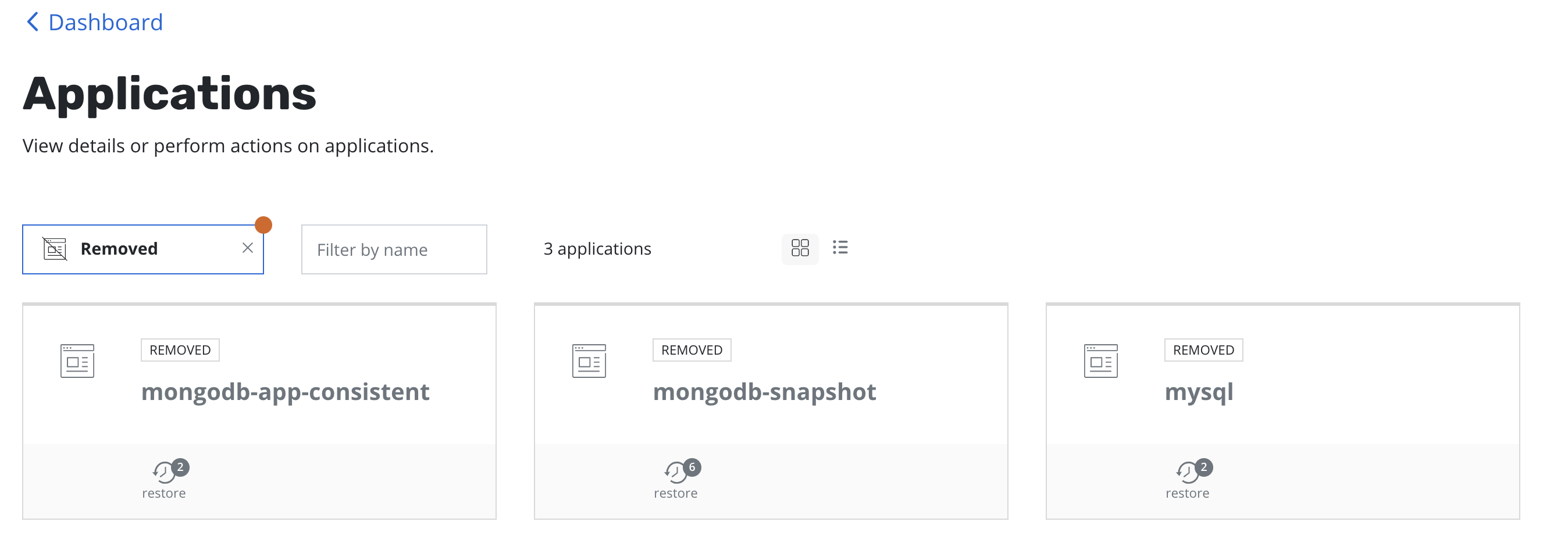

Application Recovery

Upon completion of the Disaster Recovery Restore job, go to the Applications card and select Removed under the Filter by status drop-down menu.

Click restore under the application and select a restore point to recover from.

Uninstall k10restore

The k10restore instance can be uninstalled with the helm uninstall command, which takes only the release name.

# For example, to uninstall k10restore from the kasten-io namespace:
$ helm uninstall k10-restore --namespace=kasten-io

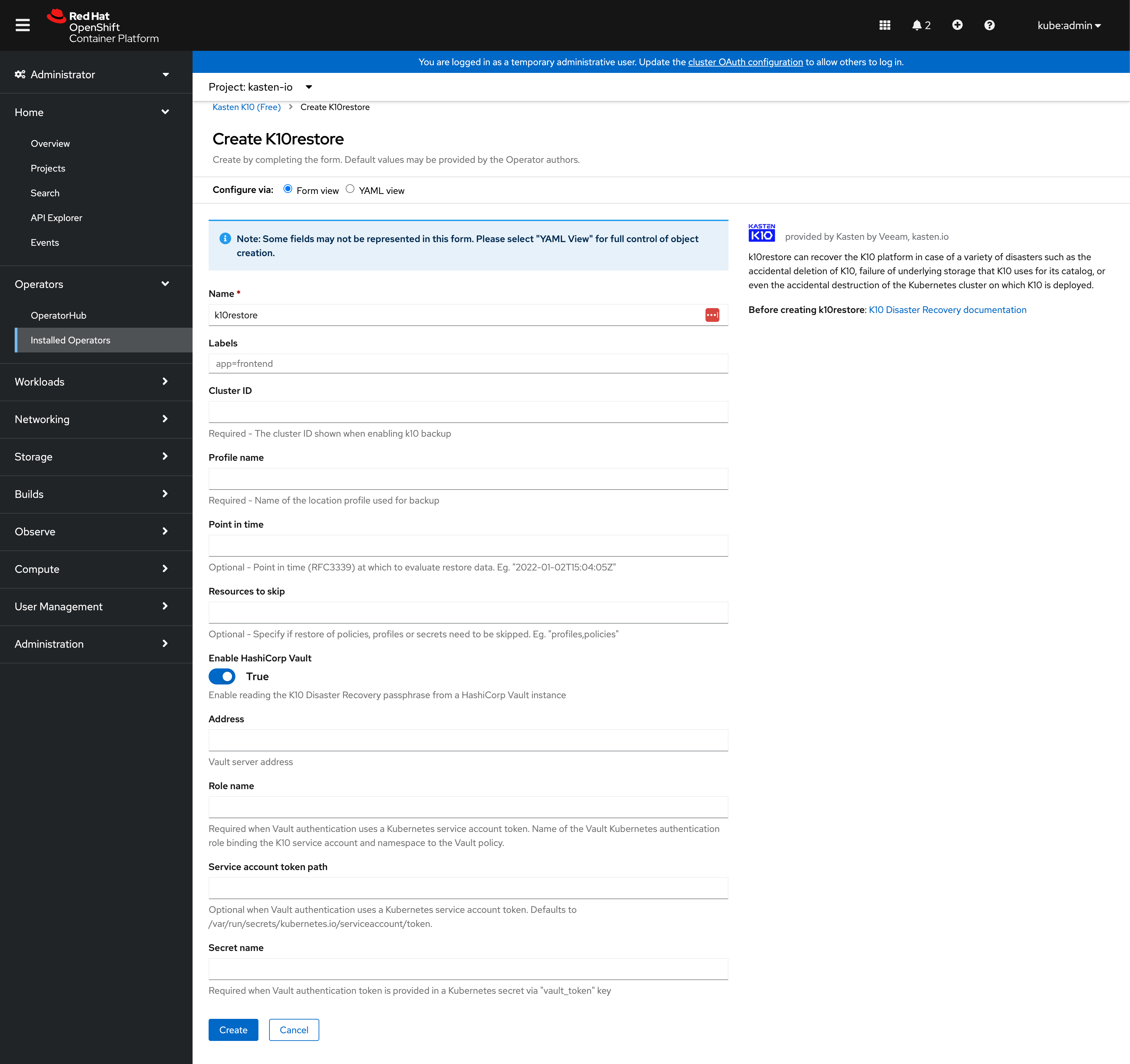

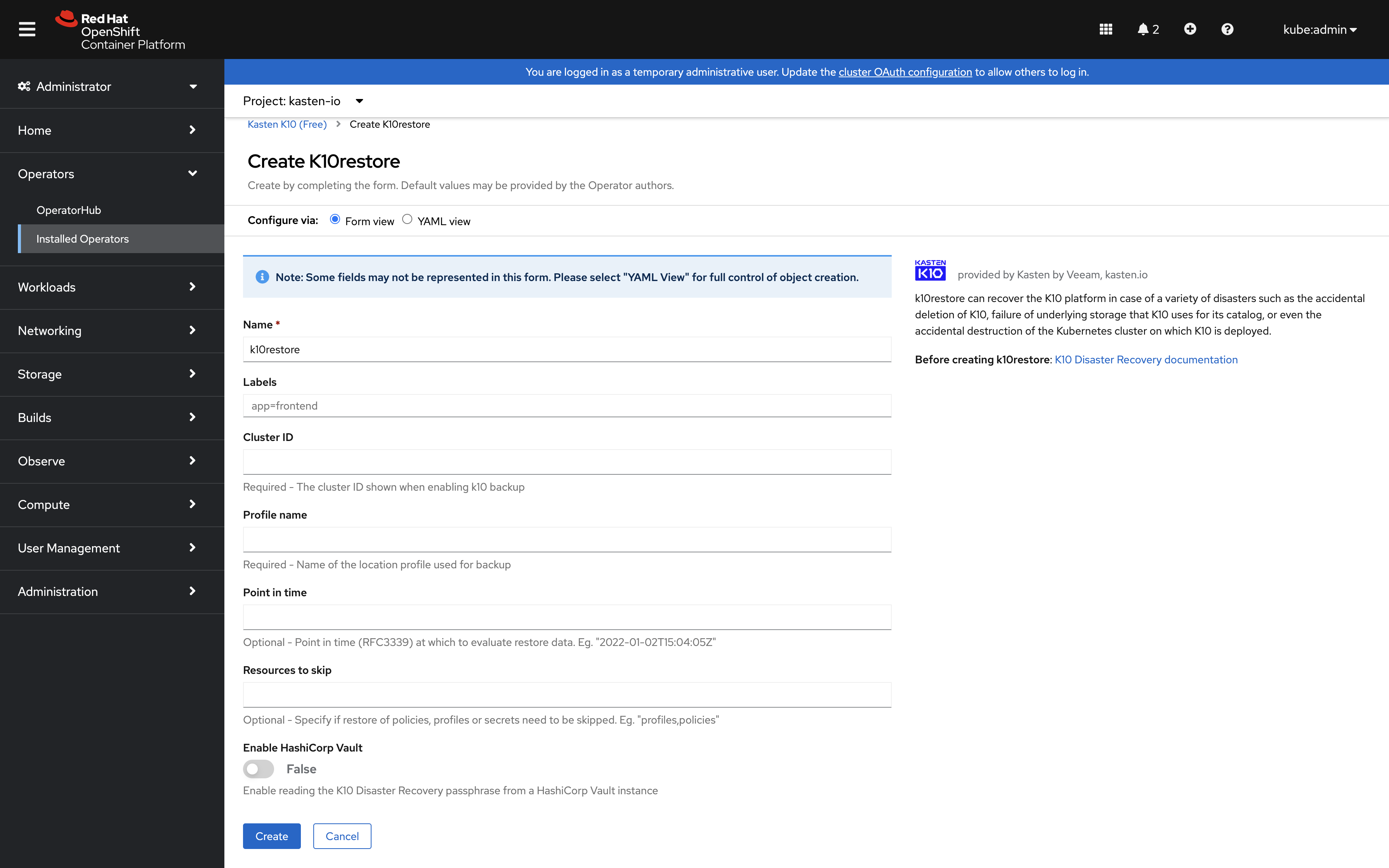

Recovering with the Operator

Recovering from a K10 backup involves the following sequence of actions:

Install a fresh K10 instance.

Configure a Location Profile from where the K10 backup will be restored.

Create a Kubernetes Secret named k10-dr-secret in the same namespace as the K10 install, with the passphrase given when disaster recovery was enabled on the previous K10 instance. The commands are detailed in Specifying a Disaster Recovery Passphrase.

Create a K10restore instance. The required values are

Cluster ID - value given when disaster recovery was enabled on the previous K10 instance.

Profile name - name of the Location Profile configured in Step 2.

and the optional values are

Point in time - time (RFC3339) at which to evaluate restore data. Example "2022-01-02T15:04:05Z".

Resources to skip - can be used to skip restore of specific resources. Example "profiles,policies".

After recovery, deleting the k10restore instance is recommended.

Operator K10restore form view with Enable HashiCorp Vault set to False

Operator K10restore form view with Enable HashiCorp Vault set to True