Authentication

K10 offers several ways to secure access to its dashboard and APIs:

Direct Access

When exposing the K10 dashboard externally, an authentication method must be configured to secure access.

If accessing the K10 API directly or using kubectl, any authentication method configured for the cluster is acceptable. For more information, see Kubernetes authentication.

Basic Authentication

Basic Authentication allows you to protect access to the K10 dashboard with a user name and password. To enable Basic Authentication, you will first need to generate htpasswd credentials by either using an online tool or via the htpasswd binary found on most systems. Once generated, you need to supply the resulting string with the helm install or upgrade command using the following flags.

--set auth.basicAuth.enabled=true \
--set auth.basicAuth.htpasswd='example:$apr1$qrAVXu.v$Q8YVc50vtiS8KPmiyrkld0'

Alternatively, you can use an existing secret that contains a file created with htpasswd. The secret must be in the K10 namespace. It must contain a key named auth in its data field, and the value of that key must be the credentials generated using htpasswd.

--set auth.basicAuth.enabled=true \
--set auth.basicAuth.secretName=my-basic-auth-secret
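The Secret described above can be sketched as a manifest. This is a minimal sketch rather than the documented procedure: the helper name `render_basic_auth_secret` is hypothetical, and it assumes that a `base64` command is available and that the Secret needs only the single `auth` key under `data`. It prints the manifest and does not contact the cluster.

```shell
# Hypothetical helper: print a Secret manifest whose data.auth key holds
# a base64-encoded htpasswd line.
# $1: secret name, $2: namespace, $3: htpasswd line (user:hash)
render_basic_auth_secret() {
  # Values under "data" must be base64-encoded; tr removes the line
  # wrapping that some base64 implementations add.
  encoded=$(printf '%s\n' "$3" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
  namespace: $2
data:
  auth: ${encoded}
EOF
}

# Single quotes keep the shell from expanding the "$" signs in the hash.
render_basic_auth_secret my-basic-auth-secret kasten-io \
  'example:$apr1$qrAVXu.v$Q8YVc50vtiS8KPmiyrkld0'
```

The printed manifest can be reviewed and then piped to `kubectl apply --filename=-`.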

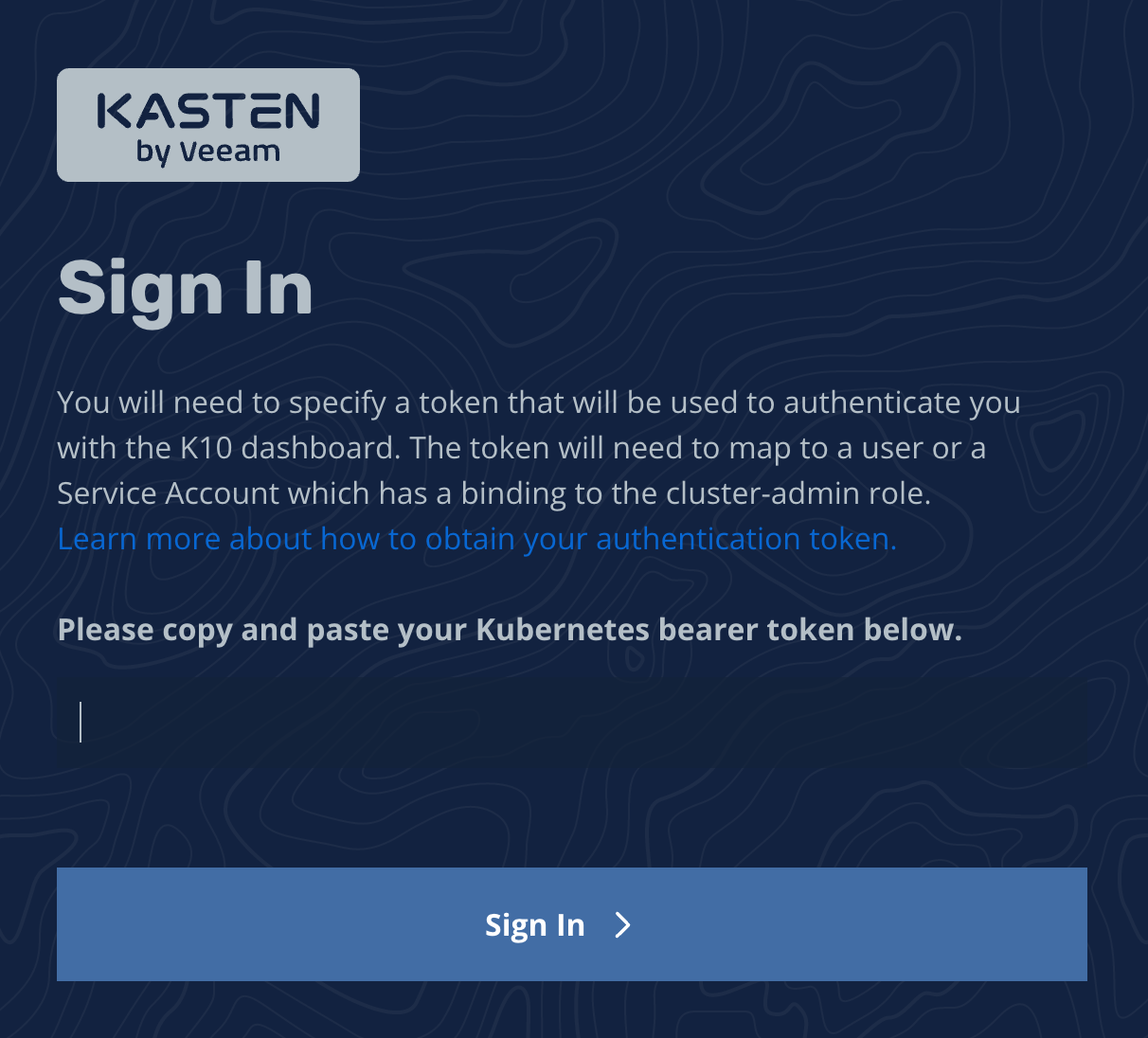

Token Authentication

To enable token authentication use the following flag as part of the initial Helm install or subsequent Helm upgrade command.

--set auth.tokenAuth.enabled=true

Once the dashboard is configured, you will be prompted to provide a bearer token that will be used when accessing the dashboard.

Obtaining Tokens

Token authentication allows using any token that can be verified by the Kubernetes server. For details on the supported token types see Authentication Strategies.

The most common token type that you can use is a service account bearer token.

You can use kubectl to obtain such a token for a service account that you know has the proper permissions.

For example, assuming that K10 is installed in the kasten-io namespace and the ServiceAccount is named my-kasten-sa:

Generate a token with an expiration period (recommended practice):

$ kubectl --namespace kasten-io create token my-kasten-sa --duration=24h

Note

A kubectl client of version 1.24 or higher is required to create a token resource.

Create a secret for the desired service account and fetch a permanent token:

$ desired_token_secret_name=my-kasten-sa-token
$ kubectl apply --namespace=kasten-io --filename=- <<EOF
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: ${desired_token_secret_name}
  annotations:
    kubernetes.io/service-account.name: "my-kasten-sa"
EOF
$ kubectl get secret ${desired_token_secret_name} --namespace kasten-io -ojsonpath="{.data.token}" | base64 --decode

Prior to Kubernetes 1.24, the token must be extracted from a service account's secret:

$ sa_secret=$(kubectl get serviceaccount my-kasten-sa -o jsonpath="{.secrets[0].name}" --namespace kasten-io)

$ kubectl get secret $sa_secret --namespace kasten-io -ojsonpath="{.data.token}{'\n'}" | base64 --decode
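Service account tokens are JSON Web Tokens, so the claims of a token obtained with any of the commands above can be inspected locally, for example to confirm which service account it belongs to or when it expires. The sketch below is illustrative only: `jwt_payload` is a hypothetical helper, it assumes a `base64` command that accepts `--decode`, and it does not verify the token's signature.

```shell
# Hypothetical helper: print the JSON claims (second segment) of a JWT.
# $1: the token string
jwt_payload() {
  # Take the second dot-separated segment and map base64url to base64.
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the "=" padding that JWT encoding strips.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 --decode
}

# Usage (needs cluster access, not run here):
#   jwt_payload "$(kubectl --namespace kasten-io create token my-kasten-sa --duration=24h)"
```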

If a suitable service account doesn't already exist, one can be created with:

$ kubectl create serviceaccount my-kasten-sa --namespace kasten-io

The new service account will need appropriate role bindings or cluster role bindings in order to use it within K10. To learn more about the necessary K10 permissions, see Authorization.

Token-Based Authentication with AWS EKS

For more details on how to set up token-based authentication with AWS EKS, please follow the following documentation.

Obtaining Tokens with Red Hat OpenShift

An authentication token can be obtained from Red Hat OpenShift via the OpenShift Console by clicking on your user name in the top right corner of the console and selecting Copy Login Command. Alternatively, the token can also be obtained by using the following command:

$ oc whoami --show-token

OAuth Proxy with Red Hat OpenShift (Preview)

The OpenShift OAuth proxy can be used for authenticating access to K10. To set up the OAuth proxy, the following resources have to be deployed in the same namespace as K10.

Configuration

ServiceAccount

Create a ServiceAccount that is to be used by the OAuth proxy deployment.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: k10-oauth-proxy
  namespace: kasten-io

Cookie Secret

Create a Secret that is used for encrypting the cookie created by the proxy. The name of the Secret will be used in the configuration of the OAuth proxy.

$ oc --namespace kasten-io create secret generic oauth-proxy-secret \
    --from-literal=session-secret=$(head /dev/urandom | tr -dc A-Za-z0-9 | head -c43)
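Because `head /dev/urandom` reads a fixed number of lines of random bytes, the amount of input that reaches `tr` varies from run to run. A variant that collects characters in a loop until 43 are available might look like the sketch below. The variable names are illustrative, and the 43-character length is simply taken from the command above.

```shell
# Collect alphanumeric characters from /dev/urandom until at least 43
# are available, then trim to exactly 43.
session_secret=
while [ "${#session_secret}" -lt 43 ]; do
  # LC_ALL=C makes tr treat the input as raw bytes rather than locale text.
  chunk=$(head -c 64 /dev/urandom | LC_ALL=C tr -dc 'A-Za-z0-9')
  session_secret="${session_secret}${chunk}"
done
session_secret=$(printf '%s' "$session_secret" | cut -c 1-43)

# Print only the length, not the secret itself.
printf 'generated %s characters\n' "${#session_secret}"

# Usage (needs cluster access, not run here):
#   oc --namespace kasten-io create secret generic oauth-proxy-secret \
#     --from-literal=session-secret="${session_secret}"
```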

ConfigMap for OpenShift Root CA

Create a ConfigMap annotated with the inject-cabundle OpenShift annotation. The annotation results in the injection of OpenShift's root CA into the ConfigMap. The name of this ConfigMap is used in the configuration of the OAuth proxy.

apiVersion: v1
kind: ConfigMap
metadata:
  annotations:
    service.beta.openshift.io/inject-cabundle: "true"
  name: service-ca
  namespace: kasten-io

NetworkPolicy

Create a NetworkPolicy to allow ingress traffic on port 8080 and port 8083 to be forwarded to the OAuth proxy service.

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-external-oauth-proxy
  namespace: kasten-io
spec:
  ingress:
  - ports:
    - port: 8083
      protocol: TCP
    - port: 8080
      protocol: TCP
  podSelector:
    matchLabels:
      service: oauth-proxy-svc
  policyTypes:
  - Ingress

Service

Deploy a Service for the OAuth proxy. The Service needs to be annotated with the serving-cert-secret-name annotation, which results in OpenShift generating a TLS private key and certificate that the OAuth proxy uses for secure connections to it. The name of the Secret used with the annotation must match the name used in the OAuth proxy deployment.

apiVersion: v1
kind: Service
metadata:
  annotations:
    service.beta.openshift.io/serving-cert-secret-name: oauth-proxy-tls-secret
  labels:
    service: oauth-proxy-svc
  name: oauth-proxy-svc
  namespace: kasten-io
spec:
  ports:
  - name: https
    port: 8083
    protocol: TCP
    targetPort: https
  - name: http
    port: 8080
    protocol: TCP
    targetPort: http
  selector:
    service: oauth-proxy-svc
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800
  type: ClusterIP
status:
  loadBalancer: {}

Deployment

Next, a Deployment for OAuth proxy needs to be created. It is recommended that a separate OpenShift OAuth client be registered for this purpose. The name of the client and its Secret will be used with the --client-id and --client-secret configuration options respectively.

When an OpenShift ServiceAccount was used as the OAuth client, it was observed that the token generated by the proxy did not have sufficient scopes to operate K10. It is therefore not recommended to deploy the proxy using an OpenShift ServiceAccount as the OAuth client.

It is also important to configure the --pass-access-token option with the proxy so that it includes the OpenShift token in the X-Forwarded-Access-Token header when forwarding a request to K10.

The --scope configuration must have the user:full scope to ensure that the token generated by the proxy has sufficient scopes for operating K10.

The --upstream configuration must point to the K10 gateway Service.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: oauth-proxy-svc
  namespace: kasten-io
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      service: oauth-proxy-svc
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        service: oauth-proxy-svc
    spec:
      containers:
      - args:
        - --https-address=:8083
        - --http-address=:8080
        - --tls-cert=/tls/tls.crt
        - --tls-key=/tls/tls.key
        - --provider=openshift
        - --client-id=oauth-proxy-client
        - --client-secret=oauthproxysecret
        - --openshift-ca=/etc/pki/tls/cert.pem
        - --openshift-ca=/var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        - --openshift-ca=/service-ca/service-ca.crt
        - --scope=user:full user:info user:check-access user:list-projects
        - --cookie-secret-file=/secret/session-secret
        - --cookie-secure=true
        - --upstream=http://gateway:8000
        - --pass-access-token
        - --redirect-url=http://openshift.example.com/oauth2/callback
        - --email-domain=*
        image: openshift/oauth-proxy:latest
        imagePullPolicy: Always
        name: oauth-proxy
        ports:
        - containerPort: 8083
          name: https
          protocol: TCP
        - containerPort: 8080
          name: http
          protocol: TCP
        resources:
          requests:
            cpu: 10m
            memory: 20Mi
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /service-ca
          name: service-ca
          readOnly: true
        - mountPath: /secret
          name: oauth-proxy-secret
          readOnly: true
        - mountPath: /tls
          name: oauth-proxy-tls-secret
          readOnly: true
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: k10-oauth-proxy
      serviceAccountName: k10-oauth-proxy
      terminationGracePeriodSeconds: 30
      volumes:
      - configMap:
          defaultMode: 420
          name: service-ca
        name: service-ca
      - name: oauth-proxy-tls-secret
        secret:
          defaultMode: 420
          secretName: oauth-proxy-tls-secret
      - name: oauth-proxy-secret
        secret:
          defaultMode: 420
          secretName: oauth-proxy-secret

OAuth Client

As mentioned earlier, it is recommended that a new OpenShift OAuth client be registered.

The redirectURIs field has to point to the domain name where K10 is accessible. For example, if K10 is available at https://example.com/k10, the redirect URI should be set to https://example.com.

The name of this client must match the --client-id configuration in the OAuth proxy deployment.

The Secret in this client must match the --client-secret configuration in the OAuth proxy deployment.

The grantMethod can be either prompt or auto.

kind: OAuthClient
apiVersion: oauth.openshift.io/v1
metadata:
  name: oauth-proxy-client
secret: "oauthproxysecret"
redirectURIs:
- "http://openshift4-4.aws.kasten.io"
grantMethod: prompt

Forwarding Traffic to the Proxy

Traffic meant for K10 must be forwarded to the OAuth proxy for authentication before it reaches K10. Ensure that ingress traffic on port 80 is forwarded to port 8080 and traffic on port 443 is forwarded to port 8083 of the oauth-proxy-svc Service.

Here is one example of how to forward traffic to the proxy. In this example K10 was deployed with an external gateway Service. The gateway Service's ports were modified to forward traffic like so:

ports:
- name: https
  nodePort: 30229
  port: 443
  protocol: TCP
  targetPort: 8083
- name: http
  nodePort: 31658
  port: 80
  protocol: TCP
  targetPort: 8080
selector:
  service: oauth-proxy-svc

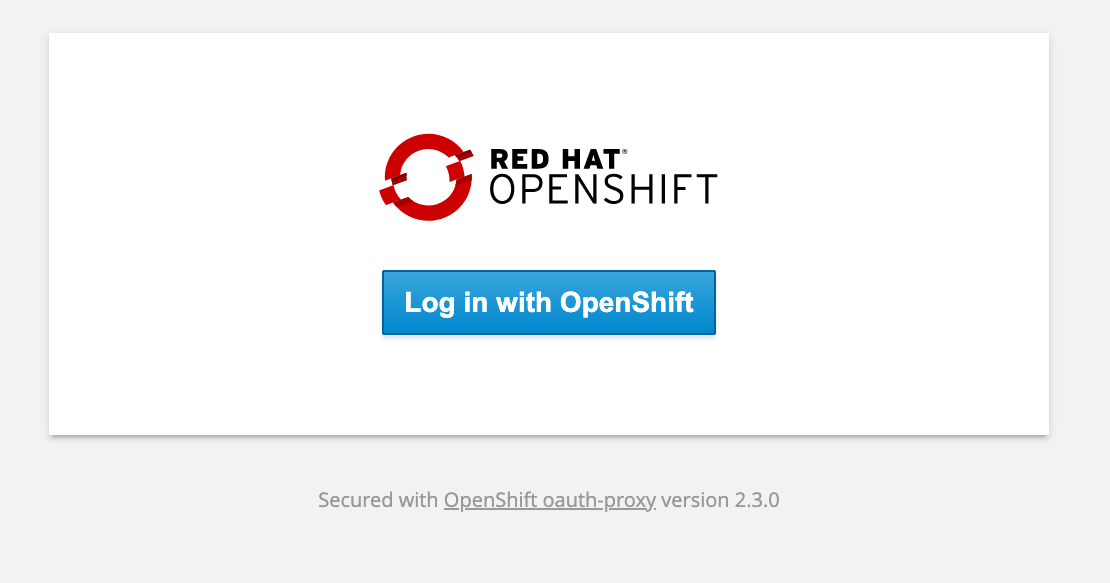

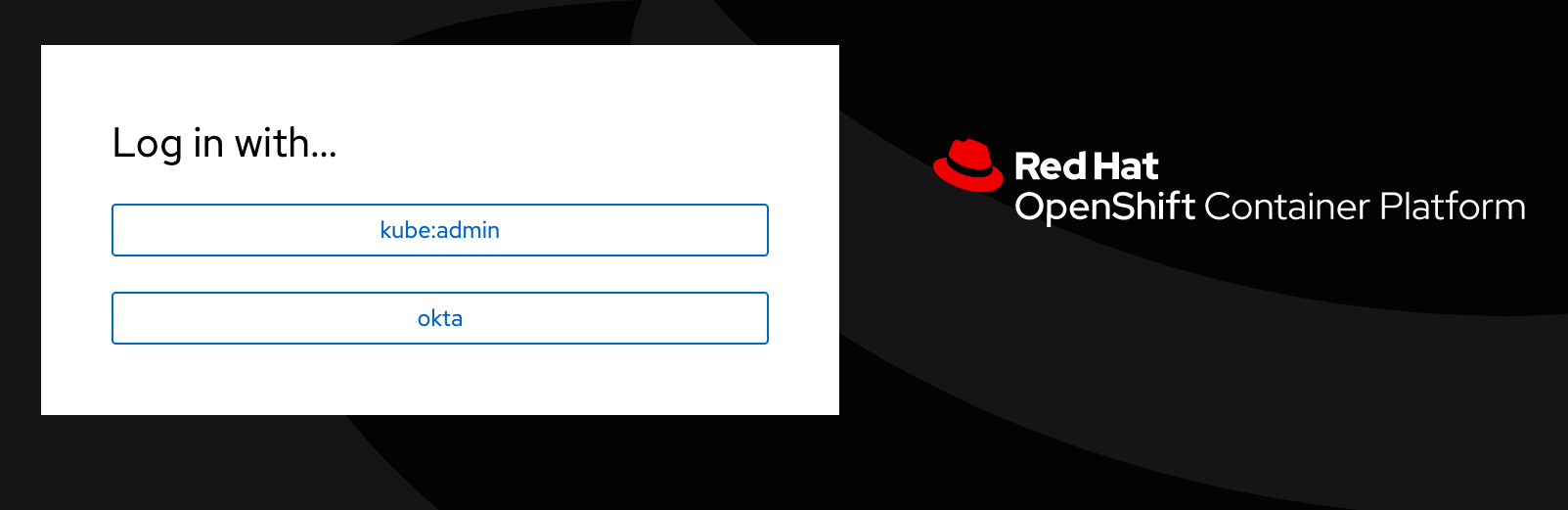

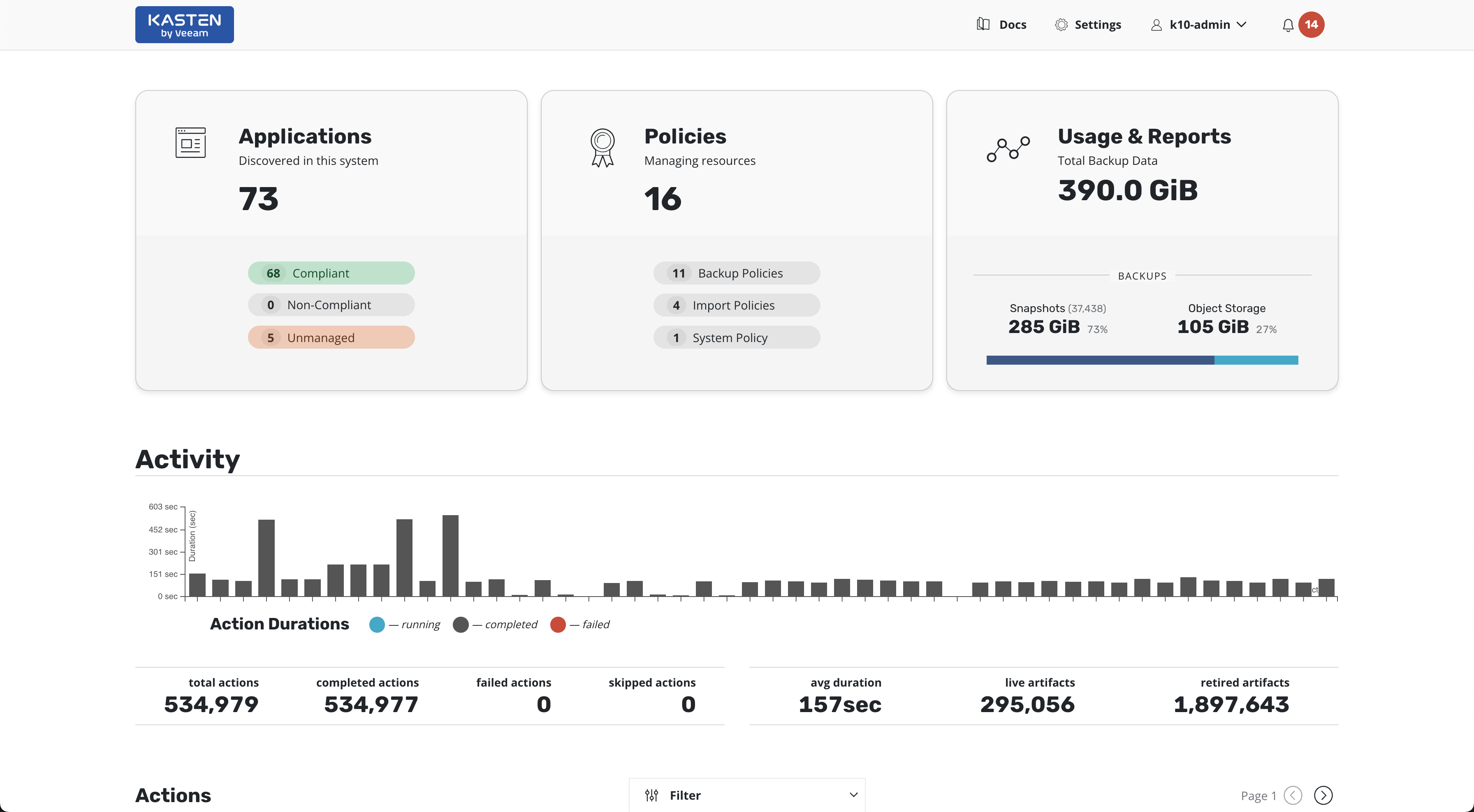

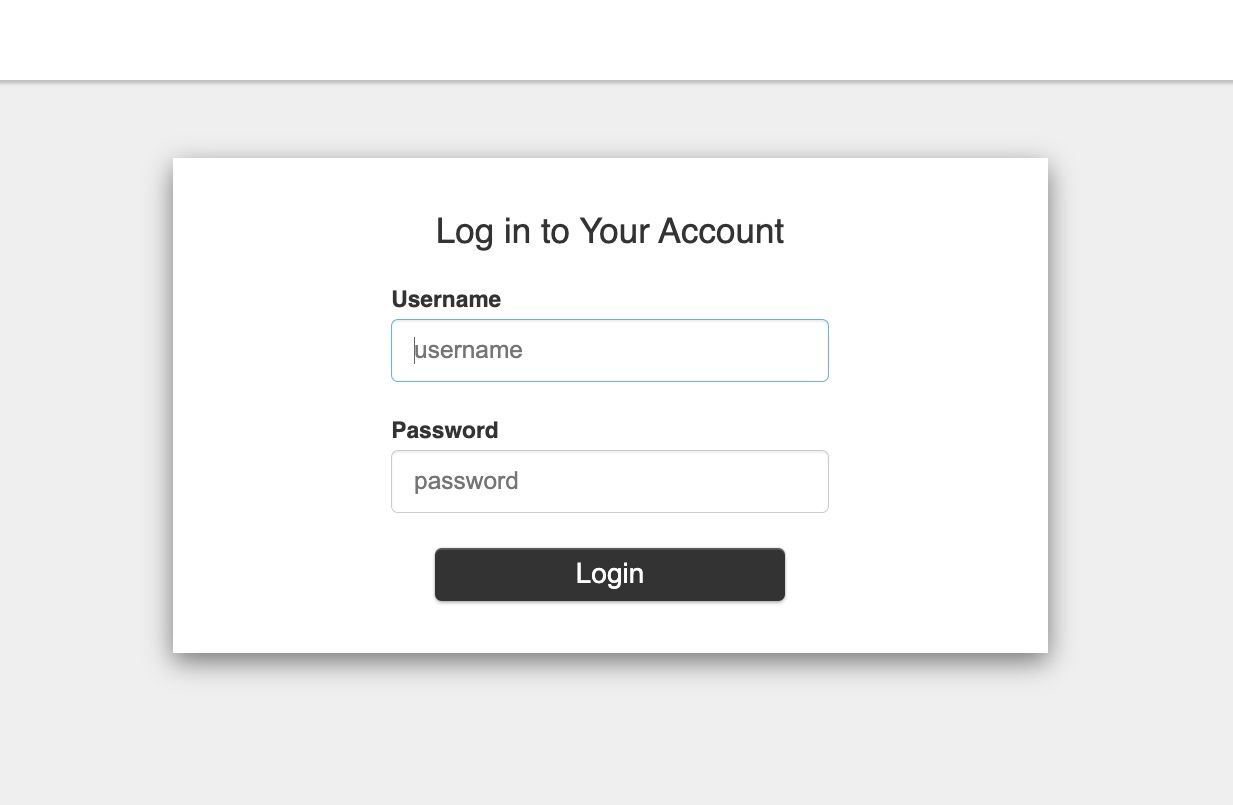

Sample Auth Flow with Screenshots

This section is meant to provide an example of configuring authentication and authorization for operating K10 in an OpenShift cluster to help provide an end to end picture of the Auth flow when working with the OAuth proxy.

Okta was configured as the OIDC provider in the OpenShift cluster.

An OpenShift group called k10-admins was created and users were added to this group.

A cluster role binding was created to bind the k10-admins group to the k10-admin cluster role.

A role binding was created to map the k10-admins group to the k10-ns-admin role in the K10 namespace.

When the user navigates to the K10 dashboard, the request reaches the proxy. The proxy presents a login screen to the user.

After clicking the login button, the user is forwarded to the OpenShift login screen. The OpenShift screen will provide the option of selecting kube:admin or the OIDC option if it has been configured in the cluster.

After clicking on the OIDC option, okta in this example, the OIDC provider's login screen is shown.

When authentication with the OIDC provider succeeds, the user is redirected to the K10 dashboard.

Additional Documentation

For more information about the OpenShift OAuth proxy, refer to the documentation here.

OpenID Connect Authentication

K10 supports the ability to obtain a token from an OIDC provider and then use that token for authentication. K10 extracts the user's ID from the token and uses Kubernetes User Impersonation with that ID to ensure that user-initiated actions (via the API, CLI or dashboard) are attributed to the authenticated user.

Cluster Setup

K10 works with your OIDC provider irrespective of whether the Kubernetes cluster is configured with the same OIDC provider, a different OIDC provider, or without any identity providers.

For configuring a cluster with OIDC Tokens see OpenID Connect(OIDC) Token.

For more information on the Kubernetes API configuration options, see Configuring the API Server.

When working with a hosted Kubernetes offering (e.g. GKE, AKS, IKS), there will usually be specific instructions on how to enable this, since you may not be able to explicitly configure the Kubernetes API server.

Overall, this portion of the configuration is beyond the scope of the K10 product and is part of the base setup of your Kubernetes cluster.

K10 Setup

K10 Configuration

The final step is providing K10 with the settings needed to initiate the OIDC workflow and obtain a token.

Enable OIDC

To enable OIDC based authentication use the following flag as part of the initial Helm install or subsequent Helm upgrade command.

--set auth.oidcAuth.enabled=true

OIDC Provider

This is a URL for OIDC provider. If the Kubernetes API server and K10 share the same OIDC provider, use the same URL that was used when configuring the --oidc-issuer-url option of the API server.

Use the following Helm option:

--set auth.oidcAuth.providerURL=<provider URL>

Redirect URL

This is the URL to the K10 gateway service.

Use https://<URL to k10 gateway service> for K10 exposed externally or http://127.0.0.1:<forwarding port> for K10 exposed through kubectl port-forward.

Use the following Helm option:

--set auth.oidcAuth.redirectURL=<gateway URL>

OIDC Scopes

This option defines the scopes that should be requested from the OIDC provider. If the Kubernetes API server and K10 share the same OIDC provider, use the same claims that were requested when configuring the --oidc-username-claim option of the API server.

Use the following Helm option:

--set auth.oidcAuth.scopes=<space separated scopes. Quoted if multiple>

OIDC Prompt

If provided, this option specifies whether the OIDC provider must prompt the user for consent or re-authentication. The well known values for this field are select_account, login, consent, and none. Check the OIDC provider's documentation to determine what value is supported. The default value is select_account.

Use the following Helm option:

--set auth.oidcAuth.prompt=<prompt>

OIDC Client ID

This option defines the Client ID that is registered with the OIDC Provider. If the Kubernetes API server and K10 share the same OIDC provider, use the same client ID specified when configuring the --oidc-client-id option of the API server.

Use the following Helm option:

--set auth.oidcAuth.clientID=<client id string>

OIDC Client Secret

This option defines the Client Secret that corresponds to the Client ID registered. You should have received this value from the OIDC provider when registering the Client ID.

Use the following Helm option:

--set auth.oidcAuth.clientSecret=<secret string>

OIDC User Name Claim

This option defines the OpenID claim that has to be used by K10 as the user name. It will be used by K10 for impersonating the user while interacting with the Kubernetes API server for authorization. If not provided, the default claim is sub. This user name must match the User defined in the role bindings described here: K10 RBAC.

Use the following Helm option:

--set auth.oidcAuth.usernameClaim=<username claim>

OIDC User Name Prefix

If provided, all usernames will be prefixed with this value. If not provided, username claims other than email are prefixed by the provider URL to avoid clashes. To skip any prefixing, provide the value -.

Use the following Helm option:

--set auth.oidcAuth.usernamePrefix=<username prefix>

OIDC Group Name Claim

If provided, this specifies the name of a custom OpenID Connect claim to be used by K10 to identify the groups that a user belongs to. The groups and the username will be used by K10 for impersonating the user while interacting with the Kubernetes API server for authorization.

To ensure that authorization for the user is successful, one of the groups should match with a Kubernetes group that has the necessary role bindings to allow the user to access K10.

If the user is an admin user, then the user is most likely set up with all the required permissions for accessing K10 and no new role bindings are necessary.

To avoid creating new role bindings for non-admin users every time a new user needs to be added to the list of users who will operate K10, consider adding the user to a group such as my-k10-admins in the OIDC provider and adding that user to the same group in the Kubernetes cluster. Create role bindings to associate the my-k10-admins group with the cluster role k10-admin and the namespace-scoped role k10-ns-admin (see K10 RBAC for more information about these roles, which are created by K10 as part of the installation process). This ensures that once a user is authenticated successfully with the OIDC provider, the user is authorized to access K10 if the groups information from the provider matches the groups information in Kubernetes.

Note that if the user is added to k10:admins instead of my-k10-admins in the OIDC provider and to the same group in the Kubernetes cluster, no additional role bindings need to be created, since K10 creates them as a part of the installation process.

For more information about role bindings, see K10 RBAC.

Use the following Helm option:

--set auth.oidcAuth.groupClaim=<group claim>

OIDC Group Prefix

If provided, all groups will be prefixed with this value to prevent conflicts. To disable the group prefix, either remove this setting or set it to "".

Use the following Helm option:

--set auth.oidcAuth.groupPrefix=<group prefix>

Below is a summary of all the options together. These options can be included as part of the initial install command or can be used with a helm upgrade command (see Upgrading K10) to modify an existing installation.

--set auth.oidcAuth.enabled=true \
--set auth.oidcAuth.providerURL="https://okta.example.com" \
--set auth.oidcAuth.redirectURL="https://k10.example.com/" \
--set auth.oidcAuth.scopes="groups profile email" \
--set auth.oidcAuth.prompt="select_account" \
--set auth.oidcAuth.clientID="client ID" \
--set auth.oidcAuth.clientSecret="client secret" \
--set auth.oidcAuth.usernameClaim="email" \
--set auth.oidcAuth.usernamePrefix="-" \
--set auth.oidcAuth.groupClaim="groups" \
--set auth.oidcAuth.groupPrefix=""
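Before installing, it can help to confirm that the provider URL serves an OpenID Connect discovery document, since the document's `scopes_supported` and `claims_supported` fields, where the provider publishes them, indicate which scopes and claims can be requested. The sketch below only builds the discovery URL. The helper name `oidc_discovery_url` is hypothetical, the `/.well-known/openid-configuration` path comes from the OpenID Connect Discovery specification, and whether K10 itself relies on discovery is an assumption.

```shell
# Hypothetical helper: print the discovery document URL for an OIDC provider.
# $1: provider URL, as passed to auth.oidcAuth.providerURL
oidc_discovery_url() {
  # Drop one trailing slash, if present, before appending the well-known path.
  printf '%s/.well-known/openid-configuration\n' "${1%/}"
}

oidc_discovery_url "https://okta.example.com"

# Usage (needs network access to the provider, not run here):
#   curl --silent "$(oidc_discovery_url "https://okta.example.com")"
```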

OpenShift Authentication

This mode can be used to authenticate access to K10 using OpenShift's OAuth server.

Create OAuth Client and Client Secret

Before installing or upgrading K10, a Service Account must be created in the namespace where K10 will be installed. This Service Account represents an OAuth client that will interact with OpenShift's OAuth server.

If the K10 namespace does not exist yet, it must be created before the Service Account is created in it. Assuming that K10 will be installed in a namespace called kasten-io, run:

$ kubectl create namespace kasten-io

Assuming K10 is deployed with the release name k10 and the URL for accessing K10 is https://example.com/k10, use the following command to create a Service Account named k10-dex-sa annotated with the serviceaccounts.openshift.io/oauth-redirecturi.dex annotation. This annotation registers the Service Account as an OAuth client with the OpenShift OAuth server.

$ cat > oauth-sa.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: k10-dex-sa
  namespace: kasten-io
  annotations:
    serviceaccounts.openshift.io/oauth-redirecturi.dex: https://example.com/k10/dex/callback
EOF
$ kubectl create -f oauth-sa.yaml
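In the annotation above, the redirect URI is the K10 URL followed by `/dex/callback`. Assuming that pattern also holds for other dashboard URLs (the text shows it only for https://example.com/k10), the manifest could be generated from the URL as in this sketch. The function name `render_oauth_sa` is hypothetical.

```shell
# Hypothetical helper: print the ServiceAccount manifest for a given
# K10 dashboard URL.
# $1: K10 dashboard URL, for example https://example.com/k10
render_oauth_sa() {
  # Drop a trailing slash so the redirect URI does not contain "//dex".
  dashboard_url=${1%/}
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: k10-dex-sa
  namespace: kasten-io
  annotations:
    serviceaccounts.openshift.io/oauth-redirecturi.dex: ${dashboard_url}/dex/callback
EOF
}

render_oauth_sa "https://example.com/k10/"

# Usage (needs cluster access, not run here):
#   render_oauth_sa "https://example.com/k10" | kubectl create -f -
```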

The Service Account token must be retrieved so that it can be supplied as the Helm option auth.openshift.clientSecret. To retrieve this token, run the following commands:

Create the Secret that will be mapped with k10-dex-sa Service Account.

$ desired_secret_name="k10-dex-sa-secret"
$ kubectl apply --namespace=kasten-io --filename=- <<EOF
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: ${desired_secret_name}
  annotations:
    kubernetes.io/service-account.name: "k10-dex-sa"
EOF

Get the token from the Secret using this command:

$ my_token=$(kubectl -n kasten-io get secret $desired_secret_name -o jsonpath='{.data.token}' | base64 -d)

Install Root CA in K10's namespace

Depending on the OpenShift cluster's configuration, there are two methods to obtain a certificate. If a cluster-wide proxy is not used, then use the first method documented below. Otherwise use the second method.

Obtain certificate from the OpenShift Ingress Operator

In the OpenShift-Ingress-Operator namespace, the Secret Router is responsible for routing encrypted traffic between the client and the target service. This encrypted traffic is usually in the form of HTTPS requests. The Secret Router uses a certificate stored in a Kubernetes Secret object to encrypt the traffic.

$ oc get secret router-ca -n openshift-ingress-operator -o jsonpath='{.data.tls\.crt}' | \
    base64 --decode > custom-ca-bundle.pem

Obtain certificate from the OpenShift cluster-wide proxy

The OpenShift Proxy is responsible for routing incoming requests to the appropriate service or pod in the cluster. The proxy can perform various functions such as load balancing, SSL termination, URL rewriting, and request forwarding.

$ ca_configmap=$(oc get proxy cluster -o=jsonpath='{.spec.trustedCA.name}')
$ oc get configmap "${ca_configmap}" -n openshift-config -o=jsonpath='{.data.ca-bundle\.crt}' > custom-ca-bundle.pem

Note

The name of the Root CA certificate must be custom-ca-bundle.pem

Create a ConfigMap that will contain the certificate

# Choose any name for the ConfigMap

$ oc --namespace kasten-io create configmap custom-ca-bundle-store --from-file=custom-ca-bundle.pem

The Root CA certificate needs to be provided to K10. Add the following to the Helm install command.

--set cacertconfigmap.name=<name-of-the-configmap>

To enable this mode of authentication, enable the following Helm options while installing/upgrading K10. Each Helm value is described in more detail in the next section.

$ helm upgrade k10 kasten/k10 --namespace kasten-io --reuse-values \
    --set auth.openshift.enabled=true \
    --set auth.openshift.serviceAccount="service account" \
    --set auth.openshift.clientSecret=${my_token} \
    --set auth.openshift.dashboardURL="K10's dashboard URL" \
    --set auth.openshift.openshiftURL="OpenShift API server's URL" \
    --set auth.openshift.insecureCA=false \
    --set cacertconfigmap.name=<name-of-the-configmap>

Install K10 with OpenShift authentication

Enable OpenShift Authentication

To enable OpenShift based authentication use the following flag as part of the initial Helm install or subsequent Helm upgrade command.

--set auth.openshift.enabled=true

OpenShift Service Account

Provide the name of the Service Account created in the Create OAuth Client and Client Secret section using the following Helm option.

--set auth.openshift.serviceAccount="k10-dex-sa"

OpenShift Service Account Token

Provide the token corresponding to the Service Account used with the auth.openshift.serviceAccount Helm option. Use the following Helm option.

--set auth.openshift.clientSecret="Token from the service account k10-dex-sa"

K10's Dashboard URL

Provide the URL used for accessing K10's dashboard using the following Helm option.

--set auth.openshift.dashboardURL="https://<URL to k10 gateway service>/<k10 release name>"

OpenShift API Server URL

Provide the URL for accessing OpenShift's API server using the following Helm option.

--set auth.openshift.openshiftURL="OpenShift API server's URL"

Disabling TLS verification to OpenShift API server

The default value for this setting is false. This means that connections to the API server are secure by default. The TLS connections to the API server are verified.

To disable TLS verification, set this value to true.

Use the following Helm option to enable or disable TLS verification of connections to OpenShift's API server.

--set auth.openshift.insecureCA=false

ConfigMap that will contain the certificate

Name of the ConfigMap that contains a certificate for a trusted root certificate authority

--set cacertconfigmap.name=<name-of-the-configmap>

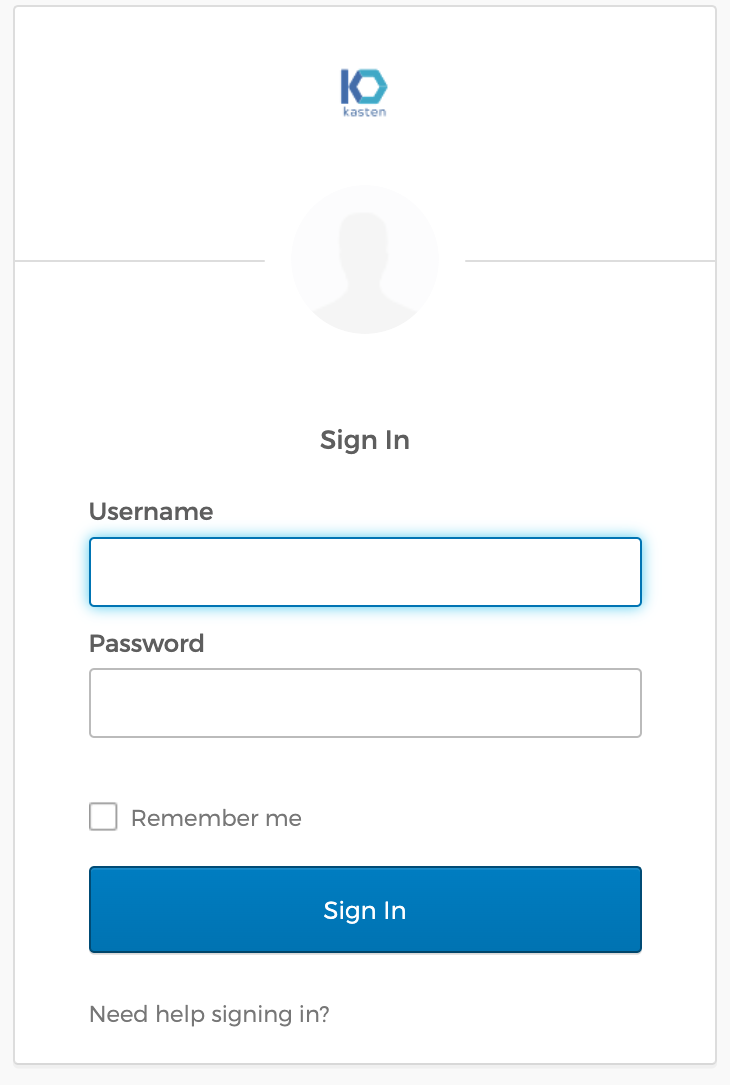

Sample Auth Flow with Screenshots

This section shows screenshots depicting the Auth flow when K10 is installed with OpenShift authentication.

Okta was configured as the OIDC provider in the OpenShift cluster.

An OpenShift group called k10-admins was created and users were added to this group.

A cluster role binding was created to bind the k10-admins group to the k10-admin cluster role.

A role binding was created to map the k10-admins group to the k10-ns-admin role in the K10 namespace.

When the user navigates to the K10 dashboard, the user is redirected to OpenShift's login screen.

After clicking on the OIDC option, okta in this example, the OIDC provider's login screen is shown.

When authentication with the OIDC provider succeeds, the user is redirected to the K10 dashboard.

Active Directory Authentication

This mode can be used to authenticate access to K10 using an Active Directory/LDAP server.

To enable this mode of authentication, enable the following required Helm options while installing/upgrading K10. The required and other optional Helm values are described in more detail in the next section.

$ helm upgrade k10 kasten/k10 --namespace kasten-io --reuse-values \
    --set auth.ldap.enabled=true \
    --set auth.ldap.dashboardURL="K10's dashboard URL" \
    --set auth.ldap.host="host:port" \
    --set auth.ldap.bindDN="DN used for connecting to the server" \
    --set auth.ldap.bindPWSecretName="Secret containing password for connecting to the server" \
    --set auth.ldap.userSearch.baseDN="base DN for user search" \
    --set auth.ldap.userSearch.username="uid" \
    --set auth.ldap.userSearch.idAttr="uid" \
    --set auth.ldap.userSearch.emailAttr="uid" \
    --set auth.ldap.userSearch.nameAttr="uid" \
    --set auth.ldap.userSearch.preferredUsernameAttr="uid" \
    --set auth.ldap.groupSearch.baseDN="base DN for group search" \
    --set auth.ldap.groupSearch.nameAttr="Attribute representing a group's name" \
    --set auth.ldap.groupSearch.userMatchers[0].userAttr="dn" \
    --set auth.ldap.groupSearch.userMatchers[0].groupAttr="member"

Because of the way the --set option works, any commas in LDAP values must be escaped. For instance:

--set auth.ldap.bindDN="CN=demo tkg\,OU=ServiceAccount\,OU=my-department\,DC=my-company\,DC=demo"

Alternatively, you can define the value in a Helm values file without escaping the commas and pass the file to the helm install/upgrade command with the -f option. Example: helm upgrade k10 kasten/k10 --namespace kasten-io -f path_to_values_file.
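Both approaches can be sketched in a few lines of shell. The helper name `escape_helm_commas` and the file name `ldap-values.yaml` are illustrative, and the nesting of the values file is assumed to mirror the `auth.ldap.bindDN` path used with `--set`.

```shell
# Hypothetical helper: escape every comma so that Helm's --set parser
# treats the value as a single string.
escape_helm_commas() {
  printf '%s\n' "$1" | sed 's/,/\\,/g'
}

bind_dn='CN=demo tkg,OU=ServiceAccount,OU=my-department,DC=my-company,DC=demo'
escape_helm_commas "$bind_dn"

# Alternative: write the unescaped value to a values file.
cat > ldap-values.yaml <<EOF
auth:
  ldap:
    bindDN: "${bind_dn}"
EOF

# Usage (needs helm and cluster access, not run here):
#   helm upgrade k10 kasten/k10 --namespace kasten-io \
#     --set auth.ldap.bindDN="$(escape_helm_commas "$bind_dn")"
#   helm upgrade k10 kasten/k10 --namespace kasten-io -f ldap-values.yaml
```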

K10 Setup

Enable Active Directory Authentication

To enable Active Directory based authentication use the following flag as part of the initial Helm install or subsequent Helm upgrade command.

--set auth.ldap.enabled=true

Restart the Authentication Pod

If the Helm option auth.ldap.bindPWSecretName has been used to specify the name of the secret that contains the Active Directory bind password, and if this password is modified after K10 has been installed, use this Helm option to restart the Authentication Service's Pod as part of the Helm upgrade command.

--set auth.ldap.restartPod=true

K10's Dashboard URL

Provide the URL used for accessing K10's dashboard using the following Helm option.

--set auth.ldap.dashboardURL="https://<URL to k10 gateway service>/<k10 release name>"

Active Directory/LDAP host

Provide the host and optional port of the AD/LDAP server in the form host:port using the following Helm option.

--set auth.ldap.host="host:port"

Disable SSL

Set this field to true if the Active Directory/LDAP host is not using TLS, using the following Helm option.

--set auth.ldap.insecureNoSSL="true"

Disable SSL verification

Use the following helm option to set this field to true to disable SSL verification of connections to the Active Directory/LDAP server.

--set auth.ldap.insecureSkipVerifySSL="true"

Start TLS

When set to true, ldap:// is used to connect to the server followed by creation of a TLS session. When set to false, ldaps:// is used.

--set auth.ldap.startTLS="true"

Bind Distinguished Name

Use this helm option to provide the Distinguished Name (username) used for connecting to the Active Directory/LDAP host.

--set auth.ldap.bindDN="cn=admin\,dc=example\,dc=org"

Bind Password

Use this helm option to provide the password corresponding to the bindDN for connecting to the Active Directory/LDAP host.

--set auth.ldap.bindPW="password"

Bind Password Secret Name

Use this helm option to provide the name of the secret that contains the password corresponding to the bindDN for connecting to the AD/LDAP host. This option can be used instead of auth.ldap.bindPW. If both have been configured, then this option overrides auth.ldap.bindPW.

--set auth.ldap.bindPWSecretName="bind-pw-secret"

User Search Base Distinguished Name

Use this helm option to provide the base Distinguished Name to start the Active Directory/LDAP user search from.

--set auth.ldap.userSearch.baseDN="ou=users\,dc=example\,dc=org"

User Search Filter

Use this helm option to provide the optional filter to apply when searching the directory for users.

--set auth.ldap.userSearch.filter="(objectClass=inetOrgPerson)"

User Search Username

Use this helm option to provide the attribute used for comparing user entries when searching the directory.

--set auth.ldap.userSearch.username="uid"

User Search ID Attribute

Use this helm option to provide the Active Directory/LDAP attribute in a user's entry that should map to the user ID field in a token.

--set auth.ldap.userSearch.idAttr="uid"

User Search email Attribute

Use this helm option to provide the Active Directory/LDAP attribute in a user's entry that should map to the email field in a token.

--set auth.ldap.userSearch.emailAttr="uid"

User Search Name Attribute

Use this helm option to provide the Active Directory/LDAP attribute in a user's entry that should map to the name field in a token.

--set auth.ldap.userSearch.nameAttr="uid"

User Search Preferred Username Attribute

Use this helm option to provide the Active Directory/LDAP attribute in a user's entry that should map to the preferred_username field in a token.

--set auth.ldap.userSearch.preferredUsernameAttr="uid"

Group Search Base Distinguished Name

Use this helm option to provide the base Distinguished Name to start the AD/LDAP group search from.

--set auth.ldap.groupSearch.baseDN="ou=users\,dc=example\,dc=org"

Group Search Filter

Use this helm option to provide the optional filter to apply when searching the directory for groups.

--set auth.ldap.groupSearch.filter="(objectClass=groupOfNames)"

Group Search Name Attribute

Use this helm option to provide the Active Directory/LDAP attribute that represents a group's name in the directory.

--set auth.ldap.groupSearch.nameAttr="cn"

Group Search User Matchers

The

userMatchershelm option represents a list. Each entry in this list consists of a pair of fields nameduserAttrandgroupAttr. This helm option is used to find users in the directory based on the condition that, the user entry's attribute represented byuserAttrmust match a group entry's attribute represented bygroupAttr.As an example, suppose a group's definition in the directory looks like the one below:

# k10admins, users, example.org
dn: cn=k10admins,ou=users,dc=example,dc=org
cn: k10admins
objectClass: groupOfNames
member: cn=user1@kasten.io,ou=users,dc=example,dc=org
member: cn=user2@kasten.io,ou=users,dc=example,dc=org
member: cn=user3@kasten.io,ou=users,dc=example,dc=org

Suppose user1's entry in the directory looks like the one below:

# user1@kasten.io, users, example.org
dn: cn=user1@kasten.io,ou=users,dc=example,dc=org
cn: User1
cn: user1@kasten.io
sn: Bar1
objectClass: inetOrgPerson
objectClass: posixAccount
objectClass: shadowAccount
userPassword:: < Removed >
uid: user1@kasten.io
uidNumber: 1000
gidNumber: 1000
homeDirectory: /home/user1@kasten.io

For the example directory entries above, a suitable configuration for userMatchers would be like the one below. If the dn field of a user matches the member field in a group, then the user's record will be returned by the Active Directory/LDAP server.

--set auth.ldap.groupSearch.userMatchers[0].userAttr="dn" \

--set auth.ldap.groupSearch.userMatchers[0].groupAttr="member"
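The matching rule can be illustrated with a small Python sketch. This is a simplified model for intuition only, not K10's or the directory server's implementation. The dictionaries mirror the example entries above, the helper names are hypothetical, and it assumes exact string comparison and that a match on any one matcher is sufficient. A real directory applies its own matching rules.

```python
# Simplified model of the userMatchers rule (illustrative, not K10's code).
# Entries are plain dicts keyed by attribute name; multi-valued attributes are lists.

group = {
    "dn": "cn=k10admins,ou=users,dc=example,dc=org",
    "cn": ["k10admins"],
    "member": [
        "cn=user1@kasten.io,ou=users,dc=example,dc=org",
        "cn=user2@kasten.io,ou=users,dc=example,dc=org",
        "cn=user3@kasten.io,ou=users,dc=example,dc=org",
    ],
}

user1 = {
    "dn": "cn=user1@kasten.io,ou=users,dc=example,dc=org",
    "uid": ["user1@kasten.io"],
}

# Mirrors userMatchers[0].userAttr="dn" and userMatchers[0].groupAttr="member".
user_matchers = [{"userAttr": "dn", "groupAttr": "member"}]


def attr_values(entry, attr):
    """Return the values of an attribute as a list (empty if the attribute is absent)."""
    value = entry.get(attr, [])
    return value if isinstance(value, list) else [value]


def user_matches_group(user, group, matchers):
    """True if, for some matcher, a userAttr value equals a groupAttr value."""
    for matcher in matchers:
        user_vals = set(attr_values(user, matcher["userAttr"]))
        group_vals = set(attr_values(group, matcher["groupAttr"]))
        if user_vals & group_vals:
            return True
    return False


print(user_matches_group(user1, group, user_matchers))
```

With the example entries, user1's dn appears among the group's member values, so the check succeeds. A user whose dn is not listed as a member would not match.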

Sample Auth Flow with Screenshots

This section shows screenshots depicting the Auth flow when K10 is installed with Active Directory authentication.

The AWS Simple AD service was set up as the Active Directory service used by K10 in a Kubernetes cluster deployed in DigitalOcean.

A user named productionadmin was created in the Simple AD service and added to a group named k10admins.

A cluster role binding was created to bind the k10admins group to the k10-admin cluster role.

A role binding was created to bind the k10admins group to the k10-ns-admin role in the K10 namespace.

When the user navigates to the K10 dashboard, the user is redirected to the Active Directory/LDAP login screen.

When authentication with the Active Directory/LDAP server succeeds, the user is redirected to the K10 dashboard.

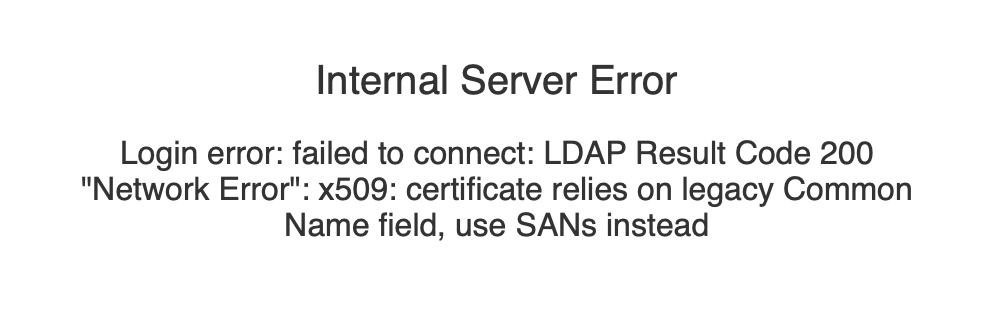

Troubleshooting

Common Name Certificates

Certificates that have a Common Name (CN) but no Subject Alternative Name (SAN) may cause an error to be displayed: "x509: certificate relies on legacy Common Name field, use SANs instead".

This is because the Common Name field of a certificate is no longer used by some clients to verify DNS names. For more information, see RFC 6125, Section 6.4.4.

To correct this error, the certificate must be updated to include the DNS name in the SAN field of the certificate.

If necessary, it is possible to run an older version of Dex until the certificate can be updated with a proper SAN.

Warning

Running with an older version of Dex is not a recommended configuration!

Older images are missing critical security patches and should only be used as a temporary workaround until a new certificate can be provisioned.

To run with an older version of Dex, use the following values:

--set dexImage.registry=quay.io \

--set dexImage.repository=dexidp \

--set dexImage.image=dex \

--set dexImage.tag=v2.25.0 \

--set dexImage.frontendDir=/web

Other Authentication Options

Group Allow List

When using authentication modes such as Active Directory, OpenShift, or OIDC, after a user has successfully authenticated with the authentication provider, K10 creates a JSON Web Token (JWT) that contains information returned by the provider. This includes the groups that a user is a member of.

If the number of groups returned by an authentication provider results in a token whose size is more than 4KB, the token gets dropped and is not returned to the dashboard. This results in a failed login attempt.

The helm option below can be used to reduce the number of groups in the JWT. It represents a list of groups that are allowed admin access to K10's dashboard. These groups will be appended to the list of subjects in the default ClusterRoleBinding that is created when K10 is installed to bind them to the ClusterRole named k10-admin. If the namespace where K10 was installed is kasten-io and the K10 ServiceAccount in that namespace is named k10-k10, then the ClusterRoleBinding would be named kasten-io-k10-k10-admin.

--set auth.groupAllowList[0]="group1" \

--set auth.groupAllowList[1]="group2"
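To see why a long group list can push a token past the size limit, and how an allow list helps, consider the rough Python sketch below. It is an approximation for intuition only: the claim names, the generated group names, and the helper functions are hypothetical, only the encoded claims payload is measured (a real JWT also carries a header and signature), and the filtering is assumed to be a simple intersection with the allow list.

```python
import base64
import json

SIZE_LIMIT = 4096  # the 4KB limit described above


def encoded_claims_size(groups):
    """Bytes in the base64url-encoded claims payload (header and signature excluded)."""
    claims = {"sub": "user1", "groups": groups}  # hypothetical claim names
    raw = json.dumps(claims, separators=(",", ":")).encode("utf-8")
    return len(base64.urlsafe_b64encode(raw))


def apply_allow_list(groups, allow_list):
    """Keep only the groups that appear in the allow list, preserving order."""
    allowed = set(allow_list)
    return [g for g in groups if g in allowed]


# Hypothetical provider response: 300 unrelated groups plus the two of interest.
provider_groups = [f"department-group-{i:04d}" for i in range(300)] + ["group1", "group2"]
allow_list = ["group1", "group2"]  # mirrors auth.groupAllowList[0] and [1]

filtered = apply_allow_list(provider_groups, allow_list)

print("unfiltered exceeds limit:", encoded_claims_size(provider_groups) > SIZE_LIMIT)
print("filtered groups:", filtered)
print("filtered exceeds limit:", encoded_claims_size(filtered) > SIZE_LIMIT)
```

In this sketch the unfiltered payload is well over the limit, while the filtered payload contains only group1 and group2 and stays far below it.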

K10 Admin Groups

Suppose the auth.groupAllowList helm option is defined with the list of groups "admin-group1, basic-group1, basic-group2" to restrict the number of groups included in the JSON Web Token, and the group named admin-group1 is the only group that needs to be set up with admin level access to K10. In that case, use the helm option below.

Instead of the groups in auth.groupAllowList, only the groups in auth.k10AdminGroups will be appended to the list of subjects in the default ClusterRoleBinding that is created when K10 is installed to bind them to the ClusterRole named k10-admin. If the namespace where K10 was installed is kasten-io and the K10 ServiceAccount in that namespace is named k10-k10, then the ClusterRoleBinding would be named kasten-io-k10-k10-admin.

--set auth.k10AdminGroups[0]="group1" \

--set auth.k10AdminGroups[1]="group2"
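The interaction between the two options can be summarized with a short Python sketch. It models only the behavior described above and is not K10's implementation. The function names are hypothetical, and the binding name pattern is inferred from the single kasten-io / k10-k10 example, so treat it as an assumption.

```python
def admin_binding_name(namespace, service_account):
    """Assumed naming pattern, inferred from the kasten-io / k10-k10 example."""
    return f"{namespace}-{service_account}-admin"


def admin_group_subjects(group_allow_list, k10_admin_groups):
    """Group subjects appended to the default ClusterRoleBinding.

    If k10AdminGroups is set, only those groups are used; otherwise the
    groups in groupAllowList are used.
    """
    names = k10_admin_groups if k10_admin_groups else group_allow_list
    # Standard Kubernetes RBAC subject shape for a group.
    return [
        {"kind": "Group", "apiGroup": "rbac.authorization.k8s.io", "name": name}
        for name in names
    ]


allow_list = ["admin-group1", "basic-group1", "basic-group2"]
admin_groups = ["admin-group1"]

print(admin_binding_name("kasten-io", "k10-k10"))
for subject in admin_group_subjects(allow_list, admin_groups):
    print(subject["kind"], subject["name"])
```

With both options set as in the scenario above, all three groups can appear in the token, but only admin-group1 is bound to the k10-admin ClusterRole.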

K10 Admin Users

This helm option can be used to define a list of users who are granted admin level access to K10's dashboard. The users in auth.k10AdminUsers will be appended to the list of subjects in the default ClusterRoleBinding that is created when K10 is installed to bind them to the ClusterRole named k10-admin. If the namespace where K10 was installed is kasten-io and the K10 ServiceAccount in that namespace is named k10-k10, then the ClusterRoleBinding would be named kasten-io-k10-k10-admin.

--set auth.k10AdminUsers[0]="user1" \

--set auth.k10AdminUsers[1]="user2"