Advanced Install Options

FREE K10 Edition and Licensing

By default, K10 comes with an embedded Free Edition license. The free edition license allows you to use the software on a cluster with at most 50 worker nodes during the first 30 days, and at most 5 worker nodes after that. To continue using the free license, you may need to upgrade regularly to stay within the 6-month support window. You can remove the node restriction by upgrading to the Enterprise Edition and obtaining the appropriate license from the Kasten team.
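The limits above can be expressed as a simple check, for example when deciding whether a cluster still fits the free edition. This is a sketch only: `within_free_limit` is a hypothetical helper, not part of K10, and it assumes that "the first 30 days" means fewer than 30 full days since installation.

```shell
# Hypothetical helper: checks a worker node count against the free-edition
# limits described above (50 worker nodes during the first 30 days after
# installation, 5 worker nodes afterwards).
# Usage: within_free_limit <days_since_install> <worker_node_count>
within_free_limit() {
  days_since_install=$1
  worker_nodes=$2
  if [ "$days_since_install" -lt 30 ]; then
    node_limit=50
  else
    node_limit=5
  fi
  # Exit status 0 means the cluster is within the limit.
  [ "$worker_nodes" -le "$node_limit" ]
}

within_free_limit 10 40 && echo "10 days in, 40 nodes: within the limit"
within_free_limit 45 8 || echo "45 days in, 8 nodes: over the limit"
```

A rough node count for the second argument can come from `kubectl get nodes --no-headers | wc -l`, keeping in mind that this counts every node, including control-plane nodes, while the limits above refer to worker nodes.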

Using A Custom License During Install

To install a license provided by Kasten that removes the node restriction, add the following to any of the helm install commands. K10 retrieves the license key dynamically, so a pod restart is not required.

```shell
...
--set license=<license-text>
```
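Passing the license with `--set` leaves it in the shell history. As an alternative sketch, the same value can be placed in a values file and passed with Helm's `-f` (`--values`) flag. The file name `license-values.yaml` is arbitrary, and `<license-text>` is the same placeholder used above.

```shell
# Write the license into a Helm values file instead of using --set.
# Replace <license-text> with the license string provided by Kasten.
# Quoting 'EOF' stops the shell from expanding anything in the text.
cat << 'EOF' > license-values.yaml
license: <license-text>
EOF
```

The file is then added to the install command, for example `helm install k10 kasten/k10 --namespace=kasten-io -f license-values.yaml`.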

Changing Licenses

To add a new license to K10, create a secret in the K10 namespace (kasten-io by default) with the license text stored in a field named license. To do this from the command line, run:

```shell
$ kubectl create secret generic <license-secret-name> \
    --namespace kasten-io \
    --from-literal=license="$(echo '<base64_encoded_license>' | base64 --decode)"
```

Multiple license secrets can exist simultaneously, and K10 checks whether any of them is valid. Because this check runs periodically, K10 does not need to be restarted when a different existing license becomes applicable (e.g., after a cluster expansion or when an old license expires) or when a new license is added.

The resulting license secret will look like:

```yaml
apiVersion: v1
data:
  license: Y3Vz...
kind: Secret
metadata:
  creationTimestamp: "2020-04-14T23:50:05Z"
  labels:
    app: k10
    app.kubernetes.io/instance: k10
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: k10
    helm.sh/chart: k10-5.5.3
    heritage: Helm
    release: k10
  name: k10-custom-license
  namespace: kasten-io
type: Opaque
```
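The `license` value under `data` is not the raw license text: Kubernetes stores every `data` value base64-encoded. The `kubectl create secret` command above therefore decodes the Kasten-provided value once, and Kubernetes encodes it again when storing it. The sketch below illustrates that round trip with a made-up string in place of a real license; it does not contact a cluster.

```shell
# Illustration only: 'example license text' stands in for a real license.
license_b64=$(printf '%s' 'example license text' | base64)

# What --from-literal receives: the decoded text.
license_text=$(printf '%s' "$license_b64" | base64 --decode)

# What Kubernetes then stores under .data.license: the text, re-encoded.
stored=$(printf '%s' "$license_text" | base64)

[ "$stored" = "$license_b64" ] && echo "round trip preserved the license"
```

To inspect the license stored in a cluster, the value can be decoded directly, for example with `kubectl get secret <license-secret-name> --namespace kasten-io -o jsonpath='{.data.license}' | base64 --decode`.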

Similarly, an old license can be removed by deleting the secret that contains it:

```shell
$ kubectl delete secret <license-secret-name> \
    --namespace kasten-io
```

License Grace period

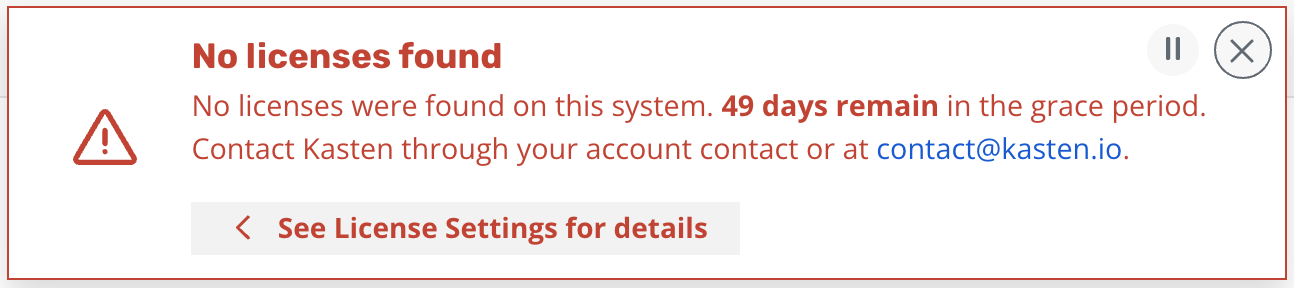

If the license status of the cluster becomes invalid (e.g., the licensed node limit is exceeded), the ability to perform manual actions or creating new policies will be disabled but your previously scheduled policies will continue to run for 50 days. The displayed warning will be look like:

By default, K10 provides a grace period of 50 days to ensure that applications remain protected while a new license is obtained or the cluster is brought back into compliance by reducing the number of nodes. K10 will stop the creation of any new jobs (scheduled or manual) after the grace period expires.

If the cluster's license status frequently switches between valid and invalid, the time spent in an invalid state is subtracted from subsequent grace periods.
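One reading of the rule above is that each new grace period equals the default 50 days minus all the time the license has already spent in an invalid state. The sketch below encodes that reading; `remaining_grace_days` is a hypothetical helper, counting in whole days is a simplifying assumption, and K10's actual bookkeeping may differ.

```shell
# Hypothetical helper: grace days available for a new invalid period, given
# the number of days the license has already spent invalid in earlier periods.
remaining_grace_days() {
  grace_period_days=50            # K10's default grace period
  days_already_invalid=$1
  remaining=$((grace_period_days - days_already_invalid))
  # The grace period cannot go below zero.
  if [ "$remaining" -lt 0 ]; then
    remaining=0
  fi
  echo "$remaining"
}

remaining_grace_days 0    # prints 50
remaining_grace_days 12   # prints 38
remaining_grace_days 60   # prints 0
```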

Node usage for the last two months is shown under Settings -> Licenses. Usage is tracked from the date K10 4.5.8 or later was installed, and starting with 5.0.11 the same information is also available through Prometheus.

Manually Creating or Using an Existing Service Account

The following instructions create a new Service Account that grants K10 the required permissions to Kubernetes resources, and then use that Service Account as part of the install process. The instructions assume that K10 will be installed in the kasten-io namespace.

```shell
# Create the kasten-io namespace if you have not done so yet.
$ kubectl create namespace kasten-io

# Create a ServiceAccount named k10-sa for K10.
$ kubectl --namespace kasten-io create sa k10-sa

# Create a cluster role binding that grants cluster-admin to k10-sa.
$ kubectl create clusterrolebinding k10-sa-rb \
    --clusterrole cluster-admin \
    --serviceaccount=kasten-io:k10-sa
```

Following the SA creation, you can install K10 using:

```shell
$ helm install k10 kasten/k10 --namespace=kasten-io \
    --set rbac.create=false \
    --set serviceAccount.create=false \
    --set serviceAccount.name=k10-sa
```
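The three `--set` flags can also be kept in a values file, which is easier to reuse across upgrades. This is a sketch: the file name `k10-sa-values.yaml` is arbitrary, and the nesting follows Helm's standard mapping of dotted `--set` keys to YAML.

```shell
# Values-file equivalent of:
#   --set rbac.create=false --set serviceAccount.create=false \
#   --set serviceAccount.name=k10-sa
cat << 'EOF' > k10-sa-values.yaml
rbac:
  create: false
serviceAccount:
  create: false
  name: k10-sa
EOF
```

The file would be used as `helm install k10 kasten/k10 --namespace=kasten-io -f k10-sa-values.yaml`. Before installing, `kubectl auth can-i '*' '*' --as=system:serviceaccount:kasten-io:k10-sa` should print `yes` if the cluster-admin binding above is in place.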

Using Red Hat certified upstream container images

By default, the upstream container images that K10 uses (e.g., Ambassador, Dex) are not Red Hat certified. If the Red Hat certified versions of those images are required, use this Helm flag:

```shell
--set global.upstreamCertifiedImages=true
```
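The same flag can be expressed in a values file; a minimal sketch, with an arbitrary file name:

```shell
# Values-file equivalent of --set global.upstreamCertifiedImages=true
cat << 'EOF' > redhat-images-values.yaml
global:
  upstreamCertifiedImages: true
EOF
```

The file is passed to `helm install` with `-f redhat-images-values.yaml`.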

Pinning K10 to Specific Nodes

While not generally recommended, there might be situations (e.g., test environments, nodes reserved for infrastructure tools, or clusters without autoscaling enabled) where K10 might need to be pinned to a subset of nodes in your cluster. You can do this easily with an existing deployment by using a combination of NodeSelectors and Taints and Tolerations.

The following example demonstrates how to modify the deployments to accomplish this. It assumes that the nodes you want to restrict K10 to have the label selector-key: selector-value and the taint taint-key=taint-value:NoSchedule.

```shell
$ cat << EOF > patch.yaml
spec:
  template:
    spec:
      nodeSelector:
        selector-key: selector-value
      tolerations:
      - key: "taint-key"
        operator: "Equal"
        value: "taint-value"
        effect: "NoSchedule"
EOF

$ kubectl get deployment --namespace kasten-io | awk 'FNR == 1 {next} {print $1}' \
    | xargs -I DEP kubectl patch deployments DEP --namespace kasten-io --patch "$(cat patch.yaml)"
```
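The example assumes the label and taint already exist on the target nodes. If they do not, they can be applied before patching with `kubectl label` and `kubectl taint`. In the sketch below, `prepare_k10_node` is a hypothetical helper and `node-1` a placeholder node name; setting `KUBECTL=echo` prints the commands instead of running them, which allows a dry check.

```shell
# Hypothetical helper: adds the label and taint assumed by patch.yaml
# to one node. KUBECTL defaults to kubectl; set KUBECTL=echo to preview.
prepare_k10_node() {
  node_name=$1
  "${KUBECTL:-kubectl}" label nodes "$node_name" selector-key=selector-value
  "${KUBECTL:-kubectl}" taint nodes "$node_name" taint-key=taint-value:NoSchedule
}

# Preview the commands for a placeholder node without touching the cluster.
KUBECTL=echo prepare_k10_node node-1
```

After patching, `kubectl get pods --namespace kasten-io -o wide` shows the node each K10 pod was scheduled on.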

Running K10 Containers as a Specific User

K10 service containers run with UID and fsGroup 1000 by default.

If the storage class that K10 uses for its own services requires the containers to run as a specific user, the user can be changed.

This is often needed when using shared storage, such as NFS, where permissions on the target storage require a specific user.

To run as a specific user (e.g., root (0)), add the following to the Helm install command:

```shell
--set services.securityContext.runAsUser=0 \
--set services.securityContext.fsGroup=0 \
--set prometheus.server.securityContext.runAsUser=0 \
--set prometheus.server.securityContext.runAsGroup=0 \
--set prometheus.server.securityContext.runAsNonRoot=false \
--set prometheus.server.securityContext.fsGroup=0
```

Other SecurityContext settings for the K10 service containers can be specified using the --set services.securityContext.<setting name> and --set prometheus.server.securityContext.<setting name> options.
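As a sketch, the root-user flags above map to the following values file (the file name is arbitrary); each dotted `--set` key becomes one level of YAML nesting.

```shell
# Values-file equivalent of the --set flags above (running as root, UID 0).
cat << 'EOF' > run-as-root-values.yaml
services:
  securityContext:
    runAsUser: 0
    fsGroup: 0
prometheus:
  server:
    securityContext:
      runAsUser: 0
      runAsGroup: 0
      runAsNonRoot: false
      fsGroup: 0
EOF
```

If this file is combined with `--set` flags in the same `helm install`, values given with `--set` take precedence over those read from `-f` files.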

Using Kubernetes Endpoints for Service Discovery

The K10 API gateway uses Kubernetes DNS to discover and route requests to K10 services.

If Kubernetes DNS is disabled or not working, K10 can be configured to use Kubernetes endpoints for service discovery.

To do this, add the following to the Helm install command:

```shell
--set apigateway.serviceResolver=endpoint
```
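A minimal values-file sketch of the same setting, with an arbitrary file name:

```shell
# Values-file equivalent of --set apigateway.serviceResolver=endpoint
cat << 'EOF' > endpoint-resolver-values.yaml
apigateway:
  serviceResolver: endpoint
EOF
```

Several values files can be passed in one install by repeating `-f`; when the same key appears in more than one file, Helm uses the value from the file given last.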

Configuring Prometheus

Prometheus is an open-source system monitoring and alerting toolkit. K10 is bundled with Prometheus chart version 14.1.1. Any of the Prometheus chart values can be used during the K10 Helm installation.

When passing values from the command line, each value key must be prefixed with the prometheus. string:

```shell
--set prometheus.server.persistentVolume.storageClass=default.sc
```

When passing values in a YAML file, all Prometheus settings should be under the prometheus key:

```yaml
# values.yaml

# global values - apply to both K10 and prometheus
global:
  persistence:
    storageClass: default-sc

# K10 specific settings
auth:
  basicAuth:
    enabled: true

# prometheus specific settings
prometheus:
  server:
    persistentVolume:
      storageClass: another-sc
```

Note

Only the Helm values listed in the Complete List of K10 Helm Options can be used to change the configuration of the bundled Prometheus. Any undocumented configuration is not supported and may affect the functionality of K10.

Complete List of K10 Helm Options

The following table lists the configurable parameters of the K10 chart and their default values.

| Parameter | Description | Default |
|---|---|---|
| | Whether to accept the EULA before installation | |
| | Company name. Required field if EULA is accepted | |
| | Contact email. Required field if EULA is accepted | |
| | License string obtained from Kasten | |
| | Whether to enable RBAC with a specific cluster role and binding for K10 | |
| | Whether to create a SecurityContextConstraints for K10 ServiceAccounts | |
| | Whether the dashboardbff pods may use the node network | |
| | Whether the executor pods may use the node network | |
| | Number of executor workers to run | 8 |
| | Limit of concurrent CSI snapshot restore operations per restore action | 3 |
| | Limit of concurrent generic volume snapshot restore operations per restore action | 3 |
| | Limit of concurrent workload restore operations per restore action | 3 |
| | Whether the aggregatedapis pods may use the node network | |
| | Specifies whether a ServiceAccount should be created | |
| | The name of the ServiceAccount to use. If not set, a name is derived using the release and chart names. | |
| | Specifies whether the K10 dashboard should be exposed via ingress | |
| | Cluster ingress controller class: | |
| | FQDN (e.g., | |
| | URL path for K10 Dashboard (e.g., | |
| | Additional Ingress object annotations | |
| | Configures a TLS use for | |
| | Specifies the name of the TLS secret | |
| | Specifies the path type for the ingress resource | |
| | Default global size of volumes for K10 persistent services | |
| | Size of a volume for catalog service | |
| | Size of a volume for jobs service | |
| | Size of a volume for logging service | |
| | Size of a volume for metering service | |
| | StorageClassName to use for PVCs | |
| | Specify the helm repository for offline (airgapped) installation | |
| | Provide secret which contains docker config for private repository. Use | |
| | Provide external prometheus host name | |
| | Provide external prometheus port number | |
| | Provide Base URL of external prometheus | |
| | Enable | |
| | AWS access key ID (required for AWS deployment) | |
| | AWS access key secret | |
| | ARN of the AWS IAM role assumed by K10 to perform any AWS operation. | |
| | Non-default base64 encoded GCP Service Account key file | |
| | Azure tenant ID (required for Azure deployment) | |
| | Azure Service App ID | |
| | Azure Service App secret | |
| | Resource Group name that was created for the Kubernetes cluster | |
| | Subscription ID in your Azure tenant | |
| | Resource management endpoint for the Azure Stack instance | |
| | Azure Active Directory login endpoint | |
| | Azure Active Directory resource ID to obtain AD tokens | |
| | Azure Cloud Environment ID | |
| | vSphere endpoint for login | |
| | vSphere username for login | |
| | vSphere password for login | |
| | Use --set-file secrets.dockerConfigPath=path_to_docker_config.yaml to specify docker config for image pull | |
| | Name of the ConfigMap that contains a certificate for a trusted root certificate authority | |
| | Cluster name for better log visibility | |
| | Sets AWS_REGION for metering service | |
| | Control license reporting (set to | |
| | Sets metric report collection period (in seconds) | |
| | Sets metric report push period (in seconds) | |
| | Sets K10 promotion ID from marketing campaigns | |
| | Sets AWS cloud metering license mode | |
| | Sets AWS managed license mode | |
| | Sets Red Hat cloud metering license mode | |
| | Sets AWS managed license config secret | |
| | Configures an external gateway for K10 API services | |
| | Standard annotations for the services | |
| | Domain name for the K10 API services | |
| | Supported gateway type: | |
| | ARN for the AWS ACM SSL certificate used in the K10 API server | |
| | Configures basic authentication for the K10 dashboard | |
| | A username and password pair separated by a colon character | |
| | Name of an existing Secret that contains a file generated with htpasswd | |
| | A list of groups whose members are granted admin level access to K10's dashboard | |
| | A list of users who are granted admin level access to K10's dashboard | |
| | Configures token based authentication for the K10 dashboard | |
| | Configures Open ID Connect based authentication for the K10 dashboard | |
| | URL for the OIDC Provider | |
| | URL to the K10 gateway service | |
| | Space separated OIDC scopes required for userinfo. Example: "profile email" | |
| | The type of prompt to be used during authentication (none, consent, login or select_account) | |
| | Client ID given by the OIDC provider for K10 | |
| | Client secret given by the OIDC provider for K10 | |
| | The claim to be used as the username | |
| | Prefix that has to be used with the username obtained from the username claim | |
| | Name of a custom OpenID Connect claim for specifying user groups | |
| | All groups will be prefixed with this value to prevent conflicts | |
| | Enables access to the K10 dashboard by authenticating with the OpenShift OAuth server | |
| | Name of the service account that represents an OAuth client | |
| | The token corresponding to the service account | |
| | The URL used for accessing K10's dashboard | |
| | The URL for accessing OpenShift's API server | |
| | To turn off SSL verification of connections to OpenShift | |
| | Set this to true to use the CA certificate corresponding to the Service Account | |
| | Configures Active Directory/LDAP based authentication for the K10 dashboard | |
| | To force a restart of the authentication service pod (useful when updating authentication config) | |
| | The URL used for accessing K10's dashboard | |
| | Host and optional port of the AD/LDAP server in the form | |
| | Required if the AD/LDAP host is not using TLS | |
| | To turn off SSL verification of connections to the AD/LDAP host | |
| | When set to true, ldap:// is used to connect to the server followed by creation of a TLS session. When set to false, ldaps:// is used. | |
| | The Distinguished Name (username) used for connecting to the AD/LDAP host | |
| | The password corresponding to the | |
| | The name of the secret that contains the password corresponding to the | |
| | The base Distinguished Name to start the AD/LDAP search from | |
| | Optional filter to apply when searching the directory | |
| | Attribute used for comparing user entries when searching the directory | |
| | AD/LDAP attribute in a user's entry that should map to the user ID field in a token | |
| | AD/LDAP attribute in a user's entry that should map to the email field in a token | |
| | AD/LDAP attribute in a user's entry that should map to the name field in a token | |
| | AD/LDAP attribute in a user's entry that should map to the preferred_username field in a token | |
| | The base Distinguished Name to start the AD/LDAP group search from | |
| | Optional filter to apply when searching the directory for groups | |
| | The AD/LDAP attribute that represents a group's name in the directory | |
| | List of field pairs that are used to match a user to a group. | |
| | Attribute in the user's entry that must match with the | |
| | Attribute in the group's entry that must match with the | |
| | A list of groups whose members are allowed access to K10's dashboard | |
| | Custom security context for K10 service containers | |
| | User ID K10 service containers run as | |
| | Group ID K10 service containers run as | |
| | FSGroup that owns K10 service container volumes | |
| | Enable Kanister sidecar injection for workload pods | |
| | Set of labels to select namespaces in which sidecar injection is enabled for workloads | |
| | Set of labels to filter workload objects in which the sidecar is injected | |
| | Port number on which the mutating webhook server accepts requests | |
| | Specifies whether to disable SSL verification for gateway pods | |
| | Specifies whether to expose Admin port for gateway service | |
| | Resource requests and limits for gateway pod | |
| | Resource requests and limits for Generic Volume Snapshot restore pods | |
| | (optional) Set Prometheus image registry. | |
| | (optional) Set Prometheus image repository. | |
| | (optional) Prometheus init container configuration. Enable | |
| | (optional) Whether to create Prometheus RBAC configuration. Warning: this allows Prometheus to scrape pods in all Kubernetes namespaces | |
| | (optional) Enable Prometheus | |
| | (optional) Enable Prometheus | |
| | (optional) Enable Prometheus | |
| | (optional) Enable Prometheus | |
| | (optional) Enable Prometheus | |
| | (optional) Enable Prometheus ScrapeCAdvisor | |
| | (optional) If false, K10's Prometheus server will not be created, reducing the dashboard's functionality. | |
| | (optional) Set security context | |
| | (optional) Enable security context | |
| | (optional) Set security context | |
| | (optional) Set security context | |
| | (optional) K10 Prometheus data retention | |
| | (optional) The number of Prometheus server pods that can be created above the desired amount of pods during an update | |
| | (optional) The number of Prometheus server pods that can be unavailable during the upgrade process | |
| | (optional) Change default deployment strategy for Prometheus server | |
| | (optional) If true, K10 Prometheus server will create a Persistent Volume Claim | |
| | (optional) K10 Prometheus server data Persistent Volume size | |
| | (optional) StorageClassName used to create Prometheus PVC. Setting this option overwrites global StorageClass value | |
| | (optional) Prometheus configmap name to override default generated name | |
| | (optional) Prometheus deployment name to override default generated name | |
| | (optional) K10 Prometheus external url path at which the server can be accessed | |
| | (optional) K10 Prometheus prefix slug at which the server can be accessed | |
| | (optional) Set true to create ServiceAccount for Prometheus | |
| | (optional) Set true to create ServiceAccount for Prometheus | |
| | (optional) Set true to create ServiceAccount for Prometheus | |
| | (optional) Set true to create ServiceAccount for Prometheus | |
| | (optional) Set true to create ServiceAccount for Prometheus server service | |
| | (optional) If false Grafana will not be available | |
| | (optional) URL for Prometheus datasource in Grafana (must match | |
| | Overwrite default K10 container resource requests and limits | varies by container |
| | Specifies whether the K10 dashboard should be exposed via route | |
| | FQDN (e.g., | |
| | URL path for K10 Dashboard (e.g., | |
| | Additional Route object annotations | |
| | Additional Route object labels | |
| | Configures a TLS use for | |
| | Specifies behavior for insecure scheme traffic | |
| | Specifies the TLS termination of the route | |
| | Specifies the resolver used for service discovery in the API gateway ( | |
| | Limit of concurrent generic volume snapshot create operations | |
| | Limit of concurrent generic volume snapshot copy operations | |
| | Limit of concurrent generic volume snapshot restore operations | |
| | Limit of concurrent CSI snapshot create operations | |
| | Limit of concurrent cloud provider create operations | |
| | Specifies the domain name of the cluster | |
| | Specifies timeout to set on Kanister backup operations | |
| | Specifies timeout to set on Kanister restore operations | |
| | Specifies timeout to set on Kanister delete operations | |
| | Specifies timeout to set on Kanister pre-hook and post-hook operations | |
| | Specifies timeout to set on Kanister checkRepo operations | |
| | Specifies timeout to set on Kanister stats operations | |
| | Specifies timeout to set on Kanister efsPostRestore operations | |
| | Duration of a session token generated by AWS for an IAM role. The minimum value is 15 minutes and the maximum value is the maximum duration setting for that IAM role. For documentation about how to view and edit the maximum session duration for an IAM role see https://docs.aws.amazon.com/IAM/latest/UserGuide/id_roles_use.html#id_roles_use_view-role-max-session. The value accepts a number along with a single character | |
| | Specifies the AWS EFS backup vault name | |
| | Specifies the timeout for VMware operations | |
| | Specifies the AWS CMK key ID for encrypting K10 Primary Key | |
| | Sets garbage collection period (in seconds) | |
| | Sets maximum actions to keep | |
| | Enables | |
| | Enables | |
| | Defines the time duration within which the VMs must be unfrozen while backing them up. For the format, refer to the Go documentation | |
| | Specifies a list of applications to be excluded from the dashboard & compliance considerations. Format should be a YAML array | |